Building Stream Processing Pipelines With Dataflow

To run a pipeline in streaming mode, set the streaming flag on the command line when you run your pipeline. You can also set the streaming mode programmatically when you construct your pipeline.
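The two ways of enabling streaming mode can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not the Dataflow or Apache Beam API: the `Options` class and the `build_options` helper are hypothetical stand-ins. In the Beam Python SDK the equivalent setting is the `streaming` attribute of `StandardOptions`, set either with `--streaming` or with `options.view_as(StandardOptions).streaming = True`.

```python
import argparse
from dataclasses import dataclass


@dataclass
class Options:
    """Hypothetical stand-in for a pipeline options object."""
    streaming: bool = False


def build_options(argv):
    """Parse a --streaming flag from a list of command-line arguments."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--streaming", action="store_true",
                        help="run the pipeline in streaming mode")
    # Ignore any other arguments; a real runner accepts many more options.
    known, _unknown = parser.parse_known_args(argv)
    return Options(streaming=known.streaming)


# Approach 1: the flag is passed on the command line.
from_flag = build_options(["--streaming"])

# Approach 2: the mode is set programmatically while constructing the pipeline.
programmatic = build_options([])
programmatic.streaming = True

print(from_flag.streaming, programmatic.streaming)
```

Both approaches end in the same state; the command-line flag is convenient when the same code should run in either mode, while the programmatic setting suits pipelines that only make sense as streaming jobs.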

Serverless Data Processing With Dataflow: Develop Pipelines

Create a streaming data pipeline to capture taxi revenue, passengers, trip status and more, and visualise the results in a management dashboard, with key metrics shown in Looker Studio.

This tutorial focuses on constructing data pipelines using Google Dataflow, a fully managed service that simplifies stream and batch processing. We will explore Dataflow's capabilities, provide practical examples, and examine advanced features for effective data manipulation.

Integration with Google Cloud services: Dataflow integrates with other Google Cloud services, such as BigQuery, Cloud Storage, and Pub/Sub. This integration simplifies data ingestion, storage, and analysis, creating a cohesive environment for end-to-end data processing.

Google Cloud Dataflow provides a simple, powerful model for building both batch and streaming parallel data processing pipelines. The GoogleCloudPlatform DataflowSDK examples repository hosts a few example pipelines to get you started with Dataflow.
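To make the taxi example more concrete, here is a minimal sketch of the kind of aggregation such a streaming pipeline computes: ride events are grouped into fixed 60-second windows by event timestamp, and revenue, passengers, and ride counts are summed per window. It is plain Python rather than a Dataflow job, and the field names (`timestamp`, `fare`, `passenger_count`) and the window size are assumptions made for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # assumed window size


def window_start(timestamp, size=WINDOW_SECONDS):
    """Return the start of the fixed window that contains the timestamp."""
    return timestamp - (timestamp % size)


def aggregate(events, size=WINDOW_SECONDS):
    """Sum revenue, passengers, and rides for each fixed window."""
    totals = defaultdict(lambda: {"revenue": 0.0, "passengers": 0, "rides": 0})
    for event in events:
        window = totals[window_start(event["timestamp"], size)]
        window["revenue"] += event["fare"]
        window["passengers"] += event["passenger_count"]
        window["rides"] += 1
    # Return the windows ordered by start time.
    return dict(sorted(totals.items(), key=lambda item: item[0]))


# Hypothetical ride events; timestamps are seconds since an arbitrary start.
events = [
    {"timestamp": 5, "fare": 12.5, "passenger_count": 1},
    {"timestamp": 42, "fare": 7.5, "passenger_count": 2},
    {"timestamp": 61, "fare": 20.0, "passenger_count": 3},
]

result = aggregate(events)
for start, totals in result.items():
    print(start, totals)
```

A real streaming job also has to deal with late and out-of-order events, which this sketch ignores by processing a finished list.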

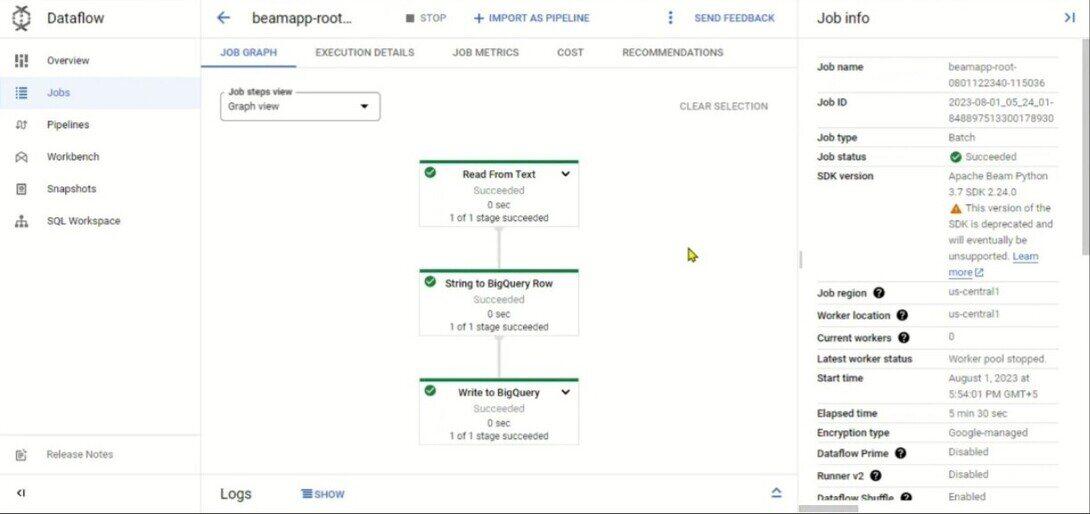

Building Data Pipelines With Google Cloud Dataflow: ETL Processing

In this lab, you (a) build a batch ETL pipeline in Apache Beam, which takes raw data from Google Cloud Storage and writes it to BigQuery, (b) run the Apache Beam pipeline on Dataflow, and (c) parameterize the execution of the pipeline. In this module, you will learn how to process streaming data with Dataflow.

Dataflow has two data pipeline types, streaming and batch. Both types of pipeline run jobs that are defined in Dataflow templates. A streaming data pipeline runs a Dataflow …

Dataflow simplifies the process of building data pipelines for both stream and batch processing. Whether you're processing real-time data streams or handling large volumes of batch data, …
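The shape of the batch ETL lab described above (extract raw data, transform it into rows, load it into a table, and parameterize the run) can be sketched in plain Python. This is not Apache Beam code: the in-memory string stands in for a file in Cloud Storage, the `sink` dictionary stands in for BigQuery, and the argument names, bucket path, table name, and record fields are all hypothetical.

```python
import argparse
import csv
import io


def parse_args(argv):
    """Parameterize the run instead of hard-coding source and destination."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--input", required=True)         # e.g. a Cloud Storage path
    parser.add_argument("--output_table", required=True)  # e.g. dataset.table
    return parser.parse_args(argv)


def extract(raw_text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(records):
    """Transform: cast fields to typed values and drop malformed records."""
    rows = []
    for record in records:
        try:
            rows.append({"user_id": record["user_id"],
                         "bytes": int(record["bytes"])})
        except (KeyError, TypeError, ValueError):
            continue  # skip records that cannot be converted
    return rows


def load(rows, table, sink):
    """Load: append rows to the named table in the stand-in sink."""
    sink.setdefault(table, []).extend(rows)


# Stand-in for the contents of the input file named by --input.
RAW = "user_id,bytes\nalice,120\nbob,not_a_number\ncarol,300\n"

args = parse_args(["--input", "gs://example-bucket/events.csv",
                   "--output_table", "example_dataset.events"])
sink = {}
load(transform(extract(RAW)), args.output_table, sink)
print(sink[args.output_table])
```

Keeping extract, transform, and load as separate functions mirrors how a pipeline is split into stages, and passing the source and destination as arguments lets the same code run against different inputs without edits.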
