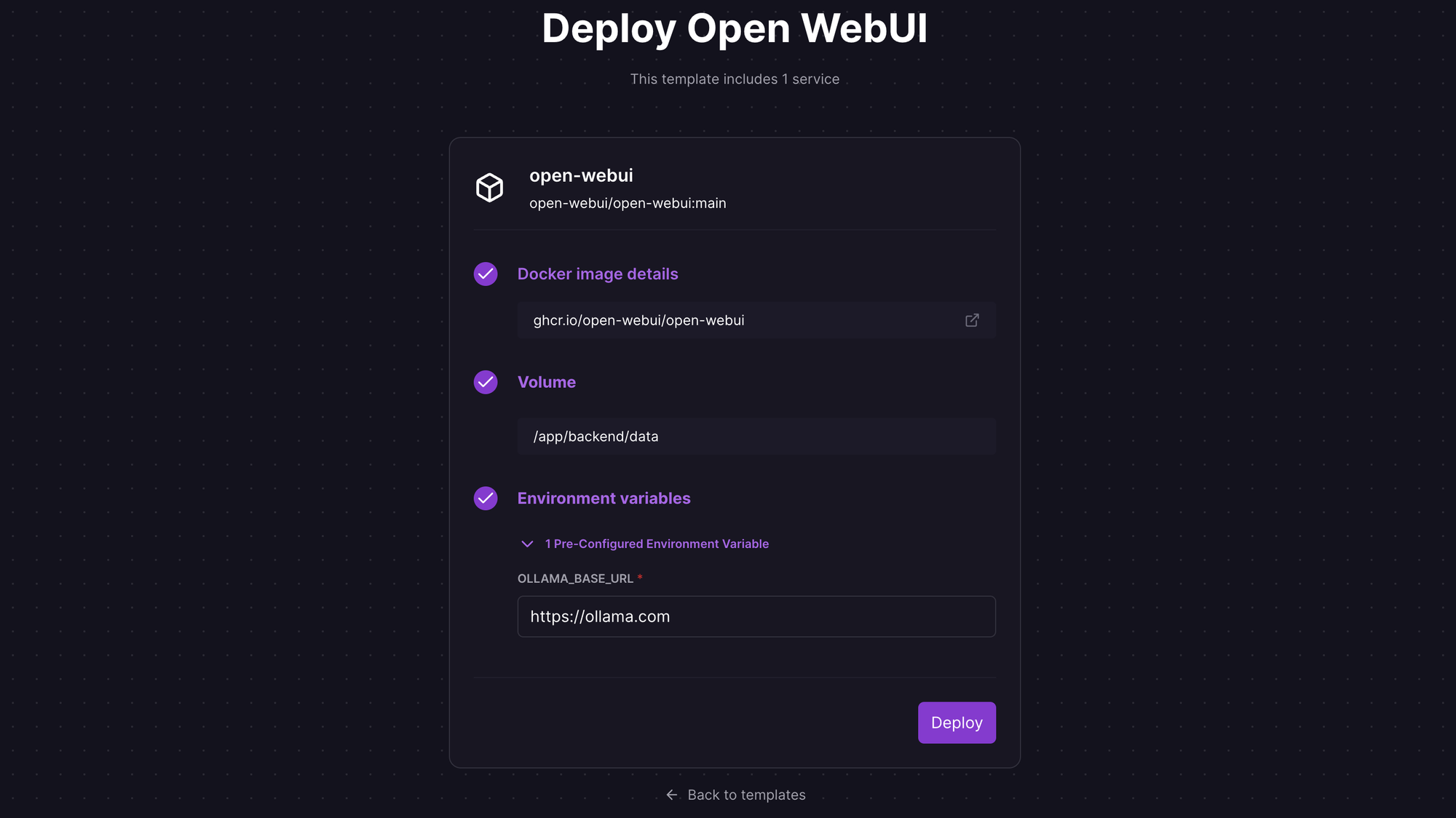

Most likely you can resolve this by assuming that you are not on the same layer 2 network segment as the Ollama server and configuring accordingly. The Ollama server listens only on 127.0.0.1 by default, so you need to set the environment variable `OLLAMA_HOST=0.0.0.0`, and possibly also `OLLAMA_ORIGINS=*`, per the Ollama FAQ. Open WebUI requires reasoning (thinking) content to be rendered with …
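As a minimal sketch of the Ollama bind-address settings described above, the snippet below builds an environment with `OLLAMA_HOST` and `OLLAMA_ORIGINS` set and can launch `ollama serve` with it. The helper names `ollama_env` and `start_ollama` are hypothetical, and the sketch assumes the `ollama` binary is on your `PATH`.

```python
import os
import subprocess


def ollama_env(base_env=None, host="0.0.0.0", origins="*"):
    """Return a copy of the environment with Ollama's network settings applied."""
    env = dict(os.environ if base_env is None else base_env)
    env["OLLAMA_HOST"] = host        # listen on all interfaces instead of 127.0.0.1
    env["OLLAMA_ORIGINS"] = origins  # allowed request origins; "*" allows any origin
    return env


def start_ollama(env):
    """Launch `ollama serve` with the given environment (assumes ollama is installed)."""
    return subprocess.Popen(["ollama", "serve"], env=env)
```

Calling `start_ollama(ollama_env())` would start the server with both variables set. Allowing every origin is convenient on a trusted network; on anything else you would probably want to pass a narrower `origins` value.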

The deployment uses two PostgreSQL databases, which store configuration and conversation history for Open WebUI and LiteLLM respectively. Redis handles WebSocket message brokering for Open WebUI through its built-in pub/sub system: user events are published to Redis channels and every instance subscribes to them, so all connected clients receive live, synchronized updates.

The LiteLLM integration lets users access a wide range of AI models from different providers through an OpenAI-compatible API. LiteLLM is a popular package that abstracts away the differences between LLM (large language model) providers such as Azure, Anthropic, OpenAI, Cohere, and Replicate, so that calls can be made in a consistent format similar to the OpenAI API. By combining either Open WebUI (previously called Ollama WebUI) or Prompta with LiteLLM, you can set up one clean interface to talk to models from Google, Anthropic, and OpenAI, all using your own API keys.
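To illustrate what "OpenAI-compatible" means in practice, here is a rough sketch that builds a chat completion request using only the Python standard library. The base URL `http://localhost:4000/v1`, the key `sk-example`, and the model name `my-model` are placeholders, not values from the setup described here; the function names are hypothetical as well.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build a POST request in the OpenAI chat completions format."""
    payload = {
        "model": model,  # whichever model name the proxy exposes
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Because the request shape stays the same, switching providers should only require changing the `model` argument, which is the point of putting a unified interface in front of them.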


Open WebUI is an open-source, self-hosted, web-based chat interface that works with any large language model (LLM) you choose, local or cloud-based. Unlike traditional cloud-based services, it lets users run self-hosted models such as Llama 3 and more alongside popular cloud models. LiteLLM is an open-source project that acts as a universal proxy for LLMs, giving developers a consistent interface to numerous models. A Docker-based setup combining the LiteLLM proxy with Open WebUI streamlines AI development and provides substantial benefits for teams of all sizes. In this guide, I'll walk you through setting up a powerful local AI environment by combining LiteLLM (for managing multiple AI model providers) with Open WebUI (for a user-friendly interface).
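One way to picture the Docker-based wiring is the sketch below, which assembles a `docker run` command that points Open WebUI at an OpenAI-compatible endpoint such as a LiteLLM proxy. The function name, the proxy URL `http://litellm:4000/v1`, and the key are assumptions made for illustration; the guide's actual setup may differ, for example by using Docker Compose.

```python
import shlex


def open_webui_docker_args(proxy_url, proxy_key, host_port=3000):
    """Return the argv for running Open WebUI against an OpenAI-compatible proxy."""
    return [
        "docker", "run", "-d",
        "--name", "open-webui",
        "-p", f"{host_port}:8080",                 # Open WebUI serves on 8080 in the container
        "-e", f"OPENAI_API_BASE_URL={proxy_url}",  # where Open WebUI sends OpenAI-style calls
        "-e", f"OPENAI_API_KEY={proxy_key}",       # key the proxy expects (placeholder here)
        "-v", "open-webui:/app/backend/data",      # persist settings and chat history
        "ghcr.io/open-webui/open-webui:main",
    ]


def as_shell_command(args):
    """Render the argv as a single copy-pasteable shell line."""
    return shlex.join(args)
```

For example, `as_shell_command(open_webui_docker_args("http://litellm:4000/v1", "sk-example"))` yields a one-line command. The proxy URL has to be reachable from inside the container, so `localhost` will generally not work there; a shared Docker network with a service name is the usual approach.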

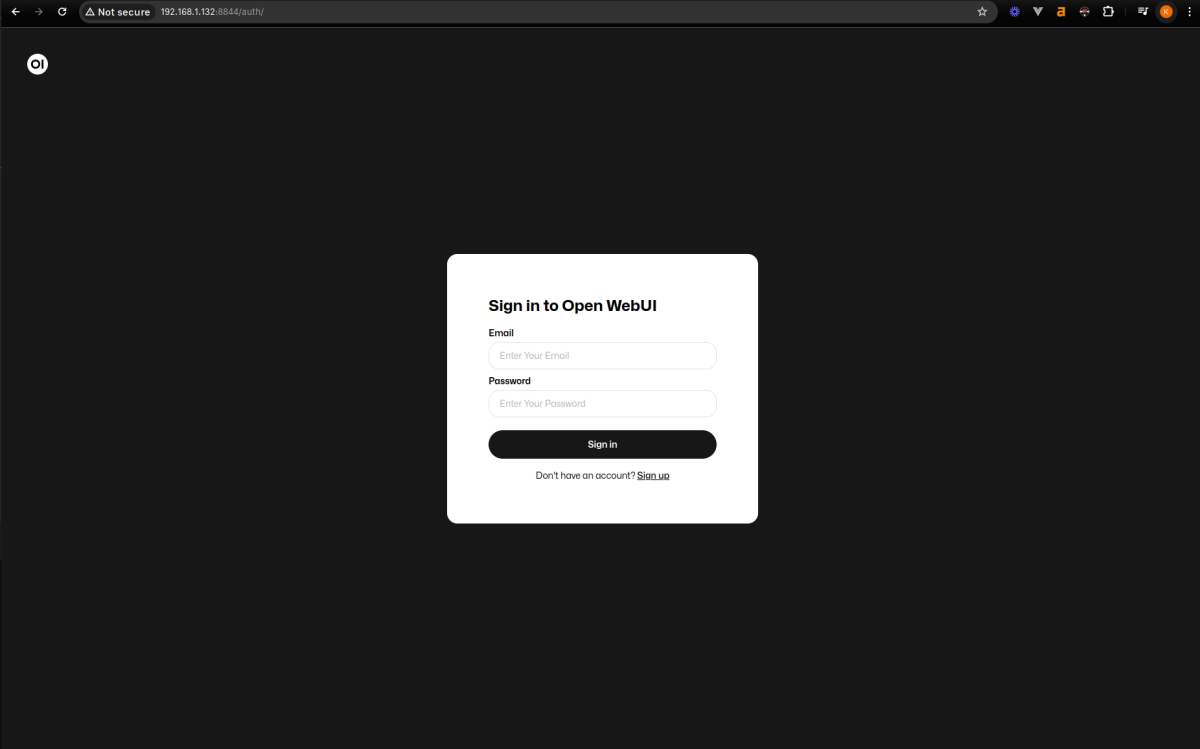

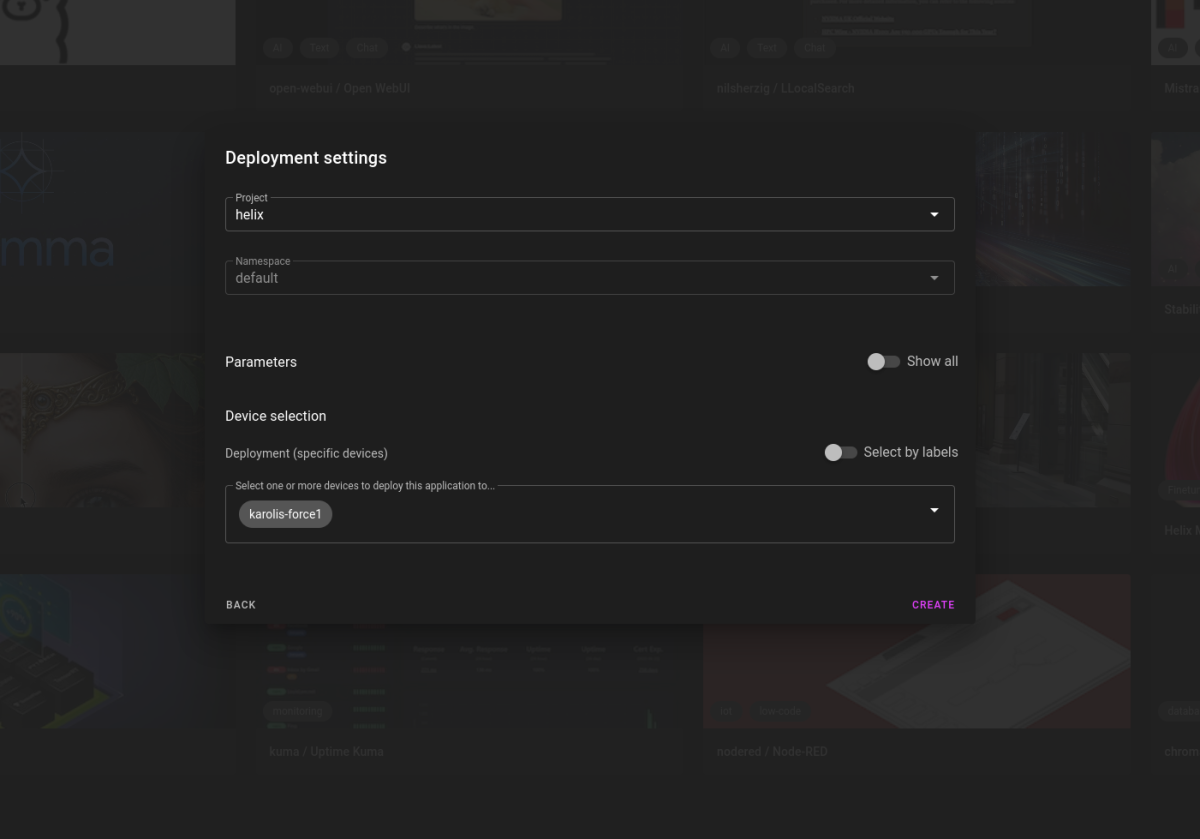


Open Source ChatGPT UI Alternative With Open WebUI