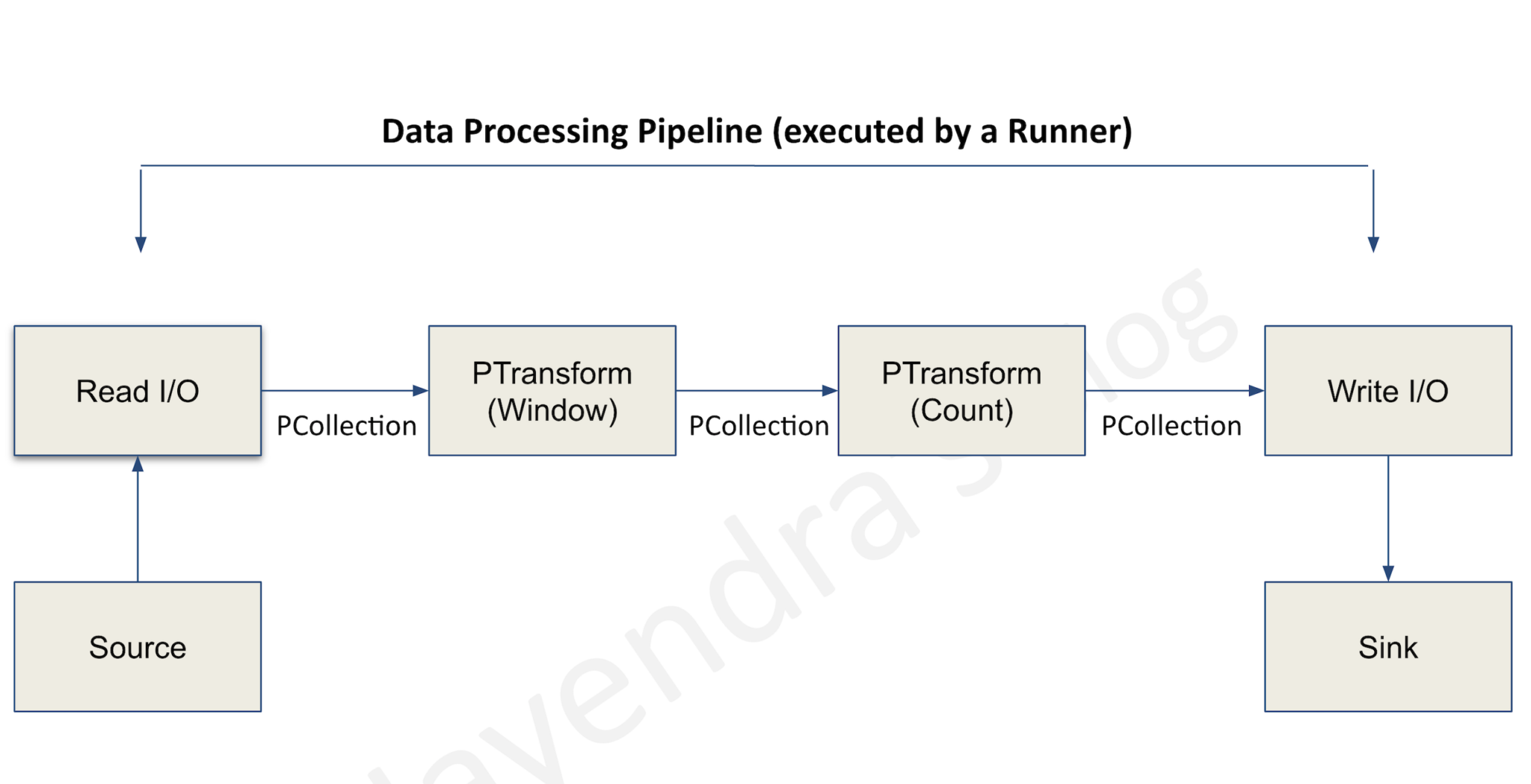

Cloud Dataflow Executed Successfully But Not Inserted Data Into

My Cloud Dataflow job executed successfully, but the data was not inserted into my BigQuery table, and I am not sure what the problem is. I ran the code below in Eclipse: `String line = ""; String csvSplitBy = ","; Integer incFlg = 0; StringReader strDr = new StringReader(c.element().toString()); br = new BufferedReader(strDr);`

The following diagram shows the Dataflow troubleshooting workflow described on this page. Dataflow provides real-time feedback about your job, and there is a basic set of steps you can use to…
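
The Eclipse fragment in the question reads each element through a `StringReader`/`BufferedReader` and splits it on commas. A minimal plain-Java sketch of that parsing step follows, with no Beam dependency; the class name `CsvLineParser` and method `parse` are hypothetical, and the input string stands in for `c.element().toString()`.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper: splits the text of one element (for example the value
 * of c.element().toString()) into comma-separated fields, one String[] per line.
 */
final class CsvLineParser {
    private static final String CSV_SPLIT_BY = ",";

    static List<String[]> parse(String element) {
        List<String[]> rows = new ArrayList<>();
        // try-with-resources closes the reader even if parsing fails
        try (BufferedReader br = new BufferedReader(new StringReader(element))) {
            String line;
            while ((line = br.readLine()) != null) {
                if (line.isEmpty()) {
                    continue; // skip blank lines instead of emitting empty rows
                }
                // limit -1 keeps trailing empty fields, e.g. "a,b," -> 3 fields
                rows.add(line.split(CSV_SPLIT_BY, -1));
            }
        } catch (IOException e) {
            // StringReader does not normally throw, but readLine() declares IOException
            throw new UncheckedIOException(e);
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String[]> rows = parse("id,name\n1,alice\n");
        for (String[] fields : rows) {
            System.out.println(String.join(" | ", fields));
        }
    }
}
```

Inside a pipeline, each `String[]` would still have to be mapped to the row type the BigQuery write expects. If this step returns no rows for the incoming elements, nothing reaches the table even though the job itself succeeds, so it is one place worth checking.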

Tips And Tricks To Get Your Cloud Dataflow Pipelines Into Production

When we execute the pipeline in ADF (Azure Data Factory) that contains the required data flow task, the pipeline executes successfully. We also see all of the data that is to be inserted in the sink's data preview in the data flow task. However, when the pipeline completes successfully, no data has been inserted into the database.

I am trying to insert data from a source table into a destination table. Both tables have the same name and the same structure; they are just in different databases. The whole process executes.

It seems to happen when you have a referenced query that still contains records that show as [List], even when this query isn't set to output to a destination. Disabling staging on the main, referenced query fixes it.

If you run into problems with your Dataflow pipeline or job, this page lists error messages that you might see and provides suggestions for how to fix each error. Errors in the log types.

Google Cloud Dataflow

Upsert is a combination of update and insert that enables the server to detect whether a record exists and apply the appropriate update or create operation in Dataverse. Learn how to get the best outcomes when you create dataflows that write their output to Dataverse.

If the CloudFormation script completed successfully but enabling failed before the infrastructure setup was complete, this might indicate that the Kubernetes cluster cannot communicate with the control plane or with other public endpoints, such as container image repositories.

Copying dataflows as part of a backup and restore environments operation isn't supported. Copying dataflows as part of a Power Platform environment copy operation doesn't preserve their email notification setting. Changing the owner of a dataflow that has a connection and a query parameter also changes the parameter value back to a previous value (if such a value has been set).

I'm currently using the 2.18.0 Beam SDK because when I tried to use 2.19.0, I received this error: "Failed to construct instance from factory method DataflowRunner#fromOptions(interface org.apache.beam.sdk.options.PipelineOptions): InvocationTargetException: No files to stage has been found."
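
The upsert behavior described in this section comes down to one decision: if a record with the given key already exists, update it; otherwise create it. The Java sketch below illustrates only that decision against an in-memory map. `UpsertStore`, its keys, and its field names are hypothetical; this is not the Dataverse API or the dataflow connector.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

/**
 * Hypothetical in-memory illustration of upsert semantics:
 * look up a record by key, update it if present, otherwise create it.
 */
final class UpsertStore {
    /** Which operation the upsert resolved to. */
    enum Outcome { CREATED, UPDATED }

    private final Map<String, Map<String, Object>> records = new HashMap<>();

    Outcome upsert(String key, Map<String, Object> fields) {
        Objects.requireNonNull(key, "key");
        Map<String, Object> existing = records.get(key);
        if (existing == null) {
            // record does not exist yet -> create operation (store a mutable copy)
            records.put(key, new HashMap<>(fields));
            return Outcome.CREATED;
        }
        // record exists -> update operation: overwrite only the supplied fields
        existing.putAll(fields);
        return Outcome.UPDATED;
    }

    Map<String, Object> get(String key) {
        return records.get(key);
    }

    public static void main(String[] args) {
        UpsertStore store = new UpsertStore();
        System.out.println(store.upsert("acct-1", Map.of("name", "Contoso")));
        System.out.println(store.upsert("acct-1", Map.of("city", "Seattle")));
        System.out.println(store.get("acct-1"));
    }
}
```

Calling `upsert` twice with the same key creates the record once and then merges the second call's fields into it, which is the "detect whether a record exists, then update or create" behavior in miniature.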

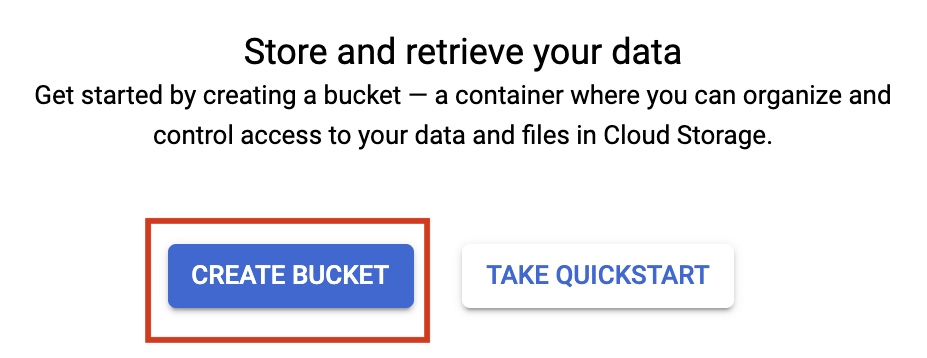

Run A Big Data Text Processing Pipeline In Cloud Dataflow Google Codelabs