WSL Localhost Docker Apps with Ruby on Rails and Vite (Deanin)

With Open WebUI you not only get an easy way to run your own local LLM on your computer (thanks to the Ollama engine), but also Open WebUI hub support, where you can find prompts, Modelfiles (to give your AI a personality) and more, all of it powered by the community.
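A Modelfile is a plain-text recipe that Ollama turns into a custom model, which is how a "personality" is attached to a base model. The sketch below is a minimal example, assuming a base model named `llama3` has already been pulled. `build_modelfile` is a hypothetical helper. `FROM`, `PARAMETER` and `SYSTEM` are Modelfile instructions.

```python
"""Generate a minimal Ollama Modelfile that gives a base model a persona."""


def build_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text built from the FROM, PARAMETER and SYSTEM instructions.

    build_modelfile is a hypothetical helper, not part of Ollama itself.
    """
    # A triple-quoted SYSTEM block cannot itself contain triple quotes.
    if '"""' in system_prompt:
        raise ValueError("system prompt must not contain triple quotes")
    lines = [
        f"FROM {base_model}",                    # base model to build on (assumed already pulled)
        f"PARAMETER temperature {temperature}",  # sampling temperature for the new model
        f'SYSTEM """{system_prompt}"""',         # system message that sets the persona
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print only; redirect the output to a file named Modelfile yourself.
    print(build_modelfile("llama3", "You are a terse assistant who answers like a pirate."), end="")
```

To use it, save the output as `Modelfile` (for example `python make_modelfile.py > Modelfile`, where the script name is hypothetical). Then run `ollama create pirate -f Modelfile` followed by `ollama run pirate`. Here `pirate` is just an illustrative model name.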

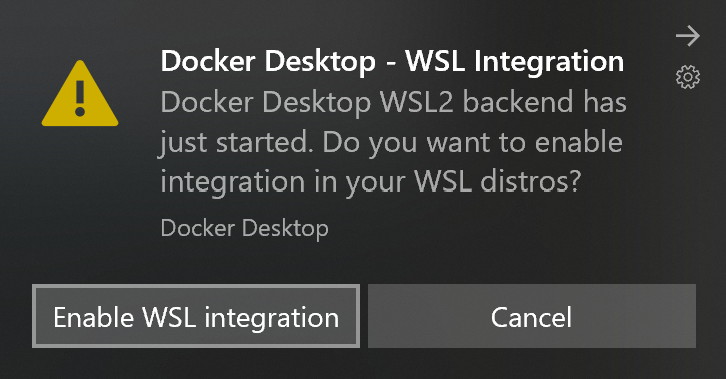

6 Tips to Improve Your WSL 2 Docker Experience on Windows

This is a comprehensive guide to installing WSL on a Windows 10/11 machine, deploying Docker, and using Ollama to run AI models locally. Ollama is a free, open-source, developer-friendly tool that makes it easy to run large language models (LLMs) locally, with no cloud and no setup headaches. With a single command (for example `ollama run llama3`), you can pull and run a model directly on your machine, with GPU acceleration where your hardware supports it. Install Docker: download and install Docker Desktop for Windows and macOS, or Docker Engine for Linux. Grab your LLM: choose your preferred model from the Ollama library (for example Llama 3, Mistral, or Gemma). Ensure WSL integration is enabled in Docker Desktop's settings (Settings > Resources > WSL integration). With these steps, you'll have a powerful environment ready for AI model experimentation and development. Enjoy exploring!
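You can also call a pulled model from your own code instead of the terminal by using the HTTP API that the Ollama server exposes. The sketch below uses only the standard library and is a minimal example. It assumes the server is listening on its default address `http://localhost:11434` and that a model such as `llama3` has been pulled. The helper names `build_generate_request` and `generate` are hypothetical. The `/api/generate` endpoint and its `model`, `prompt` and `stream` fields are part of Ollama's API.

```python
"""Minimal stdlib-only client for a local Ollama server's /api/generate endpoint."""
import json
import urllib.request

# Assumed default Ollama address; change it if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for /api/generate (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the full text reply."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server sends one JSON object; its "response" field holds the text.
    return body["response"]
```

With the server running, `generate("llama3", "Why is the sky blue?")` should return the reply as a single string. Turning streaming off trades incremental output for simpler parsing. Leave it on if you want tokens as they arrive.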

Using Docker in WSL 2

In this tutorial, we'll walk through the complete installation and configuration of three essential tools for your local AI environment: Ollama, Open WebUI, and Docker Desktop. This combination provides a powerful and flexible foundation for running AI models locally.

Once you have both Ollama and Docker set up, it's time to put your local AI assistant to work. Follow these steps. Open Docker: open the Docker application on your computer. Select the new port:

The goal of this blog is to guide you through setting up your AI development environment within Windows Subsystem for Linux (WSL). WSL lets you use Linux tools and workflows directly inside Windows, without the need for a dual-boot setup.

With just a few tools (Ollama, MCP, and LangChain), you've built a local AI agent that goes beyond chatting: it uses tools, interacts with your filesystem, and provides real utility, all offline. This project demonstrates how easy it is to:
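Open WebUI is usually started as a Docker container that publishes a host port and talks to Ollama on the host. The sketch below builds that `docker run` command with a configurable host port and prints it without running anything. The image name, volume path and flags mirror the quick-start command in Open WebUI's README as we understand it. Treat them as assumptions and check the current docs. `open_webui_command` is a hypothetical helper.

```python
"""Build (but do not run) a `docker run` command for Open WebUI with a chosen host port."""
import shlex


def open_webui_command(host_port: int = 3000, image: str = "ghcr.io/open-webui/open-webui:main") -> list[str]:
    """Return argv for starting Open WebUI (hypothetical helper).

    The container is assumed to serve on port 8080 internally, per the README quick start.
    """
    if not 1 <= host_port <= 65535:
        raise ValueError(f"invalid host port: {host_port}")
    return [
        "docker", "run", "-d",
        "-p", f"{host_port}:8080",                       # map the chosen host port to container port 8080
        "--add-host=host.docker.internal:host-gateway",  # let the container reach Ollama on the host
        "-v", "open-webui:/app/backend/data",            # named volume so chats and settings persist
        "--name", "open-webui",
        "--restart", "always",
        image,
    ]


if __name__ == "__main__":
    # Print a shell-safe command line to paste into a terminal.
    print(shlex.join(open_webui_command(3000)))
```

Once the container is up, Open WebUI should be reachable at `http://localhost:3000`, or at whichever host port you passed. If 3000 is already taken, only the host side of the `-p` mapping needs to change. The container side stays 8080.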