Estimation Theory Pdf Estimator Standard Deviation Metric

Problem 1.1. Estimate the unknown parameter θ ∈ Θ by using an observation y of the random vector Y ∈ 𝒴. The task is to construct an estimator, that is, a function T : 𝒴 → Θ. The value θ̂ = T(y), returned by the estimator when applied to the observation y of Y, is called the estimate of θ.

Abstract: the first part of the lecture notes for a graduate-level module within the course in wireless communications. A good old hardcore mathematical introduction to estimation theory.
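To make the distinction between an estimator (a function of the observation) and an estimate (the value it returns) concrete, here is a minimal Python sketch. The names `sample_mean`, `sample_std`, `apply_estimator` and the data `y_obs` are illustrative assumptions, not taken from the lecture notes; the sample standard deviation uses the common n − 1 denominator.

```python
import math
from typing import Callable, Sequence

# An estimator is any function mapping an observation y to a parameter value.
Estimator = Callable[[Sequence[float]], float]


def sample_mean(y: Sequence[float]) -> float:
    """Estimator of the mean: the arithmetic average of the observation."""
    return sum(y) / len(y)


def sample_std(y: Sequence[float]) -> float:
    """Estimator of the standard deviation (n - 1 denominator)."""
    m = sample_mean(y)
    return math.sqrt(sum((v - m) ** 2 for v in y) / (len(y) - 1))


def apply_estimator(t: Estimator, y: Sequence[float]) -> float:
    """Return the estimate, i.e. the value of the estimator t at y."""
    return t(y)


# Hypothetical observation, chosen only for illustration.
y_obs = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
theta_hat_mean = apply_estimator(sample_mean, y_obs)
theta_hat_std = apply_estimator(sample_std, y_obs)
print(theta_hat_mean, theta_hat_std)
```

The same observation yields different estimates depending on which estimator is applied, which is why the choice of the function itself is the object of study.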

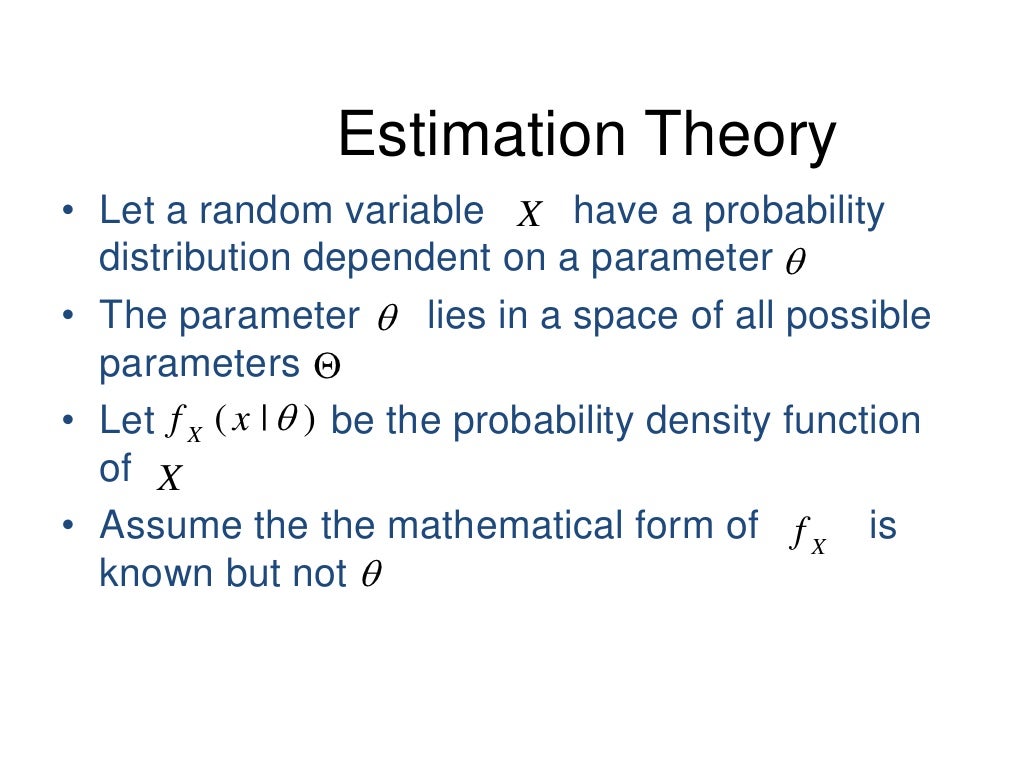

Theory Of Estimation Pdf Estimator Statistical Inference

The entire purpose of estimation theory is to arrive at an estimator, which takes the sample as input and produces an estimate of the parameters with the corresponding accuracy.

I. Introduction to estimation theory: statistical inference can be defined as the process by which conclusions are drawn about some measure or attribute of a population (e.g. mean or standard deviation) based upon analysis of sample data.

“Point estimation” refers to the decision problem we were talking about last class: we observe data x_i drawn i.i.d. from p_θ(x), and our goal is to estimate the parameter θ from the data. An “estimator” is any decision rule, that is, any function from the data space 𝒳ⁿ into the parameter space Θ, e.g. the sample mean in the Gaussian case.

Before collecting the data, the mean of the prior pdf p(a) is the best estimate (in the minimum mean-square-error sense), while after collecting the data the best estimate is the mean of the posterior pdf p(a | x).
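The move from the prior mean to the posterior mean can be sketched for one specific case. The following Python example assumes a Gaussian prior on the parameter a and observations x_i = a + noise with independent zero-mean Gaussian noise of known variance; this conjugate model, the function name `posterior_mean` and the numbers are assumptions made for illustration, not something the source specifies.

```python
from typing import Sequence


def posterior_mean(x: Sequence[float], prior_mean: float,
                   prior_var: float, noise_var: float) -> float:
    """Mean of p(a | x) for a Gaussian prior and Gaussian noise.

    Assumed model: a ~ N(prior_mean, prior_var), x_i = a + w_i,
    w_i ~ N(0, noise_var) independent of a and of each other.
    """
    n = len(x)
    # Precisions (inverse variances) add up in the Gaussian case.
    precision = 1.0 / prior_var + n / noise_var
    return (prior_mean / prior_var + sum(x) / noise_var) / precision


# Hypothetical numbers for illustration.
prior_mean, prior_var, noise_var = 0.0, 1.0, 1.0

# With no data the posterior is the prior, so the estimate is the prior mean.
before = posterior_mean([], prior_mean, prior_var, noise_var)

# With data the estimate is pulled from the prior mean towards the sample mean.
after = posterior_mean([1.0, 2.0, 3.0], prior_mean, prior_var, noise_var)
print(before, after)
```

In this sketch the estimate moves from 0.0 (prior mean) to 1.5, a value between the prior mean and the sample mean 2.0; more data or a wider prior would move it closer to the sample mean.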

Estimation 1 Pdf Estimator Estimation Theory

This paper comprises a collection of some basic concepts of statistical estimation theory obtained from various sources and presented in summary fashion; it makes no original contribution to …

Which μ would you choose for x = 1.5? For x = 3? Intuitively, it is more believable, or more likely, that when x = 1.5 we should estimate μ to be zero, and when x = 3 we should estimate μ to be one. R. A. Fisher formalized this intuition by introducing the likelihood function.

…sion and estimation theory. This introduction is based on the books of Lehmann [243, 244], the lecture notes of Künsch [229] and the book of van der Vaart [363]. This chapter presents classical statistical estimation theory, embeds estimation into a historical context, and provides important aspects and intuition for modern data science.

In statistics, we say that you have an estimator p̂ = (number of heads) / n; the approximation becomes very close when n is big. An estimator is a way of estimating an unknown parameter (p in our example) of a statistical model (the coin) given some data (the number of heads in n trials).
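The coin example can be tied to the likelihood function with a short Python sketch. It assumes that the estimator meant in the text is the relative frequency of heads and that tosses are independent with the same probability p of heads; the function names, the toss sequence and the grid search are illustrative choices, not part of the source.

```python
import math
from typing import Sequence


def estimate_p(tosses: Sequence[int]) -> float:
    """Relative frequency of heads; tosses are coded 1 = heads, 0 = tails."""
    return sum(tosses) / len(tosses)


def log_likelihood(p: float, heads: int, n: int) -> float:
    """Log-likelihood of p for `heads` heads in n independent tosses.

    The binomial coefficient is omitted because it does not depend on p.
    """
    return heads * math.log(p) + (n - heads) * math.log(1.0 - p)


# Hypothetical data: 7 heads in 10 tosses.
tosses = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
heads, n = sum(tosses), len(tosses)

p_hat = estimate_p(tosses)

# Search a grid of candidate values for the one that makes the data most likely.
grid = [k / 100 for k in range(1, 100)]
p_best = max(grid, key=lambda p: log_likelihood(p, heads, n))
print(p_hat, p_best)
```

Under these assumptions the grid value with the highest likelihood coincides with the relative frequency, which illustrates that this estimator picks the parameter value under which the observed data are most likely.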
