Build Your Own LLM Model Using OpenAI PDF

Learn how to master large language models (LLMs) by configuring, deploying, and supercharging OpenAI service models. Learn how to get started with Azure OpenAI: create your first resource and deploy your first model using the Azure CLI or the Azure portal.

How to Configure, Deploy, and Consume an LLM Model with OpenAI Service

In this post I will describe how I use Docker Compose to set up an LLM experimentation environment where I can connect tools and chat to cloud-based, OpenAI API-compatible LLMs as well as local LLMs.

Topics covered: how to set up an AI Foundry hub and project; how to deploy a large language model (LLM) in the project; key features like model fine-tuning, deployment options, agent services, and playgrounds; the difference between Azure AI Foundry and Azure OpenAI; and best practices for setting up access, storage, and security. What is Azure AI Foundry?

In this blog post, we are going to see how we can use Azure’s OpenAI API endpoints to interact with LLMs hosted in Azure. At a high level, we will go through the following steps: deploy the Azure OpenAI service in the Azure portal.

This article illustrated, step by step, how to set up and run your first local OpenAI API project using OpenAI’s state-of-the-art models like GPT-4, based on FastAPI for quick model inference through a web-based interface.
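To make the Azure OpenAI endpoint interaction mentioned above more concrete, here is a minimal sketch of how a chat request to a deployed model might be built with only the Python standard library. The resource name, deployment name, key, and the `api_version` default are placeholders and assumptions, not values from the posts quoted here; check your own resource in the Azure portal for the real ones. The helper names `build_chat_request` and `send_chat_request` are hypothetical.

```python
import json
import urllib.request


def build_chat_request(endpoint, deployment, api_key, user_message,
                       api_version="2024-02-01"):
    """Build (but do not send) a chat completions request.

    Assumptions: `endpoint` looks like https://<resource>.openai.azure.com,
    `deployment` is the name you gave the model deployment, and
    `api_version` is a version your resource supports.
    """
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    payload = {"messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        # Azure OpenAI key authentication uses the `api-key` header.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Building the request separately from sending it keeps the URL and headers easy to inspect before any network call is made. With real values, `send_chat_request(build_chat_request(endpoint, deployment, api_key, "Hello"))` would return the model's reply.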

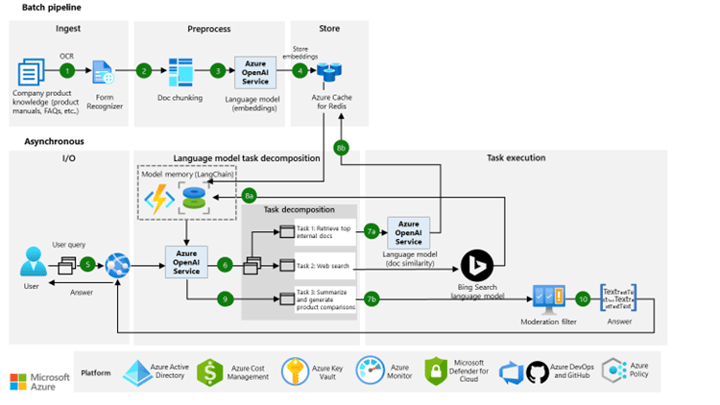

In the following sections, we will first introduce methods for starting the service, so that you can choose the appropriate one based on your application scenario.

Deploying large language models (LLMs) in production requires strategic planning, the right infrastructure, and continuous optimization. Whether you’re building a chatbot, enhancing search functionality, or deploying generative AI tools, this guide will walk you through the process to ensure a successful deployment. Let’s dive in.

In this hands-on guide, we'll take a closer look at the avenues for scaling your AI workloads from local proofs of concept to production-ready deployments, and walk you through the process of deploying models like Gemma 3 or Llama 3.1 at scale.

In this tutorial, you will learn how to build an LLM agent that runs locally using various tools. First we’ll build a basic chatbot that just echoes the user's input. Next we'll enhance it to use the OpenAI API, and finally we’ll refine it further to use an LLM running locally. We will cover the following: 1. Set up Ollama locally and test.
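The progression described in the last tutorial (echo chatbot first, then an OpenAI API backend, then a local LLM) can be sketched as one small chatbot with swappable backends. This is an illustrative outline, not the tutorial's own code: `Chatbot`, `echo_backend`, and `make_openai_compatible_backend` are hypothetical names, and the local URL and model name in the usage note assume a default Ollama install exposing its OpenAI-compatible endpoint.

```python
import json
import urllib.request


def echo_backend(messages):
    """Stage 1: reply with whatever the user said last."""
    return messages[-1]["content"]


def make_openai_compatible_backend(base_url, model, api_key="not-needed"):
    """Stages 2 and 3: return a backend that calls any server
    speaking the OpenAI chat completions protocol.

    Assumption: `base_url` already ends in the versioned prefix,
    for example https://api.openai.com/v1 or a local equivalent.
    """
    def backend(messages):
        request = urllib.request.Request(
            f"{base_url.rstrip('/')}/chat/completions",
            data=json.dumps({"model": model, "messages": messages}).encode("utf-8"),
            headers={
                "Content-Type": "application/json",
                "Authorization": f"Bearer {api_key}",
            },
            method="POST",
        )
        with urllib.request.urlopen(request) as response:
            body = json.load(response)
        return body["choices"][0]["message"]["content"]

    return backend


class Chatbot:
    """Keeps the conversation history and delegates replies to a backend."""

    def __init__(self, backend):
        self.backend = backend
        self.history = []

    def ask(self, text):
        self.history.append({"role": "user", "content": text})
        reply = self.backend(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

`Chatbot(echo_backend).ask("hello")` exercises the loop without any network access. To switch to a local model, something like `Chatbot(make_openai_compatible_backend("http://localhost:11434/v1", "llama3.1"))` should work if Ollama is running and that model has been pulled; both values are assumptions about your setup.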

OpenAI Deploy Model Microsoft Q&A