In this episode of Getting Started with Apache Beam, we cover what makes stream processing different from batch processing, how Beam uses windows to work with unbounded data, and more. Dataflow pipelines simplify the mechanics of large-scale batch and streaming data processing and can run on a number of runtimes, such as Apache Flink, Apache Spark, and Google Cloud Dataflow (a cloud service).

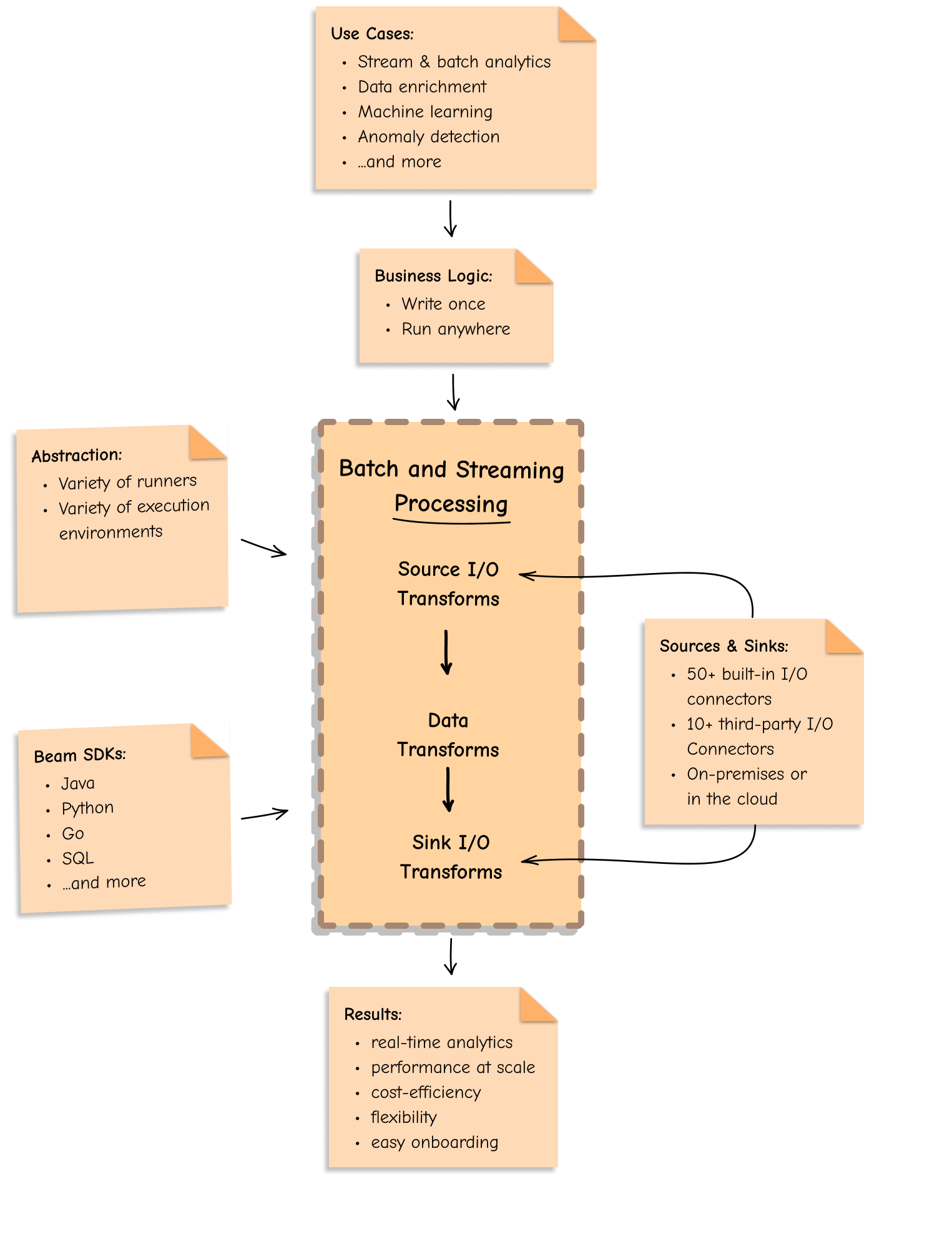

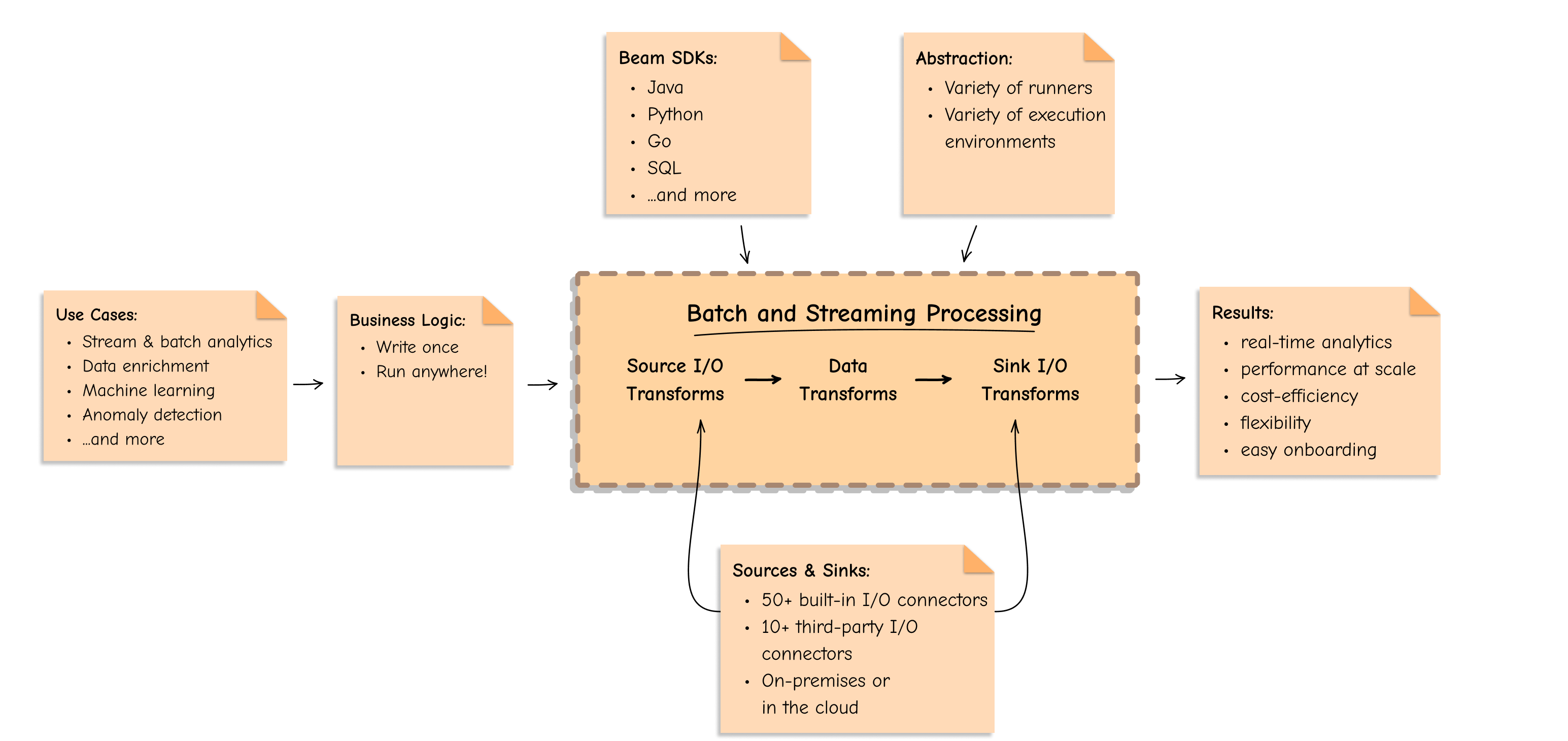

In this article, we'll delve into the world of streaming data processing using Apache Beam, and I'll guide you through the process of building a streaming ETL (extract, transform, load) pipeline. In this tutorial we will guide you through the steps of writing a Beam app as well as executing such an app on a Spark cluster. As a brief introduction, Apache Beam is a high-level data ... In this tutorial, you'll learn how to build a streaming ETL pipeline using Apache Beam and Redpanda. Through this practical example, you'll become more comfortable with Beam and develop the skills needed for your own data processing pipelines.

Apache Beam supports two types of pipelines: batch and streaming. Batch pipelines are designed to process finite datasets, such as a daily report or a monthly summary. Streaming pipelines, on the other hand, are designed to process unbounded datasets, such as a continuous stream of sensor data.
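Because an unbounded dataset never ends, an aggregation over it has to be scoped to finite slices of time, which is the role windows play. The following is a minimal plain-Python sketch of fixed (tumbling) windows, not Beam's API. The 60-second window size, the function names `window_start` and `count_per_window`, and the sample sensor readings are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

WINDOW_SIZE = 60  # seconds; hypothetical window length chosen for this sketch


def window_start(event_time: float, size: int = WINDOW_SIZE) -> int:
    """Return the start of the fixed window that contains event_time."""
    return int(event_time // size) * size


def count_per_window(
    events: Iterable[Tuple[str, float]], size: int = WINDOW_SIZE
) -> Dict[Tuple[int, str], int]:
    """Count readings per (window start, sensor id).

    `events` yields (sensor_id, event_time_seconds) pairs. Each reading is
    assigned to exactly one window based on its own timestamp.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for sensor_id, event_time in events:
        counts[(window_start(event_time, size), sensor_id)] += 1
    return dict(counts)


# Hypothetical sensor readings: (sensor_id, event time in seconds).
readings = [("a", 5), ("a", 42), ("b", 61), ("a", 75), ("b", 119), ("a", 120)]
result = count_per_window(readings)
```

This sketch consumes a finite list and returns everything at the end. A streaming engine instead has to decide when each window is complete enough to emit, which is why a windowing strategy is an explicit choice in a streaming pipeline.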

Apache Beam is an open source, unified programming model for defining both batch and streaming data-parallel processing pipelines. It lets you write a pipeline once and run it on multiple execution engines, including Apache Flink, Apache Spark, and Google Cloud Dataflow.

To modify a batch pipeline to support streaming, you must make the following code changes:

- Use an I/O connector that supports reading from an unbounded source.
- Use an I/O connector that supports writing to an unbounded sink.
- Choose a windowing strategy.