Spark Read Multiple CSV Files (Spark By Examples)

A common starting point is `spark.read.csv(filename).toDF(columns)`. There are two ways to read several CSV files in PySpark. One of them applies when all the CSV files are in the same directory and share the same schema: you can then read them at once by passing the directory path directly as the argument. Reading CSV files in PySpark means using the `spark.read.csv()` method to pull comma-separated value (CSV) files into a DataFrame, turning flat text into a structured, queryable format within Spark's distributed environment.
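A minimal sketch of the directory read described above, assuming an existing `SparkSession` is passed in as `spark`. The helper names `read_csv_directory` and `read_csv_list` are hypothetical, and the `header=True` and `inferSchema=True` settings are assumptions about the files rather than something the text specifies.

```python
def read_csv_directory(spark, directory, columns=None):
    """Read every CSV file under `directory` into one DataFrame.

    Assumes all files in the directory share the same schema.
    """
    # Passing a directory path makes Spark read all files inside it.
    df = spark.read.csv(directory, header=True, inferSchema=True)
    # Optionally rename the columns, mirroring the .toDF(columns) idiom.
    return df.toDF(*columns) if columns else df


def read_csv_list(spark, paths):
    """Read an explicit list of CSV paths into one DataFrame."""
    # DataFrameReader.csv accepts either one path or a list of paths.
    return spark.read.csv(list(paths), header=True, inferSchema=True)
```

With a real session this would be called as `read_csv_directory(SparkSession.builder.getOrCreate(), "/path/to/csv_dir")`, where the directory path is a placeholder.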

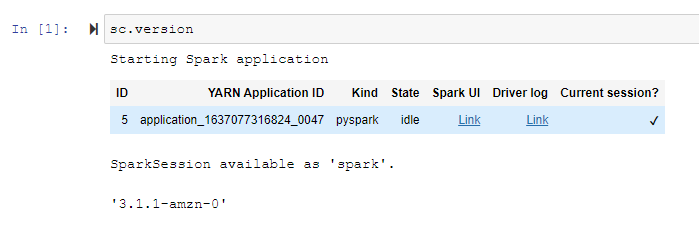

How To Read Multiple CSV Files In PySpark (Predictive Hacks)

To read a CSV file into a PySpark DataFrame, use `csv("path")` from `DataFrameReader`. This covers reading a single file, multiple files, or all files from a local directory into a DataFrame. The same approach works for multiple compressed CSV files stored in S3: the example assumes four `.gz` files, all with headers, and Spark 3.1.1. Once the files are loaded, the schema of the DataFrame can be inspected. Using patterns or a list of file paths allows you to import multiple CSV files efficiently in a single load with Apache Spark, and reading them into a single DataFrame this way also helps avoid common path errors with HDFS. This can be done with the DataFrame API, available in languages such as Python and Scala.
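The pattern-based read of compressed files can be sketched as follows, again assuming a `SparkSession` passed in as `spark`. The helper names are hypothetical, and since the original schema commands are not shown here, `printSchema()` and `dtypes` are used as the usual ways to inspect a schema rather than as the source's exact calls.

```python
def read_compressed_csvs(spark, pattern):
    """Read all files matching a glob pattern into one DataFrame.

    Spark decompresses .gz files transparently based on the extension,
    so no extra option is needed for gzip-compressed CSV files.
    """
    return spark.read.csv(pattern, header=True, inferSchema=True)


def describe_schema(df):
    """Print the schema as a tree and return it in compact form."""
    df.printSchema()   # tree-style listing of column names and types
    return df.dtypes   # compact list of (column_name, type_name) tuples
```

A call might look like `read_compressed_csvs(spark, "s3a://my-bucket/data/*.csv.gz")`. The bucket name and prefix are placeholders, and reading from S3 additionally assumes the cluster has the S3 connector and credentials configured.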

Another approach is to merge the files one at a time. First, read all the file paths into an RDD and collect the RDD keys, which hold the path names, into a list. Then loop over the list and use `union` to merge all the files into a single DataFrame: for each path `i`, read `df = spark.read.csv(i, header=True)`; if `combined_df` is `None`, set `combined_df = df`, otherwise set `combined_df = combined_df.union(df)`. Because `union` matches columns by position, the files need the same columns in the same order, which is a common source of column-mismatch errors when merging CSV files.

In Scala, Spark provides `spark.read.csv("path")` and `spark.read.format("csv").load("path")` for reading a single file, multiple files, or all files in a directory into a DataFrame. To load CSV data into an RDD instead, use the `textFile()` method of the `SparkContext` class, which can read a single CSV file, multiple CSV files (based on pattern matching), or all files from a directory into an `RDD[String]` object.
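The loop-and-union merge can be sketched as below. This is an illustration under assumptions: the function name `union_csv_files` and the `by_name` flag are hypothetical, `paths` is taken to be an already collected list of file paths, and the `unionByName(..., allowMissingColumns=True)` branch is an alternative not mentioned in the text that requires Spark 3.1 or later.

```python
def union_csv_files(spark, paths, by_name=False):
    """Read each CSV path separately and merge the results.

    Returns None when `paths` is empty.
    """
    combined_df = None
    for path in paths:
        df = spark.read.csv(path, header=True)
        if combined_df is None:
            # The first file becomes the starting DataFrame.
            combined_df = df
        elif by_name:
            # Align columns by name; columns missing on either side
            # are filled with nulls (Spark 3.1+).
            combined_df = combined_df.unionByName(df, allowMissingColumns=True)
        else:
            # Positional union: column order must match across files.
            combined_df = combined_df.union(df)
    return combined_df
```

When every file shares one schema, a single `spark.read.csv(paths, header=True)` call over the whole list is usually simpler than a loop. The loop is mainly useful when the files have to be handled individually before merging.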

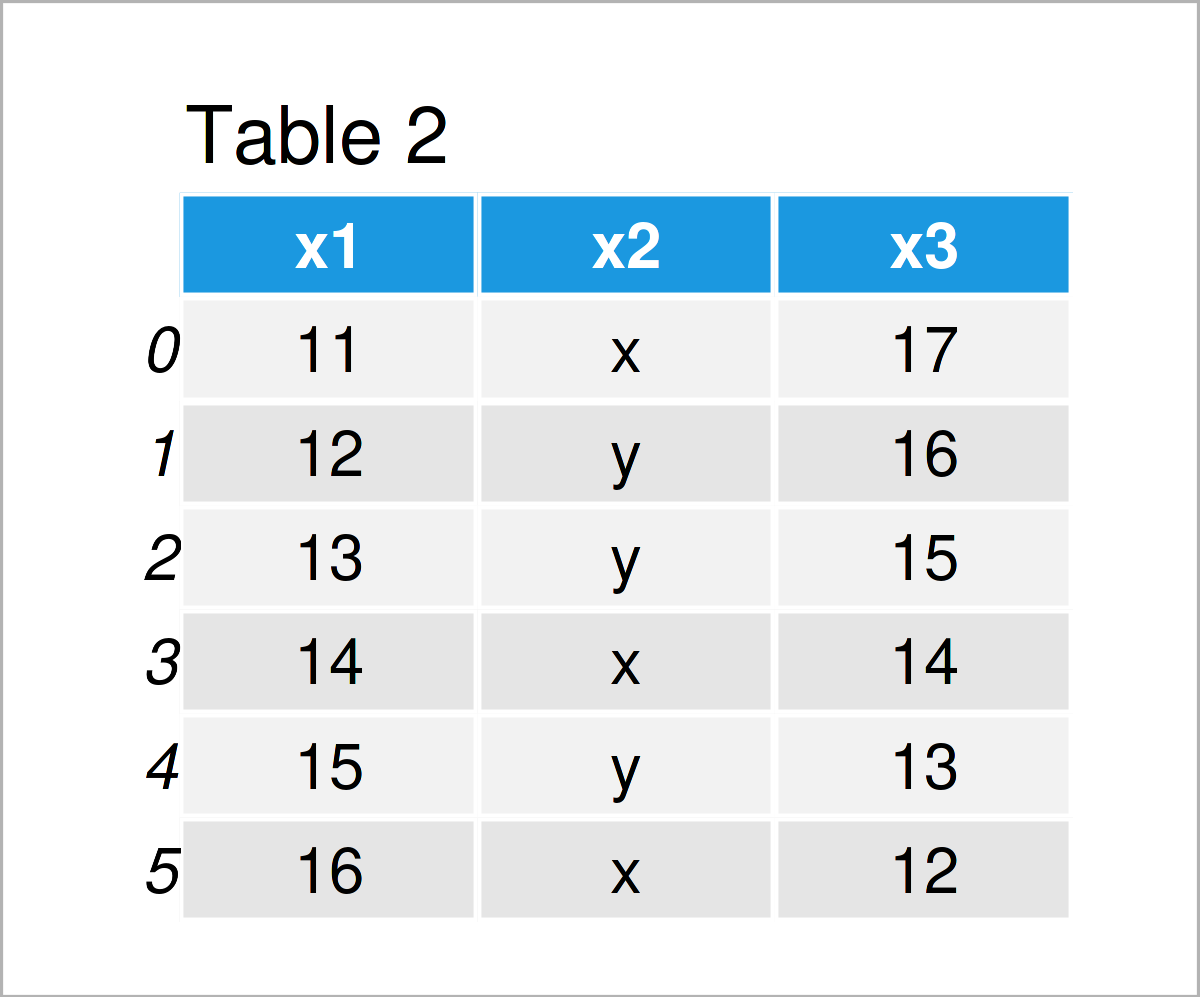
