How To Submit A BigQuery Job Using Google Cloud Dataflow Apache Beam

This document describes how to write data from Dataflow to BigQuery. For most use cases, consider using Managed I/O to write to BigQuery. Managed I/O provides features such as automatic …

Using the Apache Beam libraries, we define the pipeline steps in a Python program. First, import Beam and its PipelineOptions module. Next, set the program arguments: the runner, job name, GCP project ID, service account email, region, and the GCP temporary and staging locations.
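The program arguments listed above can be sketched as a list of flags that Beam parses into `PipelineOptions`. This is only a minimal sketch: the helper names `dataflow_args` and `build_options`, the bucket layout (`/temp`, `/staging`), and every value passed in are assumptions made for illustration, not part of the original program.

```python
def dataflow_args(project, region, job_name, bucket, service_account_email):
    """Build the command-line style flags that Beam parses into PipelineOptions."""
    return [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        f"--job_name={job_name}",
        f"--service_account_email={service_account_email}",
        # Assumed bucket layout: temp and staging folders in the same bucket.
        f"--temp_location=gs://{bucket}/temp",
        f"--staging_location=gs://{bucket}/staging",
    ]


def build_options(args):
    """Turn the flag list into a PipelineOptions object."""
    # Imported here so the flag helper above can be used without Beam installed.
    from apache_beam.options.pipeline_options import PipelineOptions

    return PipelineOptions(args)
```

Calling `build_options(dataflow_args(...))` with your own project, region, and bucket values gives an options object that can be passed to `beam.Pipeline(options=...)`.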

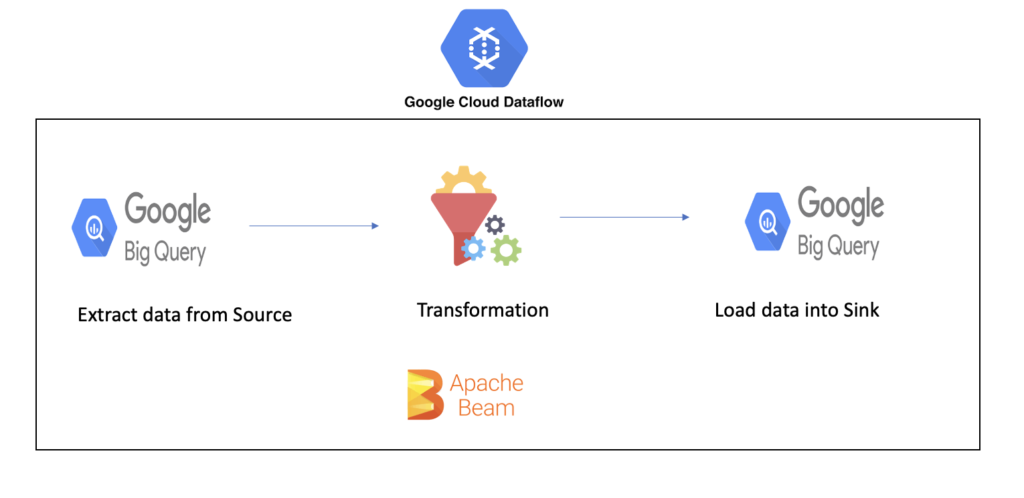

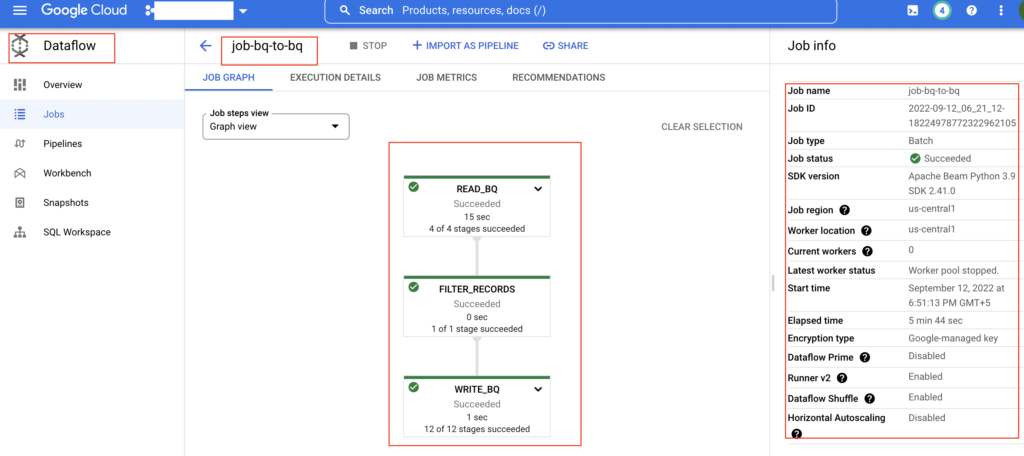

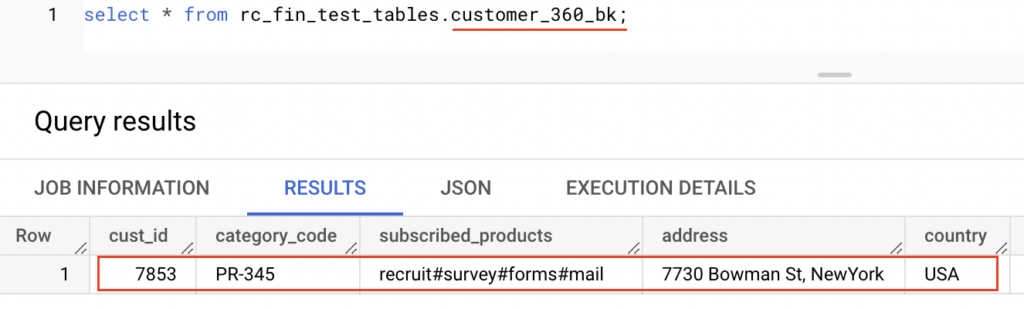

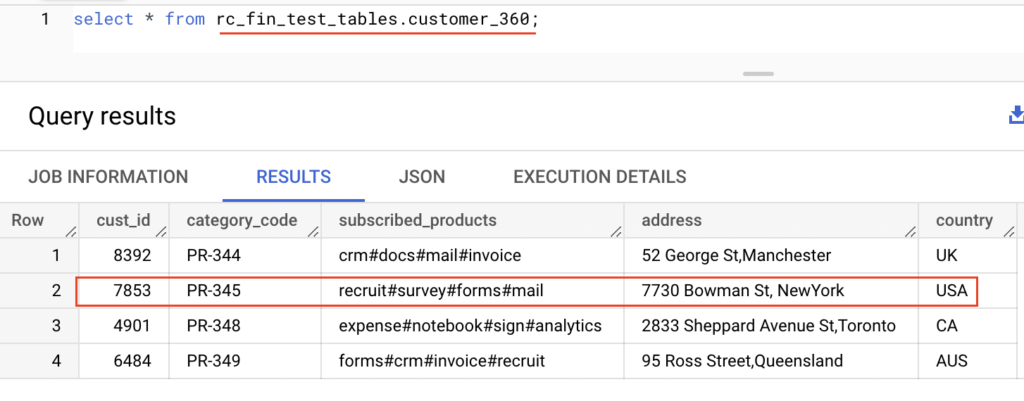

I am looking for a better approach for joining two PCollections on their keys and then loading the result into BigQuery. The code I could think of, given above, produces the result as Python tuples, which cannot be written to BigQuery.

When you run your pipeline with the Cloud Dataflow service, the runner uploads your executable code and dependencies to a Google Cloud Storage bucket and creates a Cloud Dataflow job, which executes your pipeline on managed resources in Google Cloud Platform.

In this lab, you (a) build a batch ETL pipeline in Apache Beam that takes raw data from Google Cloud Storage and writes it to BigQuery, (b) run the Apache Beam pipeline on Dataflow, and (c) parameterize the execution of the pipeline.

This project demonstrates how to build an ETL (extract, transform, load) pipeline using Google Cloud services. We will use Dataflow (powered by Apache Beam) to process data and load it into BigQuery for analysis. Through a series of Python-based pipelines, we will explore data ingestion, transformation, enrichment, and data merging.
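For the join question raised above, one possible approach is to group both PCollections with `CoGroupByKey` and then flatten each grouped tuple into dictionaries, since `WriteToBigQuery` expects one dictionary per row. The sketch below assumes hypothetical `customers` and `orders` inputs, hypothetical field names, and an inner-join semantics; it is not the original poster's code.

```python
def flatten_join(element):
    """Turn one CoGroupByKey result into zero or more BigQuery row dicts (inner join)."""
    key, grouped = element
    # Materialize the grouped values so the inner loop can iterate them repeatedly.
    customers = list(grouped["customers"])
    orders = list(grouped["orders"])
    for customer in customers:
        for order in orders:
            yield {
                "customer_id": key,
                "name": customer["name"],
                "amount": order["amount"],
            }


def run(table, options=None):
    """Join two keyed PCollections and write the rows to `table` (e.g. 'project:dataset.table')."""
    import apache_beam as beam  # deferred so flatten_join can be tested without Beam

    with beam.Pipeline(options=options) as p:
        # Hypothetical in-memory inputs; real pipelines would read from a source.
        customers = p | "Customers" >> beam.Create([("c1", {"name": "Ada"})])
        orders = p | "Orders" >> beam.Create([("c1", {"amount": 9.5})])
        (
            {"customers": customers, "orders": orders}
            | "Join" >> beam.CoGroupByKey()
            | "ToRows" >> beam.FlatMap(flatten_join)
            | "Write" >> beam.io.WriteToBigQuery(
                table,
                schema="customer_id:STRING,name:STRING,amount:FLOAT",
                create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED,
                write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,
            )
        )
```

Keys that appear in only one input produce no rows here; a left or outer join would need to emit rows with missing fields set to `None` instead.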

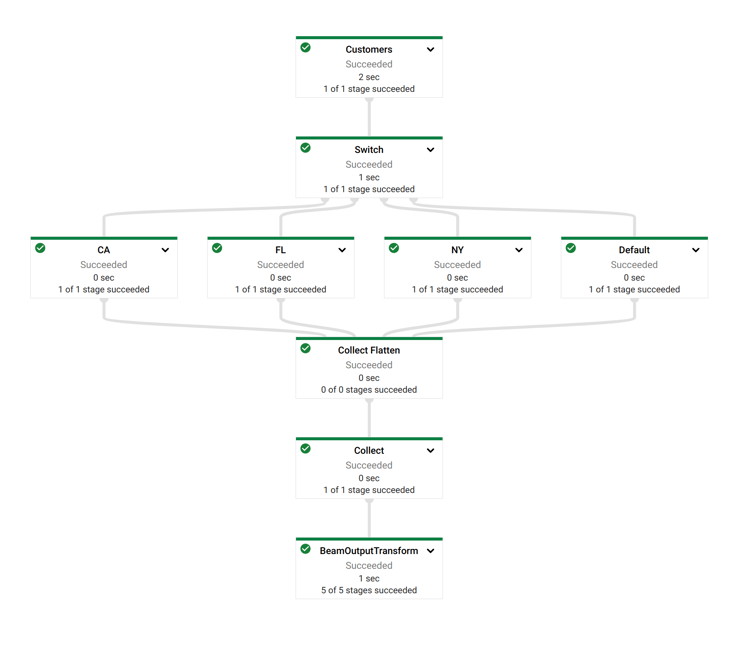
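The batch ETL lab described earlier (read raw data from Cloud Storage, write to BigQuery, parameterize the run) might look roughly like the following. This is a sketch under stated assumptions: the CSV columns in `FIELDS`, the `--input` and `--table` flag names, and the schema string are all hypothetical and not taken from the lab itself.

```python
import argparse
import csv

# Hypothetical column layout of the raw CSV files.
FIELDS = ["user_id", "country", "amount"]


def parse_line(line):
    """Convert one CSV line into a dict shaped like a BigQuery row."""
    values = next(csv.reader([line]))
    row = dict(zip(FIELDS, values))
    row["amount"] = float(row["amount"])
    return row


def parse_args(argv):
    """Split pipeline-specific flags from the flags meant for Beam and Dataflow."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--input", required=True, help="gs:// path or pattern")
    parser.add_argument("--table", required=True, help="project:dataset.table")
    return parser.parse_known_args(argv)


def run(argv=None):
    """Read CSV lines, transform them, and load them into BigQuery."""
    import apache_beam as beam  # deferred so the helpers work without Beam
    from apache_beam.options.pipeline_options import PipelineOptions

    known, beam_args = parse_args(argv)
    with beam.Pipeline(options=PipelineOptions(beam_args)) as p:
        (
            p
            | "Read" >> beam.io.ReadFromText(known.input, skip_header_lines=1)
            | "Parse" >> beam.Map(parse_line)
            | "Write" >> beam.io.WriteToBigQuery(
                known.table,
                schema="user_id:STRING,country:STRING,amount:FLOAT",
                write_disposition=beam.io.BigQueryDisposition.WRITE_TRUNCATE,
            )
        )
```

Because unknown flags are forwarded to `PipelineOptions`, the same script can run locally or on Dataflow by changing only the arguments, for example by adding `--runner=DataflowRunner` and the project and location flags.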

The following is a step-by-step guide on how to use Apache Beam running on Google Cloud Dataflow to ingest Kafka messages into BigQuery. Environment setup: let's start by installing a …

This tutorial uses the Pub/Sub Subscription to BigQuery template to create and run a Dataflow template job using the Google Cloud console or the Google Cloud CLI. The tutorial walks you …

As you can see in the picture above, we have a Dataflow job in the middle that involves three steps: it continuously reads JSON events from Pub/Sub, published by the backend of our application; it processes the JSON events in a PTransform; and it loads them into BigQuery. The destination differs based on the event type field in the JSON event.

Apache Beam Playground is an interactive environment for trying out Apache Beam transforms and examples without having to install Apache Beam in your environment. Create your pipeline: …
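The Pub/Sub to BigQuery steps described above (read JSON events, process them, route each to a destination based on its event type) could be sketched as follows. Several things here are assumptions: the field name `event_type`, the one-table-per-event-type naming scheme, the project and dataset names, and the choice to require that the destination tables already exist.

```python
import json


def parse_event(message):
    """Decode one Pub/Sub message payload (bytes) into a dict."""
    return json.loads(message.decode("utf-8"))


def table_for_event(event, project="my-project", dataset="events"):
    """Pick the destination table from the event's type (assumed field: 'event_type')."""
    return f"{project}:{dataset}.{event['event_type']}"


def run(subscription, beam_args=None):
    """Stream events from a Pub/Sub subscription into per-type BigQuery tables."""
    import apache_beam as beam  # deferred so the helpers work without Beam
    from apache_beam.options.pipeline_options import PipelineOptions, StandardOptions

    options = PipelineOptions(beam_args)
    options.view_as(StandardOptions).streaming = True

    with beam.Pipeline(options=options) as p:
        (
            p
            | "Read" >> beam.io.ReadFromPubSub(subscription=subscription)
            | "Parse" >> beam.Map(parse_event)
            | "Write" >> beam.io.WriteToBigQuery(
                # A callable here is evaluated per element to choose the table.
                table=table_for_event,
                # Assumes the destination tables were created beforehand.
                create_disposition=beam.io.BigQueryDisposition.CREATE_NEVER,
                write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,
            )
        )
```

Passing a callable as `table` keeps the routing logic in one small function; events with an unexpected type would fail at write time in this sketch, so a production pipeline would likely validate the type first.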
