Spark Write DataFrame To CSV File - Spark By Examples

Spark SQL provides spark.read().csv("file_name") to read a file or a directory of files in CSV format into a Spark DataFrame, and dataframe.write().csv("path") to write to a CSV file. In this article, I will explain how to save (write) the contents of a Spark DataFrame, Dataset, or RDD into a single file (the file format can be CSV, text, JSON, etc.) by merging the multiple part files into one file, using a Scala example.
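
The merging step can be sketched outside Spark in plain Python using only the standard library. This is a minimal sketch, not a Spark API. It assumes the part files were written with a header row enabled, so every non-empty part-*.csv starts with the same header line. The helper name merge_part_files and its has_header flag are hypothetical. Within Spark itself, calling coalesce(1) on the DataFrame before writing is a common way to end up with a single part file, at the cost of sending all the data through one task.

```python
import glob
import os


def _ensure_newline(line):
    # Guard against a part file whose last line has no trailing newline.
    return line if line.endswith("\n") else line + "\n"


def merge_part_files(output_dir, merged_path, has_header=True):
    """Merge the part-*.csv files in output_dir into one CSV file.

    Hypothetical helper: assumes every non-empty part file starts with the
    same header line when has_header is True.
    """
    # Part files are numbered (part-00000-..., part-00001-...), so sorting
    # the names keeps the partition order.
    part_files = sorted(glob.glob(os.path.join(output_dir, "part-*.csv")))
    header_written = False
    with open(merged_path, "w", encoding="utf-8", newline="") as out:
        for part in part_files:
            with open(part, encoding="utf-8") as f:
                lines = f.readlines()
            if not lines:
                continue  # an empty partition contributes nothing
            if has_header:
                if not header_written:
                    out.write(_ensure_newline(lines[0]))
                    header_written = True
                lines = lines[1:]  # drop the repeated header
            out.writelines(_ensure_newline(line) for line in lines)
    return part_files
```

For example, merge_part_files("/tmp/output", "/tmp/merged.csv") (hypothetical paths) would produce one CSV file with a single header row. Because only the first line of each part file is treated specially, quoted values that span several lines pass through unchanged, provided the header itself contains no embedded newline.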

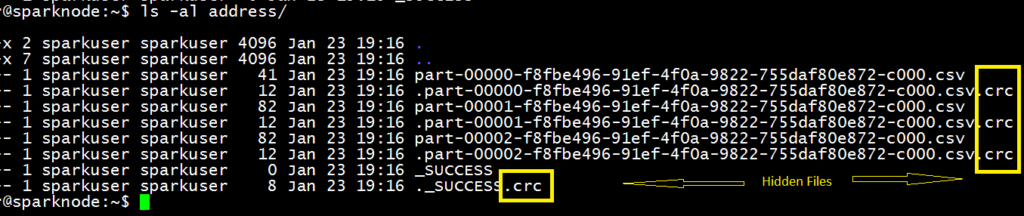

In PySpark, you can save (write/extract) a DataFrame to a CSV file on disk by using dataframeObj.write.csv("path"). The same call can also write a DataFrame to AWS S3, Azure Blob Storage, HDFS, or any other file system that PySpark supports. Since Spark 2.0.0, you can obtain a DataFrameWriter from a DataFrame (Dataset[Row]) through its write method and call the .csv method on it to write the file. The method takes a path argument, which is the name of the output folder, not a file name: Spark stores the CSV data at the specified location as one or more files named part-*.csv.
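
Because the path names a folder, any code that consumes the output outside Spark has to look inside it. Within Spark you would simply pass the folder back to spark.read.csv. Here is a minimal standard-library sketch, assuming the folder sits on a local file system and was written with a header row. It reads every part-*.csv file in name order and skips the marker files that Hadoop-based writers typically leave beside the data, such as _SUCCESS and hidden .crc checksum files. The function name read_csv_output_dir is hypothetical.

```python
import csv
import glob
import os


def read_csv_output_dir(output_dir, has_header=True):
    """Return (header, rows) gathered from every part-*.csv in output_dir.

    Hypothetical helper: output_dir is the folder that was passed to
    write.csv(...), not an individual file.
    """
    header = None
    rows = []
    # Only the part files hold data; _SUCCESS and hidden .crc checksum
    # files do not match this pattern.
    for part in sorted(glob.glob(os.path.join(output_dir, "part-*.csv"))):
        with open(part, newline="", encoding="utf-8") as f:
            reader = csv.reader(f)
            if has_header:
                file_header = next(reader, None)
                if file_header is None:
                    continue  # empty part file, no header and no rows
                if header is None:
                    header = file_header
            rows.extend(reader)
    return header, rows
```

Using csv.reader instead of splitting on commas keeps quoted fields that contain commas or newlines intact.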

Spark Write DataFrame Into a Single CSV File (Merge Multiple Part Files)
