Implementing RAG with Spring AI and Ollama Using Local AI LLM Models

In this article, learn how to use RAG without depending on external AI LLM services by running Ollama-based LLM models locally. Build a privacy-friendly AI banking chatbot using Spring AI, Ollama local LLMs, and retrieval-augmented generation (RAG). Fully self-hosted, no cloud needed.

This project demonstrates the implementation of retrieval-augmented generation (RAG) using Spring AI, Ollama, and the pgvector database. The application serves as a personal assistant that answers questions about Spring Boot by referencing the Spring Boot reference documentation PDF.

Spring AI: a Java AI development framework for the Spring ecosystem, providing a unified API for accessing large models, vector databases, and other AI infrastructure.
Ollama: a local model-running engine (similar to Docker) that supports quick deployment of open-source models.

In this tutorial, we'll build a simple RAG-powered document retrieval app using LangChain, ChromaDB, and Ollama. The app lets users upload PDFs, embed them in a vector database, and query for relevant information. All the code is available in our GitHub repository; you can clone it and start testing right away.

We will build a basic RAG app with: Note that I've added Claude.ai as part of the technology stack because I believe that if you're not using GenAI tools to help you program and sketch out ideas, you will quickly fall behind the curve.
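Since Ollama runs models locally and exposes them over HTTP, a plain Java client can talk to it without any cloud service. The following is a minimal sketch, not the code from any of the projects described here: it only builds (and does not send) a request to Ollama's `/api/generate` endpoint on the default port 11434. The class name, the helper names, and the model name `llama3` are assumptions chosen for illustration; frameworks such as Spring AI hide this step behind their own API.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class OllamaRequestSketch {
    // Default address of a locally running Ollama server.
    static final String OLLAMA_BASE_URL = "http://localhost:11434";

    // Minimal JSON string escaping; enough for this illustration only.
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Body for POST /api/generate; "stream": false asks for one complete reply.
    static String generateBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model)
                + "\",\"prompt\":\"" + jsonEscape(prompt)
                + "\",\"stream\":false}";
    }

    // Builds the HTTP request without sending it.
    static HttpRequest generateRequest(String model, String prompt) {
        return HttpRequest.newBuilder()
                .uri(URI.create(OLLAMA_BASE_URL + "/api/generate"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(generateBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) {
        // "llama3" is an assumed model name; use whichever model you have pulled.
        HttpRequest request = generateRequest("llama3", "What is Spring Boot?");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(generateBody("llama3", "What is Spring Boot?"));
    }
}
```

To actually call the model, the request could be passed to `java.net.http.HttpClient.send(...)` while an Ollama server is running; the generated text is returned in the `response` field of the JSON reply.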

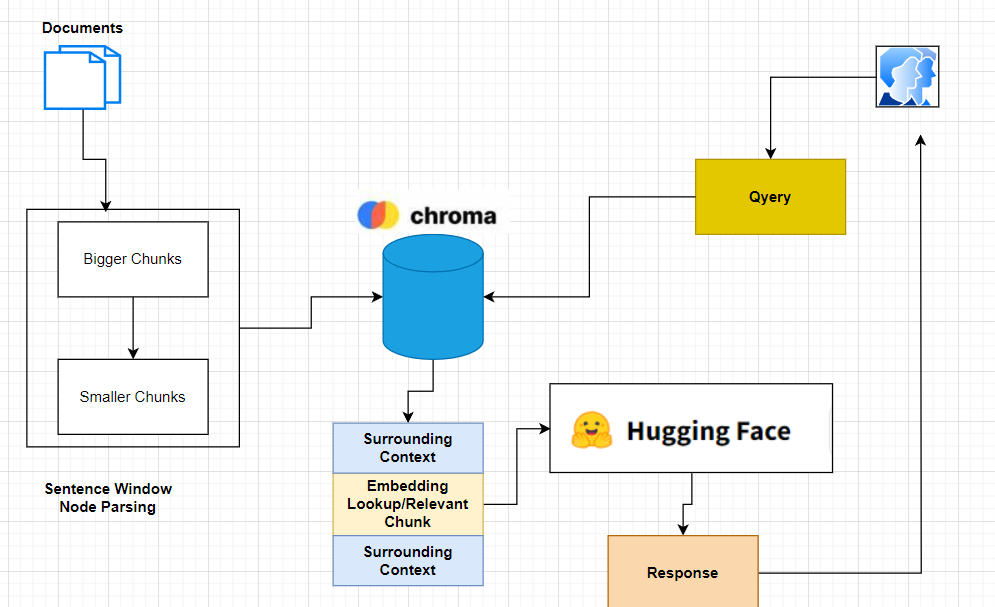

In this guide, we will learn how to develop and productionize a retrieval-augmented generation (RAG) based LLM application, with a focus on scale and evaluation. We explain what RAG is, specific use cases, and how vector search and vector databases help.

This guide will show you how to build a complete, local RAG pipeline with Ollama (for the LLM and embeddings) and LangChain (for orchestration), step by step, using a real PDF, and add a simple UI with Streamlit.

Retrieval-augmented generation (RAG) is an emerging technique in AI applications that enhances responses by retrieving relevant documents from a knowledge base before generating an answer.
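The retrieve-then-generate idea can be sketched in a few lines of plain Java. This is a toy illustration under stated assumptions, not the implementation used by Spring AI, LangChain, or any vector database: the embeddings are hand-written three-dimensional vectors rather than model output, the "knowledge base" is an in-memory list, and the names `RagRetrievalSketch`, `Chunk`, `retrieve`, and `buildPrompt` are hypothetical. It ranks stored chunks by cosine similarity to the query vector and places the best matches into the prompt that would be sent to the LLM.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class RagRetrievalSketch {
    // A piece of document text together with its embedding vector.
    record Chunk(String text, double[] embedding) {}

    // Cosine similarity between two vectors of equal length.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Retrieval step: return the topK chunks most similar to the query.
    static List<Chunk> retrieve(double[] query, List<Chunk> store, int topK) {
        List<Chunk> sorted = new ArrayList<>(store);
        sorted.sort(Comparator
                .comparingDouble((Chunk c) -> cosine(query, c.embedding()))
                .reversed());
        return sorted.subList(0, Math.min(topK, sorted.size()));
    }

    // Augmentation step: put the retrieved text in front of the question.
    static String buildPrompt(String question, List<Chunk> context) {
        StringBuilder sb = new StringBuilder("Answer using only the context below.\n\nContext:\n");
        for (Chunk c : context) {
            sb.append("- ").append(c.text()).append('\n');
        }
        return sb.append("\nQuestion: ").append(question).toString();
    }

    public static void main(String[] args) {
        // Toy knowledge base with made-up embeddings.
        List<Chunk> store = List.of(
                new Chunk("Spring Boot auto-configures beans.", new double[] {1, 0, 0}),
                new Chunk("Ollama runs models locally.", new double[] {0, 1, 0}),
                new Chunk("pgvector stores embeddings in PostgreSQL.", new double[] {0, 0, 1}));
        double[] queryEmbedding = {0.9, 0.1, 0}; // pretend embedding of the question
        List<Chunk> hits = retrieve(queryEmbedding, store, 2);
        System.out.println(buildPrompt("How does Spring Boot configure beans?", hits));
    }
}
```

In a real pipeline the embedding vectors would come from an embedding model and the similarity search would be delegated to the vector database; the generation step then sends the assembled prompt to the LLM.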
