Interpretability Of Deep Neural Networks With Sparse Autoencoders Ppt

In mathematical logic, interpretability is a relation between two formal theories, T and S, that expresses the possibility of interpreting or translating one into the other.

AI interpretability helps people better understand and explain the decision-making processes that power artificial intelligence (AI) models. AI models use a complex web of data inputs, algorithms, logic, data science, and other processes to return insights.

Anthropic is doubling down on interpretability, and we have a goal of getting to “interpretability can reliably detect most model problems” by 2027. We are also investing in interpretability startups.

Interpretability takes many forms and can be difficult to define; we first explore general frameworks and sets of definitions in which model interpretability can be evaluated and compared (Lipton 2016; Doshi-Velez & Kim 2017).

Explainability refers to the ability of a model to provide clear and understandable explanations for its predictions or decisions. Interpretability, on the other hand, focuses on the ability to understand and make sense of how a model works and why it makes certain predictions.

Models are interpretable when humans can readily understand the reasoning behind the predictions and decisions made by the model. The more interpretable a model is, the easier it is for someone to comprehend and trust it.

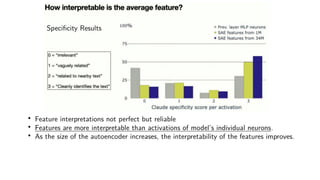

What is model interpretability? Model interpretability refers to the ability to understand and explain how a machine learning or deep learning model makes its predictions or decisions.

Interpretation is something one does to an explanation with the aim of producing another, more understandable, explanation. As with explanation, there are various concepts and methods involved in interpretation: total or partial, global or local, and approximative or isomorphic.

Interpretability is the ability to understand the overall consequences of the model and to ensure that what we predict is accurate knowledge aligned with our initial research goal.

Interpretability is often used interchangeably with explainability: it is the ability to explain or provide meaning to model predictions. In particular, the goal of interpretability is to describe the structure of a model in a way that humans can easily understand.
