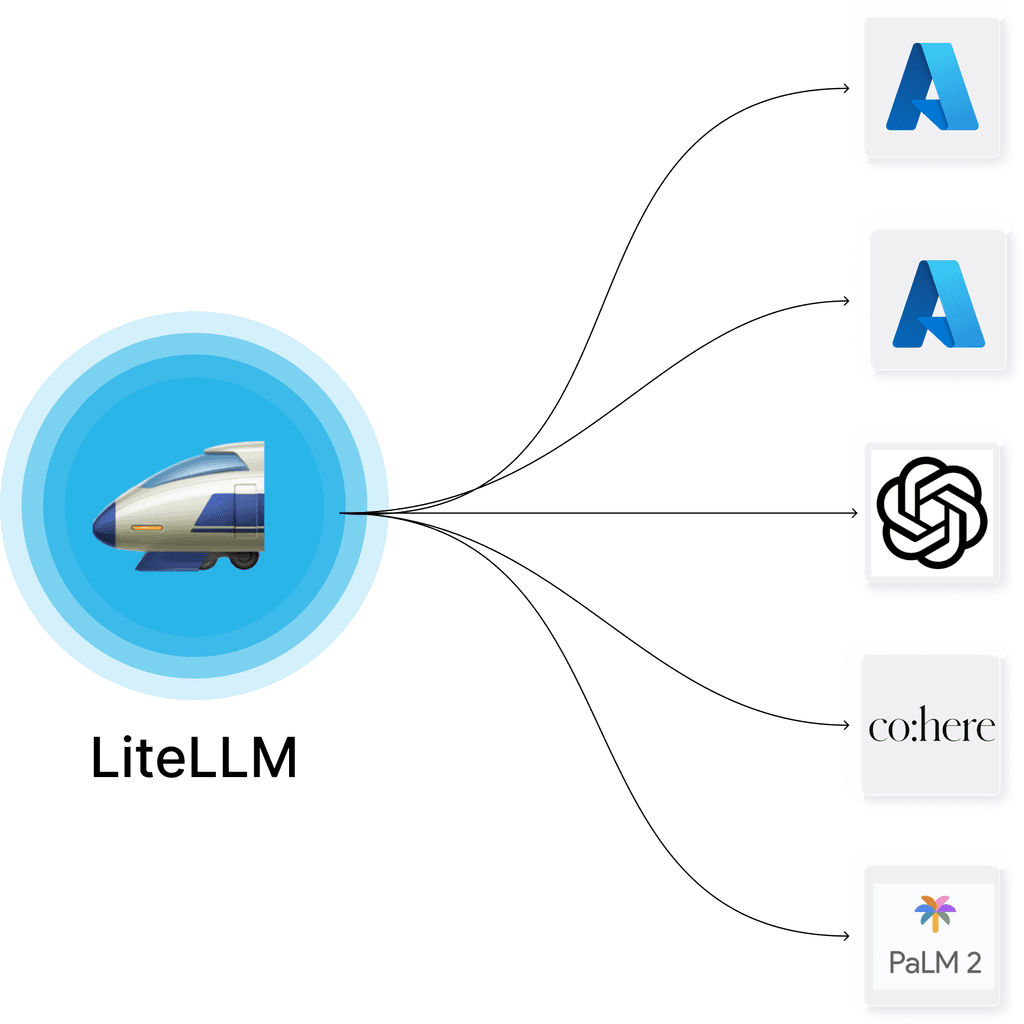

LiteLLM maps exceptions across all supported providers to the OpenAI exceptions. All of its exceptions inherit from OpenAI's exception types, so any error handling you already have for OpenAI should work out of the box with LiteLLM.

LiteLLM (BerriAI/litellm) is a Python SDK and proxy server (LLM gateway) for calling 100+ LLM APIs in the OpenAI format: Bedrock, Azure, OpenAI, VertexAI, Cohere, Anthropic, SageMaker, HuggingFace, Replicate, Groq.

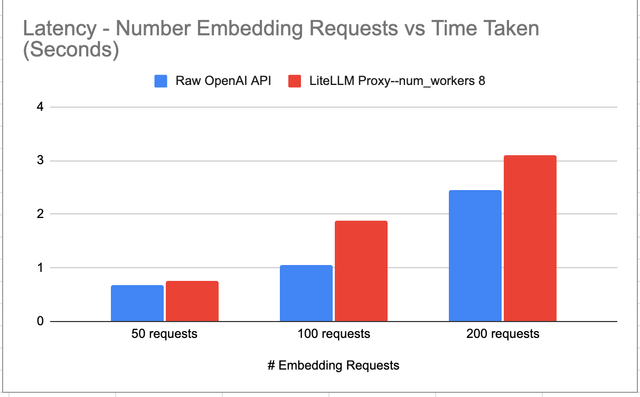

LiteLLM streamlines the complexity of managing multiple LLMs. As one team puts it: "The LiteLLM proxy has streamlined our management of LLMs by standardizing logging, the OpenAI API, and authentication for all models, significantly reducing operational complexities. This enables us to quickly adapt to changing demands and swiftly adopt new models."

LiteLLM supports streaming the model response back: pass `stream=True` to get a streaming iterator in response. Streaming is supported for all models (Bedrock, HuggingFace, TogetherAI, Azure, OpenAI, etc.).

Deploying LiteLLM, an open-source LLM gateway, on embedded Linux makes it possible to run lightweight AI models in resource-constrained environments. Acting as a flexible proxy server, LiteLLM provides a unified API that accepts OpenAI-style requests, so you can interact with local or remote models through a consistent interface.

LiteLLM is an open-source toolkit that standardizes how developers interact with large language models (LLMs). Think of it as a universal translator for LLMs: it lets your application communicate with any supported language model through a single, consistent interface. 🌐

Yong Sheng Tan, July 8, 2025: In this guide, I'll show you how to build a high-performance, self-hosted AI chat system by integrating LiteLLM with OpenWebUI, providing a robust and private alternative to commercial chatbot solutions. Previously, I published a comprehensive walkthrough titled "Running LiteLLM and OpenWebUI on Windows Localhost", which also featured RAG support using…

LiteLLM supports Deepgram's listen endpoint. LiteLLM supports all IBM watsonx.ai foundation models and embeddings. ElevenLabs provides high-quality AI voice technology, including speech-to-text capabilities through its transcription API. Anthropic, OpenAI, Mistral, Llama, and Gemini LLMs are supported on Clarifai.

Build a multi-provider chat app using LiteLLM and Streamlit to connect various AI models such as ChatGPT, Claude, and Gemini. The app offers seamless conversation management and enhanced privacy through local models served by Ollama. Future enhancements include RAG capabilities and file upload support.

LiteLLM files: a lightweight package to simplify LLM API calls. This is an exact mirror of the LiteLLM project, hosted at GitHub (BerriAI/litellm). SourceForge is not affiliated with LiteLLM. For more information, see the SourceForge Open Source Mirror Directory.
