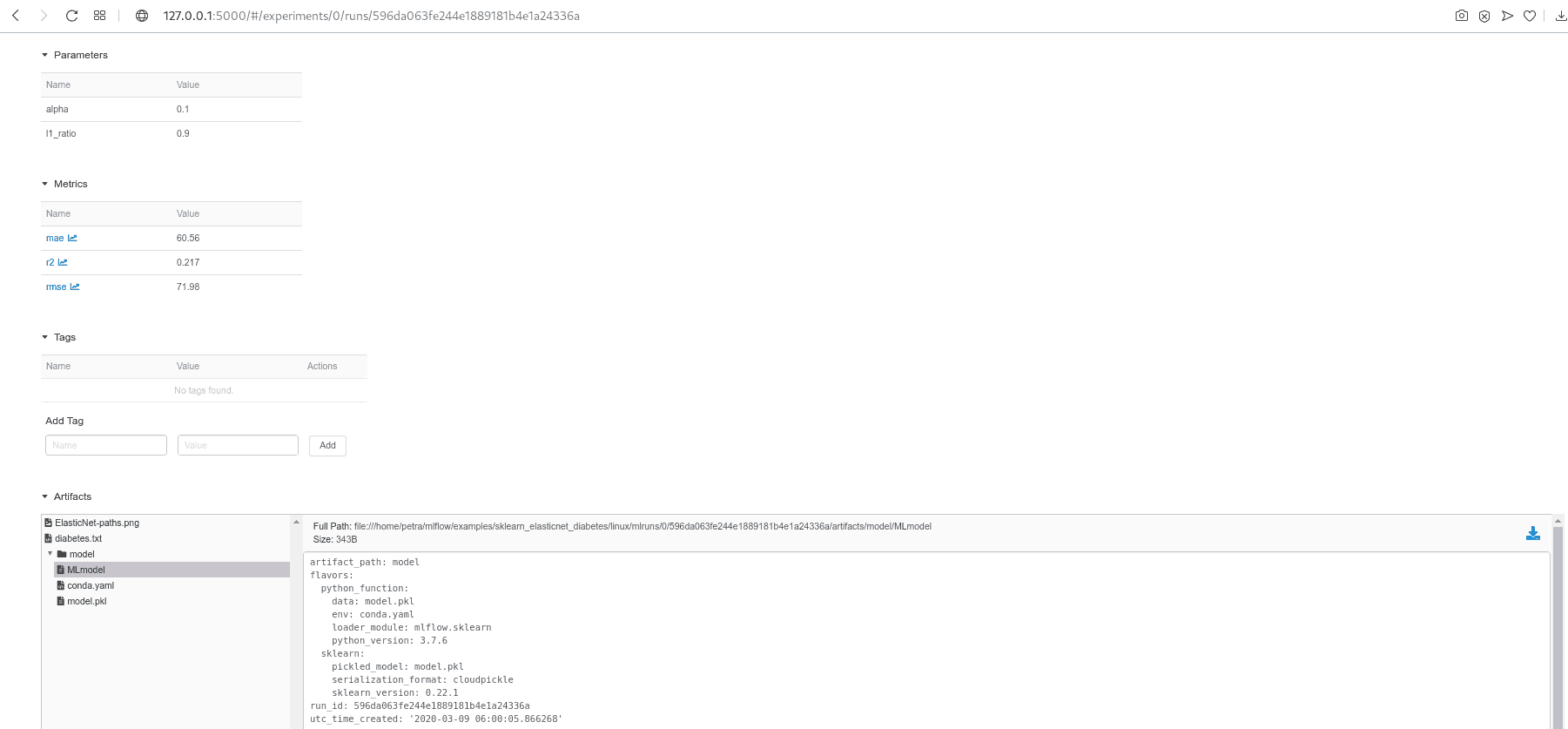

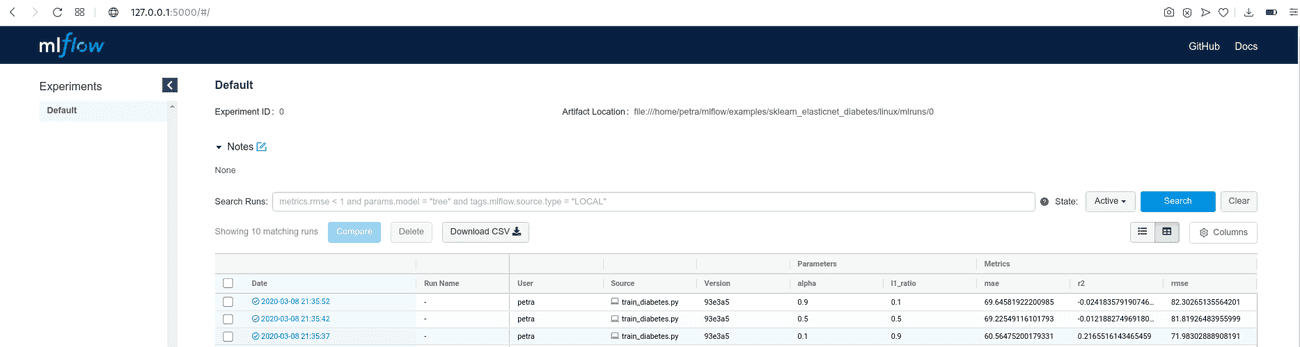

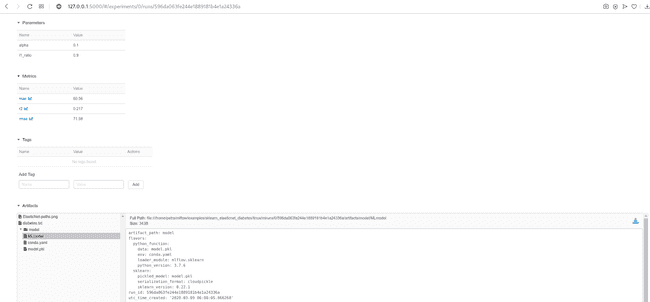

MLflow Tutorial: An Open Source Machine Learning (ML) Platform (Adaltas)

With the MLflow client (`MlflowClient`) you can easily get all or selected params and metrics using `get_run(id).data`: create an instance of the `MlflowClient`, connected to the tracking server URL.

I would like to update previous runs done with MLflow, i.e. changing or updating a parameter value to accommodate a change in the implementation. Typical use cases: log runs using a parameter a, and ...
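The two snippets above (reading a run's data, and revising a value on an earlier run) can be sketched roughly as follows. This is a minimal sketch, not the original answers' code: the helper names `make_client`, `fetch_run_data` and `annotate_run` are hypothetical, as is the `updated.<key>` tag convention. It assumes that a param logged to a run cannot be overwritten with a different value, so the revised value is recorded as a tag and the original param is left untouched.

```python
def make_client(tracking_uri):
    """Create an MlflowClient connected to the tracking server URL."""
    # Imported lazily so the helpers below can be exercised without MLflow.
    from mlflow.tracking import MlflowClient
    return MlflowClient(tracking_uri=tracking_uri)


def fetch_run_data(client, run_id):
    """Return (params, metrics, tags) of one run as plain dicts."""
    data = client.get_run(run_id).data
    return dict(data.params), dict(data.metrics), dict(data.tags)


def annotate_run(client, run_id, key, new_value):
    """Record a revised parameter value on an existing run as a tag.

    Assumption: the tag name 'updated.<key>' is an illustrative
    convention only; MLflow does not define it.
    """
    client.set_tag(run_id, f"updated.{key}", str(new_value))
```

A typical call sequence would be `client = make_client("http://localhost:5000")` followed by `params, metrics, tags = fetch_run_data(client, run_id)`, where both the URL and `run_id` are placeholders for your own setup.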

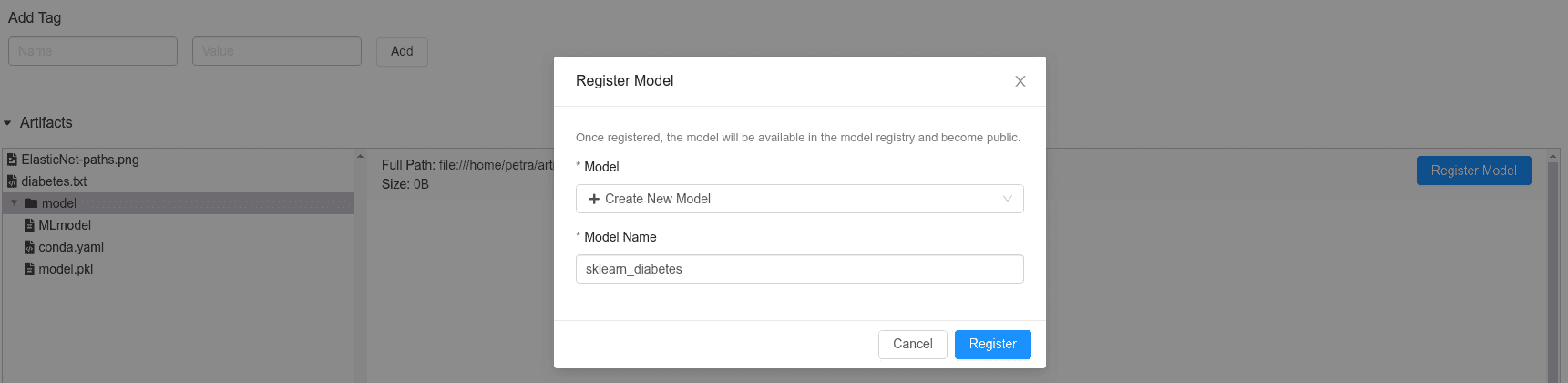

Timeouts like yours are not a matter of MLflow alone; they also depend on the server configuration. For instance, users have reported problems when uploading large models to Google Cloud Storage.

The `artifact_path` parameter of the `mlflow.pyfunc.log_model` function is defined as: `:param artifact_path: The run-relative artifact path to which to log the Python model.` That means it is just a name that identifies the model in the context of that run, and hence it cannot be an absolute path like the one you passed in. Try something short like `add5_model`. `reg_model_name = "ml flow addn test ...`

It seems that if I execute it in the path where `mlruns` exists, this message pops up when accessing the MLflow entrypoint URL. However, executing `mlflow ui` in the parent folder first (which creates an empty `mlruns`), then going back to the `mlruns` folder where the experiments get created ...

I want to use MLflow to track the development of a TensorFlow model. How do I log the loss at each epoch? I have written the following code: `mlflow.set_tracking_uri(tracking_uri)` `mlflow.set_experi` ...
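For the per-epoch loss question, one possible approach is to call `mlflow.log_metric` once per epoch and pass the epoch index as `step`. The sketch below is not the asker's code: `log_epoch_losses` is a hypothetical helper, and it assumes the losses are already available as a plain list (for a Keras model, `history.history["loss"]` returned by `model.fit` would be one such list).

```python
def log_epoch_losses(losses, log_metric=None, key="loss"):
    """Log one metric value per epoch, using the epoch index as the step.

    `log_metric` defaults to `mlflow.log_metric`; it is injectable so the
    helper can be tried out without a tracking server.
    """
    if log_metric is None:
        import mlflow  # lazy import: only needed for real logging
        log_metric = mlflow.log_metric
    for epoch, loss in enumerate(losses):
        # The step argument lets the MLflow UI plot the value over epochs.
        log_metric(key, float(loss), step=epoch)
    return len(losses)
```

With real tracking, the call would sit inside a `with mlflow.start_run():` block, after the tracking URI and experiment have been set.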

I am trying to see if MLflow is the right place to store my metrics in the model tracking. According to the docs, `log_metric` takes either a key and value or a dict of key-value pairs. I am wondering how to log ...

I am running an ML pipeline, at the end of which I am logging certain information using MLflow. I was mostly going through Databricks' official MLflow tracking tutorial. `import mlflow` `import mlflow.` ...

I am using `mlflow server` to set up an MLflow tracking server. `mlflow server` has 2 command options that accept an artifact URI, `--default-artifact-root` ...
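On the metrics question: `mlflow.log_metric` takes a single key and value, while `mlflow.log_metrics` takes a dict of them. The question is cut off in the source, so what exactly needs logging is unknown. The sketch below assumes the results arrive as a nested dict of numbers and flattens them into dotted keys before one `log_metrics` call. `flatten_metrics` and `log_nested_metrics` are hypothetical helper names.

```python
def flatten_metrics(nested, prefix="", sep="."):
    """Flatten a nested dict of numbers into {'a.b': 1.0, ...}."""
    flat = {}
    for name, value in nested.items():
        full = f"{prefix}{sep}{name}" if prefix else name
        if isinstance(value, dict):
            flat.update(flatten_metrics(value, full, sep))
        else:
            flat[full] = float(value)  # MLflow metrics are numeric
    return flat


def log_nested_metrics(nested, step=None, log_metrics=None):
    """Flatten `nested` and log it with a single log_metrics call."""
    if log_metrics is None:
        import mlflow  # lazy import: only needed for real logging
        log_metrics = mlflow.log_metrics
    flat = flatten_metrics(nested)
    log_metrics(flat, step=step)
    return flat
```

For example, `log_nested_metrics({"val": {"acc": 0.9}})` inside an active run would log a single metric named `val.acc`.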

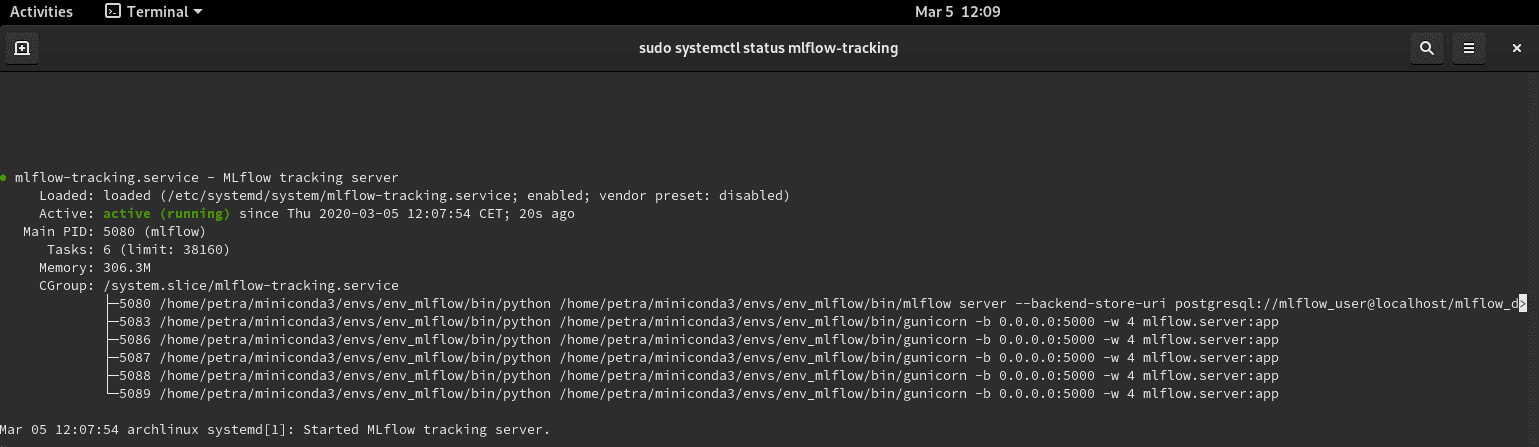
