Monitoring Azure LLM Performance (Restackio)

To monitor LLM performance in Azure environments, start with Azure Monitor. The service provides a suite of tools for tracking and analyzing performance metrics, which helps keep your LLM applications efficient and reliable. Integrating LLMonitor with Azure LLM models adds performance monitoring and analytics on top of that. This setup improves your understanding of model behavior and helps you optimize the overall performance of your AI applications.

Azure Machine Learning model monitoring for generative AI applications makes it easier to monitor your LLM applications in production for safety and quality on a regular cadence, so that they deliver maximum business impact. Monitoring ultimately helps maintain the quality and safety of your generative AI applications.

Techniques for monitoring performance vary slightly depending on the setup of the LLM powering the chatbot. However, the core goal remains the same: ensuring high end-user satisfaction by delivering a low-latency solution and collecting production input and output data to drive future improvement.

Monitor performance: once configured, begin monitoring the performance of your LLMs. Use the dashboard provided by your observability tool to visualize metrics and logs.
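
The two goals named above, low latency and collection of production inputs and outputs, can be illustrated with a minimal sketch. It assumes the model is reachable through a plain Python callable; `LatencyMonitor`, `CallRecord`, and `fake_llm` are hypothetical names introduced here for illustration and are not part of any Azure SDK. A real deployment would send these records to an observability backend instead of keeping them in memory.

```python
import math
import statistics
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallRecord:
    """One production call: what went in, what came out, how long it took."""
    prompt: str
    response: str
    latency_s: float


@dataclass
class LatencyMonitor:
    """Hypothetical in-memory monitor; not an Azure API."""
    records: List[CallRecord] = field(default_factory=list)

    def call(self, llm: Callable[[str], str], prompt: str) -> str:
        # Time the call with a monotonic clock and keep the input/output pair.
        start = time.perf_counter()
        response = llm(prompt)
        elapsed = time.perf_counter() - start
        self.records.append(CallRecord(prompt, response, elapsed))
        return response

    def summary(self) -> dict:
        latencies = sorted(r.latency_s for r in self.records)
        if not latencies:
            return {"count": 0}
        # Nearest-rank 95th percentile over the recorded latencies.
        rank = max(1, math.ceil(0.95 * len(latencies)))
        return {
            "count": len(latencies),
            "mean_s": statistics.mean(latencies),
            "p95_s": latencies[rank - 1],
            "max_s": latencies[-1],
        }


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption for the example)."""
    return prompt.upper()


if __name__ == "__main__":
    monitor = LatencyMonitor()
    monitor.call(fake_llm, "hello")
    print(monitor.summary())
```

Tracking a tail percentile alongside the mean is a common choice because a small number of slow responses can hurt user satisfaction without moving the average much.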

Integrating LlamaIndex with Azure LLM observability tools enhances your ability to monitor and evaluate LLM applications. By configuring a variable once, you can use Azure's monitoring capabilities to track performance metrics and gain deeper insight into your application's behavior.

Monitoring AI and LLM-based applications ensures reliability, performance optimization, and proactive issue resolution. By integrating OpenTelemetry, Azure Monitor, and Semantic Kernel, ...

In this article, we will talk about how to monitor requests made to an Azure OpenAI endpoint. This involves using Azure API Management and Azure Application Insights, and deciding which dimensions to monitor.

Monitoring is a critical aspect of deploying Azure AI services, particularly for large language models (LLMs). It ensures that the system operates efficiently and meets user expectations.
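
Deciding which dimensions to monitor for requests to an Azure OpenAI endpoint can be made concrete with a small aggregation sketch. The source does not list the dimensions, so the ones used here (deployment name, HTTP status code, token count, duration) are assumptions chosen for illustration, and `RequestLog` and `aggregate` are hypothetical names, not part of Azure API Management or Application Insights.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class RequestLog:
    """One logged request; the field names are assumed, not an Azure schema."""
    deployment: str
    status_code: int
    total_tokens: int
    duration_ms: float


def aggregate(logs: Iterable[RequestLog]) -> Dict[Tuple[str, int], dict]:
    """Group requests by (deployment, status_code) and summarize each group."""
    buckets = defaultdict(
        lambda: {"requests": 0, "total_tokens": 0, "duration_ms_sum": 0.0}
    )
    for log in logs:
        bucket = buckets[(log.deployment, log.status_code)]
        bucket["requests"] += 1
        bucket["total_tokens"] += log.total_tokens
        bucket["duration_ms_sum"] += log.duration_ms

    # Convert the running sums into per-group averages for reporting.
    return {
        key: {
            "requests": b["requests"],
            "total_tokens": b["total_tokens"],
            "avg_duration_ms": b["duration_ms_sum"] / b["requests"],
        }
        for key, b in buckets.items()
    }


if __name__ == "__main__":
    sample = [
        RequestLog("chat-prod", 200, 120, 100.0),
        RequestLog("chat-prod", 200, 80, 300.0),
        RequestLog("chat-prod", 429, 0, 5.0),
    ]
    for key, stats in aggregate(sample).items():
        print(key, stats)
```

Grouping by status code separates throttled or failed requests from successful ones, so that rejected calls do not distort the latency and token figures for normal traffic.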

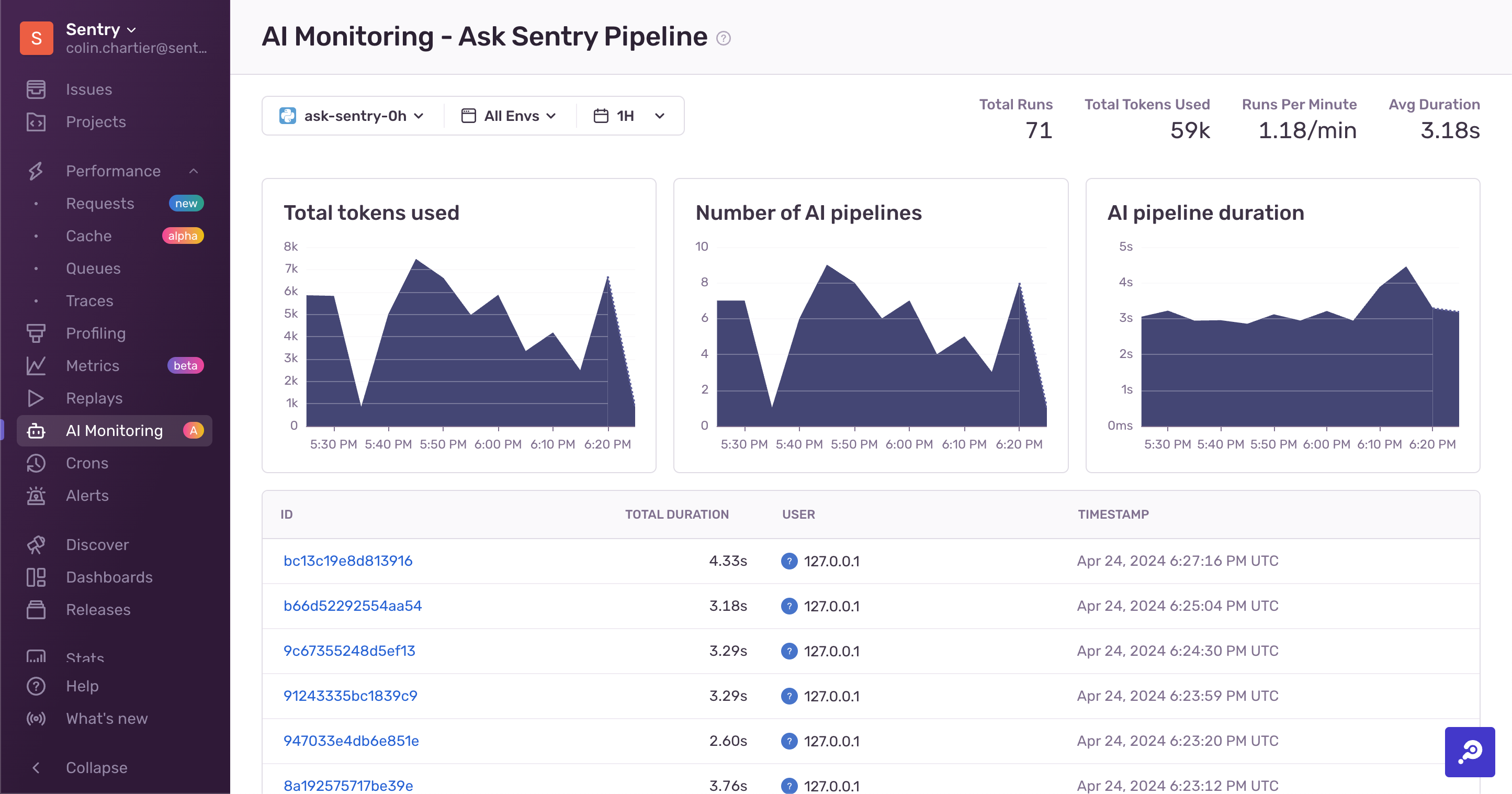

Performance Tuning And Monitoring With Azure Storage Metrics And Alerts