Python Multiprocessing Vs Multithreading

To make my code more "pythonic" and faster, I use multiprocessing and a map function, passing it (a) the function and (b) the range of iterations. The implemented solution (i.e., calling tqdm ...

I like pathos.multiprocessing, which can serve as an almost drop-in replacement for a non-parallel map while still getting the benefit of multiple processes. I have a simple wrapper around pathos.multiprocessing.map that is more memory-efficient when processing a large read-only data structure across multiple cores; see this git repository.
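A minimal sketch of the "pool plus map" pattern described above, using only the standard library rather than pathos or tqdm. The names `square` and `parallel_map` and the `use_threads` switch are hypothetical, introduced only for illustration; the switch swaps in `multiprocessing.pool.ThreadPool`, which exposes the same `map()` interface.

```python
import multiprocessing
from multiprocessing.pool import ThreadPool


def square(n):
    """Hypothetical CPU-bound task standing in for the real per-iteration work."""
    return n * n


def parallel_map(func, iterations, processes=2, use_threads=False):
    """Apply func to every item of iterations using a pool of workers.

    use_threads=True uses ThreadPool instead of a process pool. Threads avoid
    pickling the function and arguments, but they share one GIL, so they do
    not speed up CPU-bound pure-Python work.
    """
    pool_cls = ThreadPool if use_threads else multiprocessing.Pool
    with pool_cls(processes) as pool:
        # map() blocks until every result is ready and preserves input order.
        return pool.map(func, iterations)


if __name__ == "__main__":
    # The guard matters for process pools: child processes may re-import
    # this module, and they must not start pools of their own.
    print(parallel_map(square, range(10)))
```

With a process pool, the function passed to `map()` has to be picklable, which in practice means defined at module level. That restriction is one reason people reach for pathos, which serializes with dill.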

I'm having a lot of trouble understanding how the multiprocessing queue works in Python and how to implement it. Let's say I have two Python modules that access data from a shared file, ...

The multiprocessing.Pool class tries to provide a similar interface. Pool.apply is like Python 2's built-in apply, except that the function call is performed in a separate process. Pool.apply blocks until the function is completed. Pool.apply_async is also like the built-in apply, except that the call returns immediately instead of waiting for the result.

Sorry for the vagueness. I'm running a similar type of program with multiprocessing inside class functions. I just confirmed that I do have dill, so I'm not sure why cPickle is being called.

What are the fundamental differences between queues and pipes in Python's multiprocessing package? In what scenarios should one choose one over the other? When is it advantageous to use Pipe()?
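To make the difference between the blocking and non-blocking calls concrete, here is a small sketch. The helper names `slow_double` and `compare_apply` and the 0.2 second delay are assumptions chosen for illustration; `apply`, `apply_async`, `ready()` and `get()` are the standard pool and `AsyncResult` methods.

```python
import multiprocessing
import time
from multiprocessing.pool import ThreadPool


def slow_double(x, delay=0.2):
    """Hypothetical task: sleep briefly, then return twice the input."""
    time.sleep(delay)
    return 2 * x


def compare_apply(pool):
    """Return (blocking_result, ready_immediately, async_result) for a pool."""
    # apply() blocks: control returns only after slow_double has finished.
    blocking_result = pool.apply(slow_double, (10,))

    # apply_async() returns an AsyncResult handle right away.
    handle = pool.apply_async(slow_double, (21,))
    ready_immediately = handle.ready()  # the task is still sleeping here
    async_result = handle.get(timeout=5)  # get() blocks until the value arrives
    return blocking_result, ready_immediately, async_result


if __name__ == "__main__":
    # Process pools and thread pools expose the same apply/apply_async calls.
    for pool_cls in (multiprocessing.Pool, ThreadPool):
        with pool_cls(2) as pool:
            print(compare_apply(pool))
```

Because `apply_async` hands back a handle instead of a value, several tasks can be submitted first and collected afterwards, which is what allows them to run concurrently.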

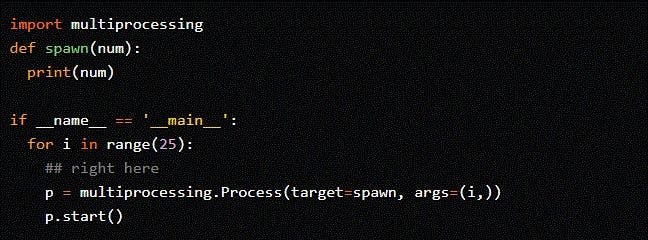

I've looked into multiprocessing.Queue, but it doesn't look like what I need, or perhaps I'm interpreting the docs incorrectly. Is there a way to limit the number of simultaneous multiprocessing.Process instances running?

Unlike multiprocessing.Pool, multiprocessing.pool.ThreadPool also works in Jupyter notebooks. I made a generic pool class that works in both classic and interactive Python interpreters.

I want to profile a simple multi-process Python script. I tried code that imports multiprocessing, cProfile and time, and defines `worker(num)`, which calls `time.sleep(3)` and then prints `'worker:', num`, followed by an `if __name__` ...

I'm trying to port some "parallel" Python code to Azure Databricks. The code runs perfectly fine locally, but somehow doesn't on Azure Databricks. The code leverages the multiprocess ...
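One common way to cap how many workers run at once is to give a pool a fixed size instead of starting each worker by hand. The sketch below is an illustration under assumptions, not the generic pool class mentioned above: `PeakCounter` and `run_limited` are hypothetical names, and it uses `ThreadPool` so that it also runs in interactive sessions.

```python
import threading
import time
from multiprocessing.pool import ThreadPool


class PeakCounter:
    """Callable task that records how many calls were running at the same time."""

    def __init__(self):
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0

    def __call__(self, item):
        with self._lock:
            self._active += 1
            self.peak = max(self.peak, self._active)
        time.sleep(0.05)  # hypothetical stand-in for real work
        with self._lock:
            self._active -= 1
        return item


def run_limited(task, items, max_workers):
    """Run task over items with at most max_workers calls in flight at once."""
    with ThreadPool(processes=max_workers) as pool:
        return pool.map(task, items)


if __name__ == "__main__":
    counter = PeakCounter()
    results = run_limited(counter, range(8), max_workers=3)
    print(results, "peak concurrency:", counter.peak)
```

`multiprocessing.Pool(processes=max_workers)` bounds the number of worker processes in the same way. The counter here only works with threads, though, because its lock and attributes are not shared across processes.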
