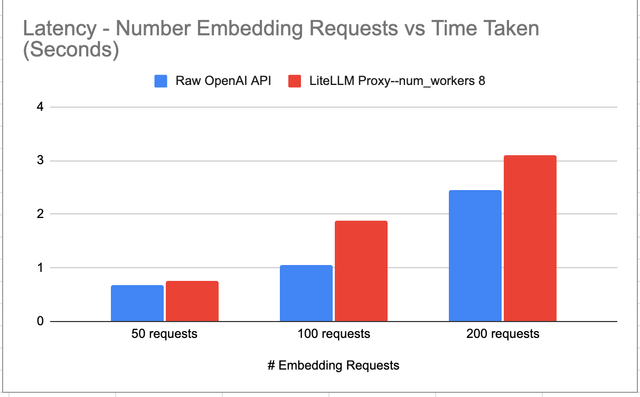

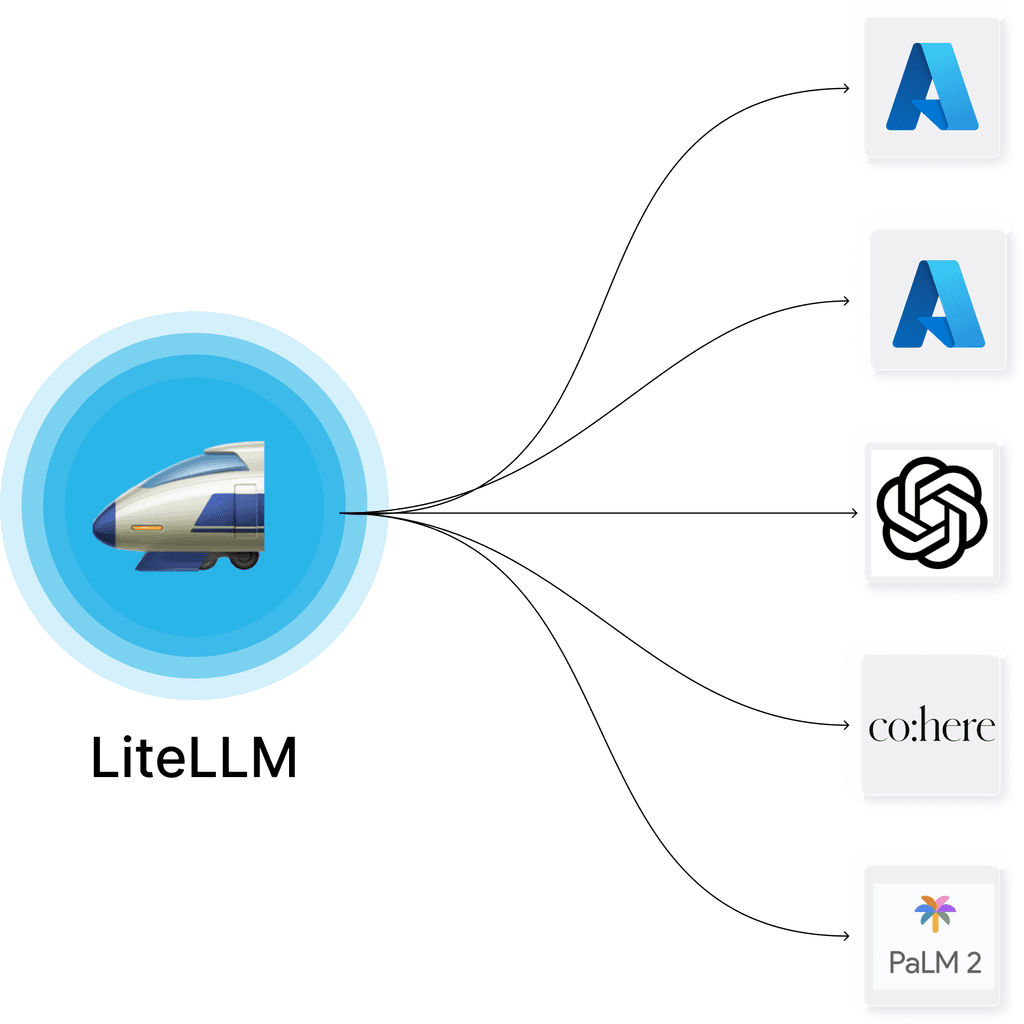

LiteLLM Proxy Performance

Everything worked smoothly until recently, when I encountered issues connecting to the Mistral and Perplexity models. To diagnose the issue, I tested the LiteLLM proxy independently using curl commands, ensuring the correct "user" role and the same config.yaml configuration I use in Open WebUI.

This release brings support for proxy admins to select which specific models to health check and to see each model's health status as soon as its individual check completes, along with last check times.
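The curl-based check described above can be approximated in Python. This is a minimal sketch, not the poster's actual command: it assumes the proxy exposes an OpenAI-compatible `/chat/completions` route and accepts a bearer key, and the base URL, key, and model alias are hypothetical placeholders that would need to match your own config.yaml.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request with a single "user" message."""
    payload = {
        "model": model,  # assumed to be a model alias defined in config.yaml
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and return the decoded JSON body (raises on HTTP errors)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `send(build_chat_request("http://localhost:4000", "sk-placeholder", "my-mistral-alias", "ping"))` against a running proxy isolates the proxy from Open WebUI, so a failure here points at the proxy or the upstream provider rather than at the UI.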

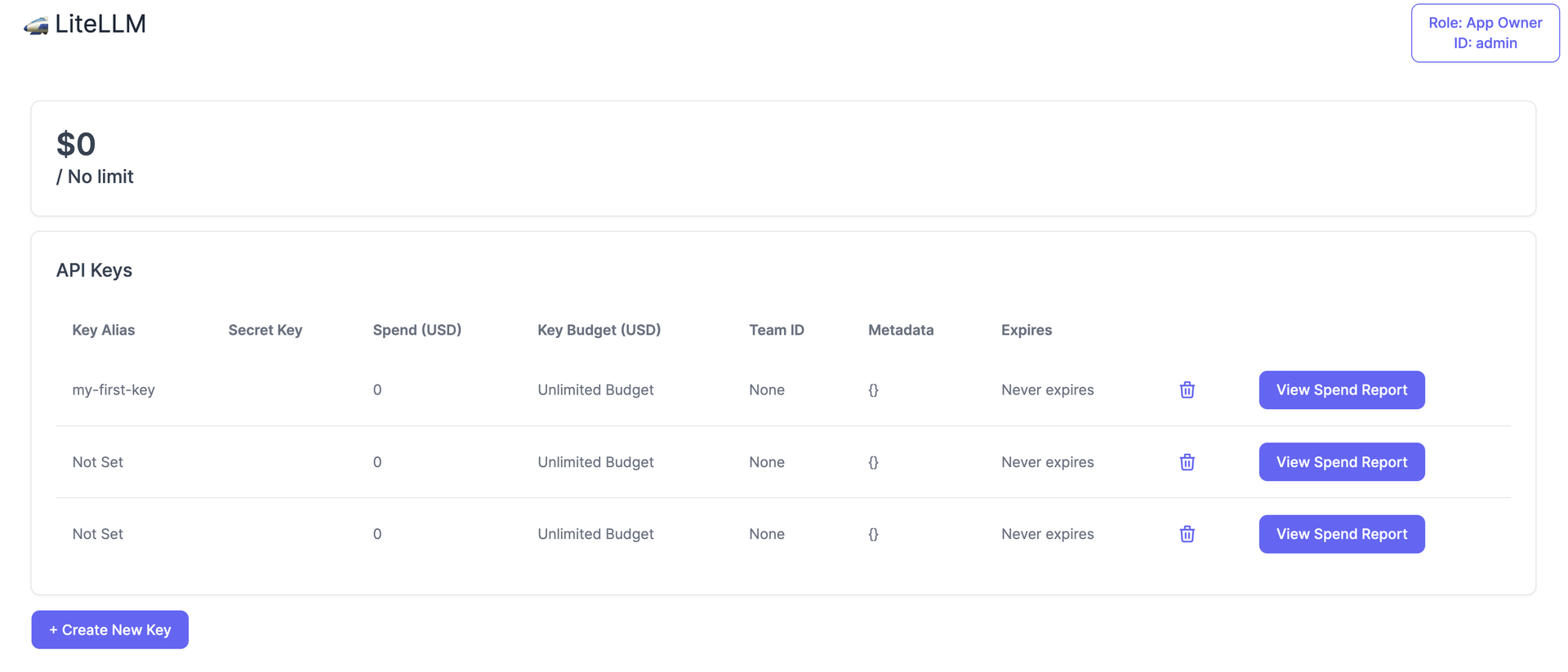

Quick Start

Since updating to the new CrewAI version 0.102.0, I have been facing a lot of issues related to timeouts when using local models with Ollama. Does anyone else face the same issue?

Hi, I'm running the main latest branch in a Docker image and having a strange problem with my setup regarding the "huggingface meta llama llama 3.3 70b instruct" model. In the LiteLLM playground on my installation, chat queries work seamlessly.

Add new models and get model info without restarting the proxy. Retrieve detailed information about each model listed in the model info endpoint, including descriptions from the config.yaml file and additional model info (e.g. max tokens, cost per input token) pulled from the model info you set and the LiteLLM model cost map.

LiteLLM v1.70.1 stable is live now. Here are the key highlights of this release:

- Spend logs retention period: enable deleting spend logs older than a certain period.
- Email invites 2.0: send new users onboarded to LiteLLM an email invite.
- Nscale: LLM API for compliance with European regulations.

I might have found the issue: the handler for Azure AI models defers to the OpenAI handler, which initializes a regular OpenAI client that passes a bearer token auth header, rather than the AzureOpenAI client, which passes the API key header.

The config.yaml is a YAML-formatted file that consolidates all the settings needed to define how your proxy will operate, including which LLM models you'll use, how to connect to them, how to route requests, how to manage authentication, and how to customize various aspects of the proxy's behavior.

While 200 and 429 are expected, the 500 isn't. The error message doesn't say why models aren't available. If it's because of quota, why not return 429? It would be good to know whether the error is recoverable. The error is repeatable. The proxy log shows: `litellm proxy: inside proxy logging pre call hook!`
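The question of whether an error is recoverable can be made explicit on the client side. The following is a hedged sketch of one possible policy, not LiteLLM's own behavior: it treats 429 as a signal to back off and retry, and flags any 5xx as ambiguous because, as noted above, the response does not say whether the cause is quota or something else. The `Outcome` names and the backoff parameters are illustrative assumptions.

```python
from enum import Enum


class Outcome(Enum):
    SUCCESS = "success"
    RETRY_AFTER_BACKOFF = "retry_after_backoff"    # rate limited or over quota
    UNKNOWN_SERVER_ERROR = "unknown_server_error"  # recoverability unclear
    OTHER = "other"                                # anything else, not retried here


def classify_status(status: int) -> Outcome:
    """Map an HTTP status code to a client-side handling decision."""
    if 200 <= status < 300:
        return Outcome.SUCCESS
    if status == 429:
        return Outcome.RETRY_AFTER_BACKOFF
    if 500 <= status < 600:
        return Outcome.UNKNOWN_SERVER_ERROR
    return Outcome.OTHER


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 8.0) -> list[float]:
    """Exponential backoff schedule in seconds, doubling each attempt up to a cap."""
    return [min(cap, base * (2 ** i)) for i in range(attempts)]
```

With this split, a caller can retry on `RETRY_AFTER_BACKOFF` using the delays from `backoff_delays`, while logging and surfacing `UNKNOWN_SERVER_ERROR` instead of retrying blindly. If the proxy returned 429 for quota exhaustion, as the post suggests, the second branch would cover it without any guessing.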
