How to Deploy NVIDIA Inference Microservices (NIMs) on Vultr | Vultr Docs

Learn about the NIM Operator's components and workflow through this short tutorial, and how you can get started serving large language models and other NVIDIA inference microservices. The NIM Operator facilitates this with simplified, lightweight deployment and manages the lifecycle of AI NIM inference pipelines on Kubernetes. The NIM Operator also supports pre-caching models to enable faster initial inference, as well as autoscaling.
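
As an illustration of pre-caching, here is a minimal Python sketch that builds a `NIMCache` manifest, the NIM Operator custom resource that downloads model weights into a PersistentVolumeClaim before any serving pod starts. It assumes the `apps.nvidia.com/v1alpha1` API group and the field names shown in the operator's published samples; the secret names, image tag, and storage class are hypothetical placeholders. Check the schema against the CRD installed in your cluster (for example with `kubectl explain nimcache.spec`) before applying anything.

```python
import json

# Assumption: NIM Operator v1alpha1 API group and NIMCache field names as shown
# in the operator's published samples; confirm with `kubectl explain nimcache.spec`.
API_VERSION = "apps.nvidia.com/v1alpha1"


def nimcache_manifest(name: str, model_puller: str, storage_class: str,
                      size: str = "50Gi",
                      access_mode: str = "ReadWriteMany") -> dict:
    """Build a NIMCache resource that pre-downloads a model into a new PVC."""
    return {
        "apiVersion": API_VERSION,
        "kind": "NIMCache",
        "metadata": {"name": name},
        "spec": {
            "source": {
                "ngc": {
                    # Container image used to pull the model weights from NGC.
                    "modelPuller": model_puller,
                    # Hypothetical secret names: image pull secret and NGC API key.
                    "pullSecret": "ngc-secret",
                    "authSecret": "ngc-api-secret",
                }
            },
            "storage": {
                "pvc": {
                    "create": True,
                    # The storage class must support the requested access mode.
                    "storageClass": storage_class,
                    "size": size,
                    "volumeAccessMode": access_mode,
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = nimcache_manifest(
        name="meta-llama3-8b-instruct",
        model_puller="nvcr.io/nim/meta/llama3-8b-instruct:1.0.3",  # example tag
        storage_class="my-rwx-storage-class",  # hypothetical storage class
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML. Generating the manifest in code is only a convenience; the same structure written by hand in YAML works equally well.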

NVIDIA Inference Microservice (NIM): Be on the Right Side of Change

NVIDIA NIM microservices deliver AI foundation models as accelerated inference microservices that are portable across data center, workstation, and cloud, speeding up generative AI development and deployment and shortening time to value. To use the operator in your cluster, refer to the NIM Operator documentation for installation and configuration information.

You'll learn how to deploy a containerized AI model, specifically the Llama 3 8B Instruct model, and interact with it using simple API calls. These steps demonstrate how to use NVIDIA GPU acceleration for AI inference in a secure, self-hosted environment.

The NVIDIA NIM Operator is a Kubernetes operator designed to facilitate the deployment, management, and scaling of NVIDIA Inference Microservices (NIM) and NeMo microservices on Kubernetes clusters. It extends the Kubernetes API with custom resources for efficient AI model deployment and management. Using the NIM Operator simplifies the operation and lifecycle management of NIM and NeMo microservices at scale and at the cluster level: its custom resources simplify the deployment and lifecycle management of multiple AI inference pipelines, such as retrieval-augmented generation (RAG) and multiple LLM inference services.
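
The simple API calls mentioned above can be sketched with Python's standard library alone. This assumes the Llama 3 8B Instruct NIM exposes an OpenAI-compatible `/v1/chat/completions` endpoint on port 8000 and that the model is named `meta/llama3-8b-instruct`; the in-cluster URL `http://meta-llama3-8b-instruct:8000` is a hypothetical Service address to replace with your own (or with a local `kubectl port-forward` target).

```python
import json
import urllib.request

# Assumptions: OpenAI-compatible NIM endpoint on port 8000, reachable at this
# hypothetical Service URL, serving a model named "meta/llama3-8b-instruct".
BASE_URL = "http://meta-llama3-8b-instruct:8000"
MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Prepare a POST to /v1/chat/completions with a single user message."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]


def chat(prompt: str) -> str:
    """Send one request and decode the reply (needs a running NIM endpoint)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        return extract_reply(json.load(resp))
```

Once the service is ready, `print(chat("Explain what a Kubernetes operator does in one sentence."))` sends a single request. Keeping request construction and response parsing in separate functions makes both testable without a live GPU endpoint.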

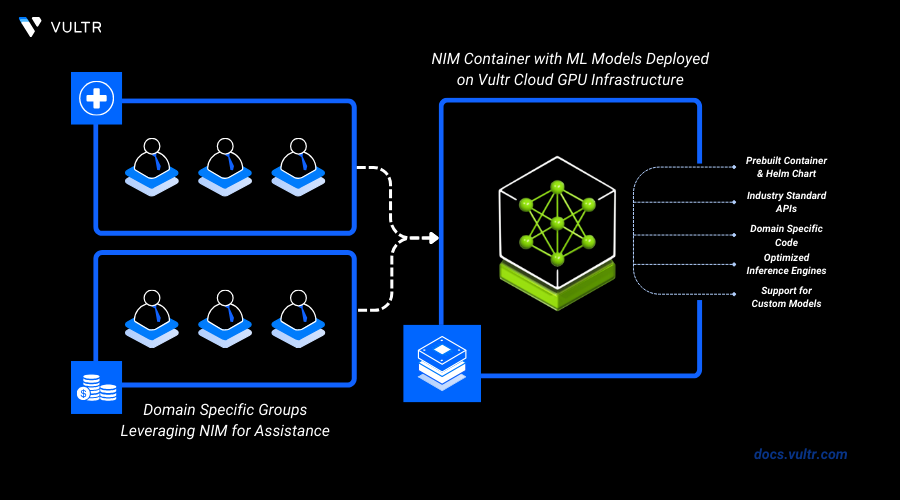

NIM is a set of microservices designed to automate the deployment of generative AI inference applications. NIM was built with flexibility in mind: it supports a wide range of GenAI models and also enables frictionless scaling of GenAI inference.

The NIM deployment repository contains reference implementations, example documents, and architecture guides that can serve as a starting point for deploying multiple NIMs and other NVIDIA microservices to Kubernetes and other production environments.

With the NIM Operator, developers can deploy, scale, and manage NIM microservices with just a few clicks or commands. The operator also supports pre-caching models for faster initial inference and enables autoscaling based on resource availability. The first release of the NVIDIA NIM Operator simplified the deployment and lifecycle management of inference pipelines for NVIDIA NIM microservices, reducing the workload for MLOps and LLMOps engineers and Kubernetes administrators.
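
To show what deploying with a few commands can look like, this Python sketch builds a `NIMService` manifest that serves a model from the weights a `NIMCache` resource has already downloaded. As before, the `apps.nvidia.com/v1alpha1` field names follow the operator's published samples and should be checked against your installed CRD; the secret names and image tag are hypothetical. Autoscaling settings are deliberately left out, because their exact schema depends on the operator version; consult the NIM Operator documentation for that part.

```python
import json

API_VERSION = "apps.nvidia.com/v1alpha1"  # assumption: NIM Operator v1alpha1 API group


def nimservice_manifest(name: str, image_repo: str, image_tag: str,
                        nimcache_name: str, replicas: int = 1,
                        gpus: int = 1, port: int = 8000) -> dict:
    """Build a NIMService that serves a model from an existing NIMCache."""
    if replicas < 1 or gpus < 1:
        raise ValueError("replicas and gpus must be positive")
    return {
        "apiVersion": API_VERSION,
        "kind": "NIMService",
        "metadata": {"name": name},
        "spec": {
            "image": {
                "repository": image_repo,
                "tag": image_tag,
                "pullPolicy": "IfNotPresent",
                "pullSecrets": ["ngc-secret"],  # hypothetical pull secret name
            },
            "authSecret": "ngc-api-secret",  # hypothetical NGC API key secret
            # Mount the weights the referenced NIMCache already downloaded.
            "storage": {"nimCache": {"name": nimcache_name}},
            "replicas": replicas,
            # Each replica requests this many GPUs from the device plugin.
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
            "expose": {"service": {"type": "ClusterIP", "port": port}},
        },
    }


if __name__ == "__main__":
    svc = nimservice_manifest(
        name="meta-llama3-8b-instruct",
        image_repo="nvcr.io/nim/meta/llama3-8b-instruct",
        image_tag="1.0.3",  # example tag; use the one matching your NIMCache
        nimcache_name="meta-llama3-8b-instruct",
    )
    print(json.dumps(svc, indent=2))
```

Raising `replicas` scales the deployment horizontally; each replica requests `gpus` GPUs through the `nvidia.com/gpu` resource advertised by the NVIDIA device plugin, so the cluster needs that many free GPUs per replica.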
