How to Run Open Source LLMs Locally Using Ollama

This guide shows how to set up and run large language models (LLMs) locally using Ollama and Open WebUI on Windows, Linux, or macOS. Ollama provides local model inference, and Open WebUI is a user interface that simplifies interacting with these models. You can deploy the pair either with Docker Compose or with a manual setup that does not need Docker. Running open source language models on your own hardware gives you data privacy, cost savings, and room for customization without complex configuration.
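To make the split between the inference server and the user interface concrete, here is a minimal sketch of how any client could ask a local Ollama server which models it already has. It assumes Ollama is listening on its default address, `http://localhost:11434`, and uses the `/api/tags` endpoint of Ollama's HTTP API; the function names are illustrative and not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    payload = json.loads(tags_json)
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

Calling `list_local_models()` only works while the Ollama server is running. If it raises a connection error, the server is probably not started or is listening on a different address.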

Ollama + Open WebUI: A Way to Run LLMs Locally

Here's how to run your own little ChatGPT locally, using Ollama and Open WebUI in Docker. This is the first post in a series about running LLMs locally; the second part is about connecting Stable Diffusion WebUI to your locally running Open WebUI. You can enable rootless Docker with a Nix configuration. Running LLMs locally keeps your data on your own machine, and Ollama is an open source tool for doing exactly that. In this article, you will learn how to access LLMs such as Meta Llama 3, Mistral, Gemma, and Phi from your Linux terminal using Ollama, and then reach a chat interface from your browser using Open WebUI. In just three steps, you can be chatting with your favorite LLMs locally in "no frills" mode. If you're aiming for the full ChatGPT-like experience offline, complete with a sleek interface and features like "chat with your PDF," it takes only seven steps.
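The "no frills" mode can also be driven from a script instead of the terminal. The following is a minimal sketch, assuming an Ollama server on the default `http://localhost:11434` and a model that has already been pulled (the name `llama3` is only an example). It sends a single prompt to the `/api/generate` endpoint with streaming turned off; the helper names are hypothetical.

```python
import json
import urllib.request


def build_generate_request(prompt, model="llama3", base_url="http://localhost:11434"):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", base_url="http://localhost:11434"):
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model, base_url)
    # Local generation can be slow on modest hardware, hence the long timeout.
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(generate("Why is the sky blue?"))` would print the model's answer. Keeping the request construction separate from the network call makes it easy to inspect what is being sent.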

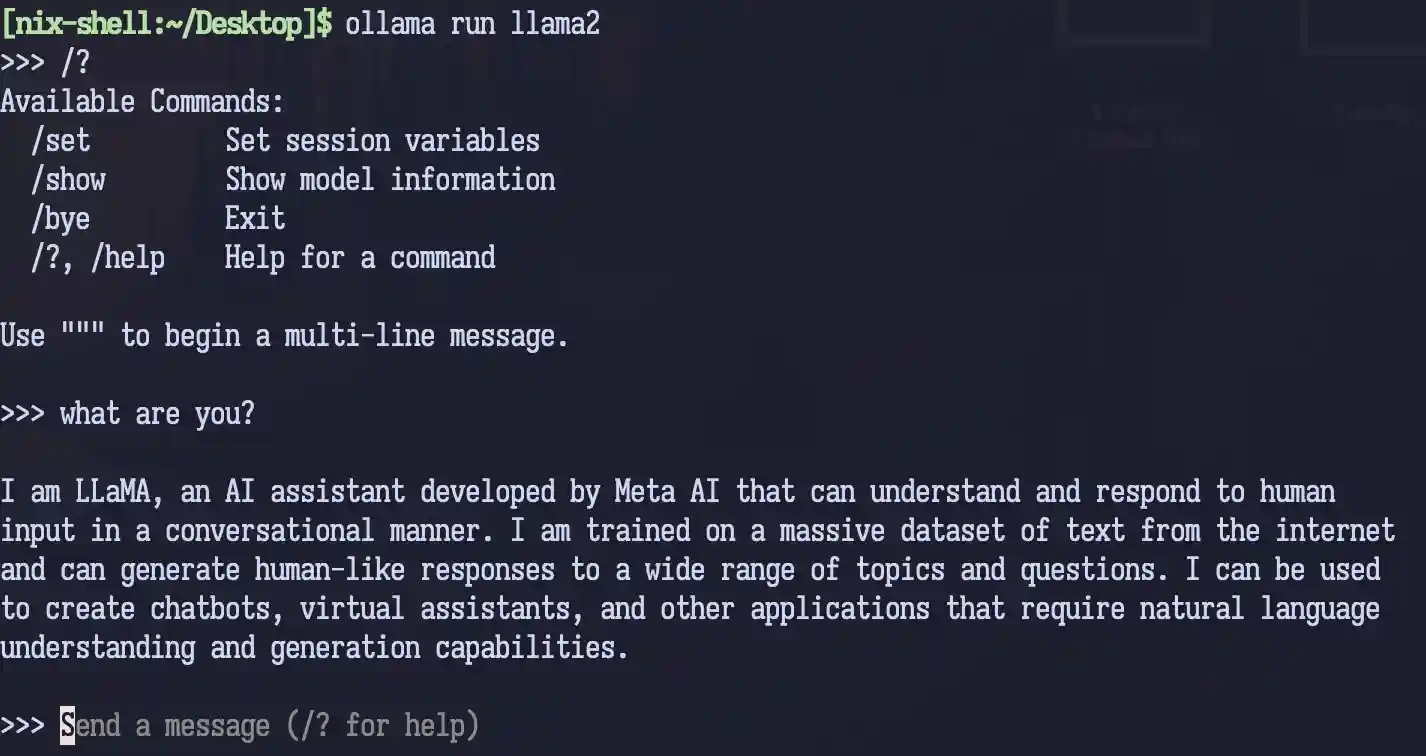

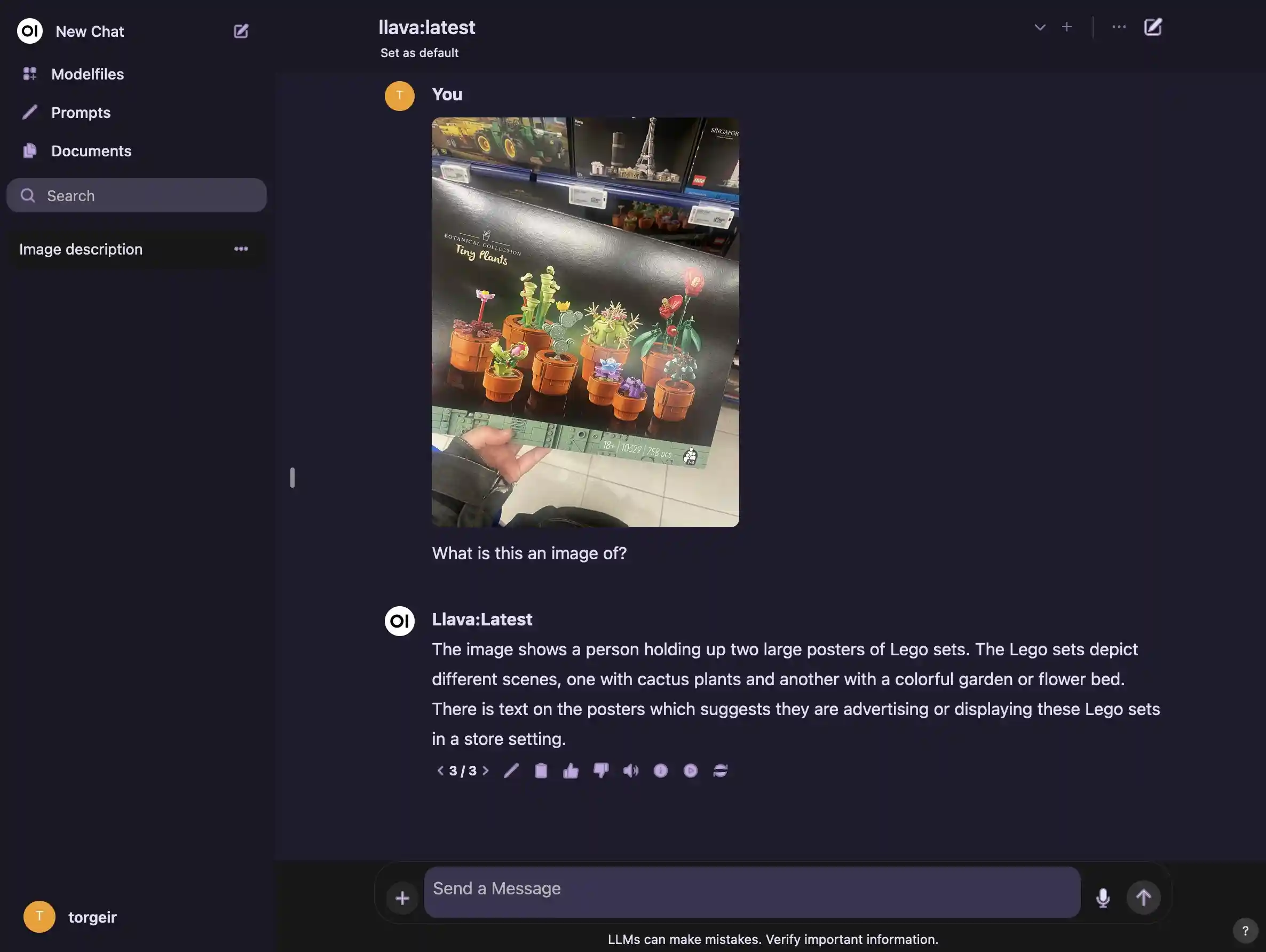

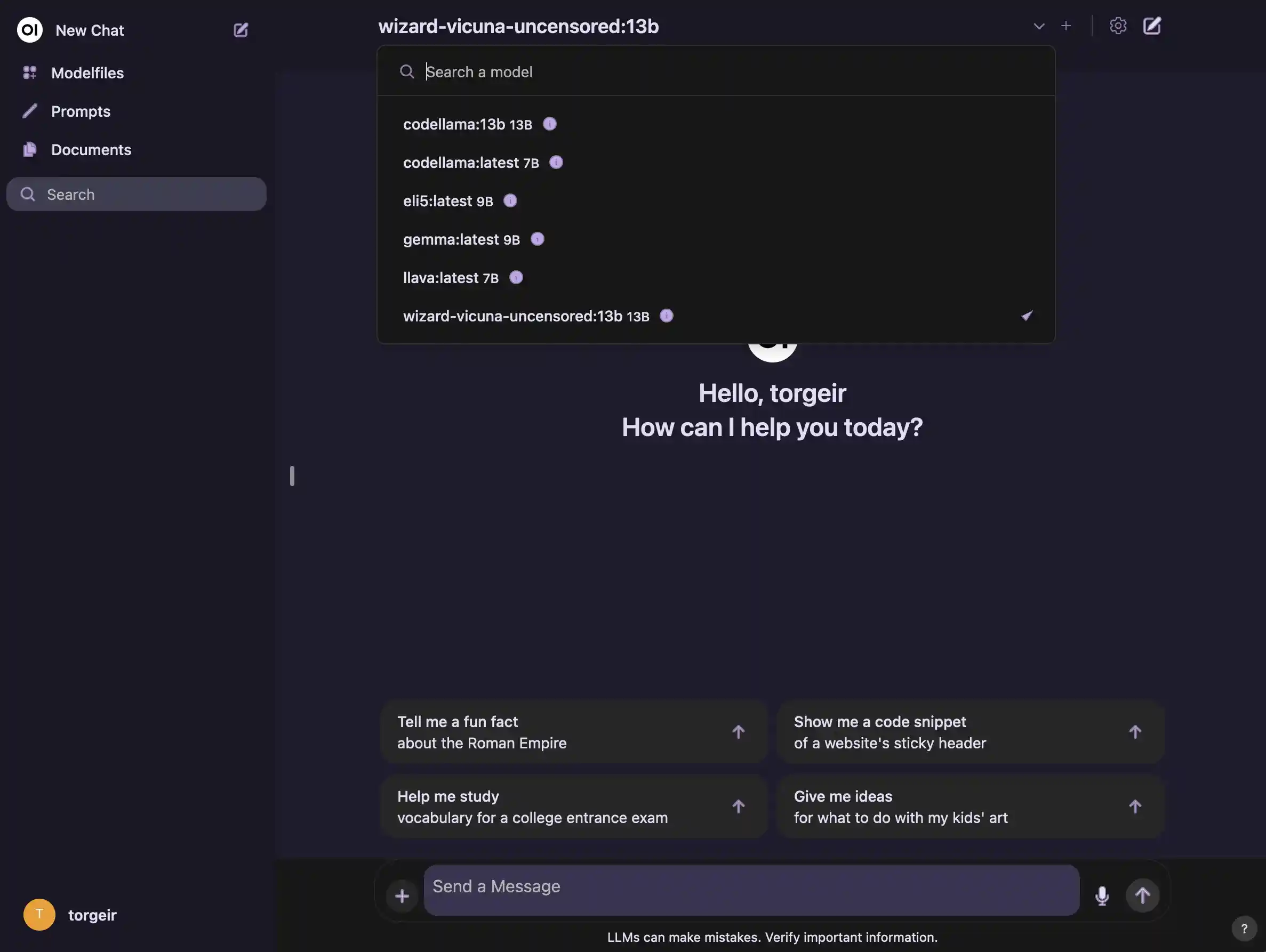


Running LLMs Locally with Ollama and Open WebUI (Torgeir Dev)

Ollama and Open WebUI are tools that let software developers run LLMs on their own machines. Ollama provides a simple interface for managing and running various LLMs locally, while Open WebUI offers a user-friendly, browser-based interface for interacting with these models. Ollama runs LLMs locally for free, giving you full control over your data and performance. This tutorial walks step by step through installing Ollama, running models like Llama 2, using the built-in HTTP API, and creating custom models tailored to your needs.

What is Ollama? Ollama is a tool for getting up and running with LLMs on your local machine. It is part of a wave of new technology that includes openly released models such as Llama 3.1 from Meta, Mistral, and many more. The big question on your mind might be: why would anyone want to run an LLM locally?

By the end of this guide, you will have a fully functional LLM running locally on your machine. Before starting, ensure that you have Docker installed. Docker Compose is optional, and is worth installing if you plan to manage multi-container applications.
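Custom models in Ollama are described by a Modelfile, a short text file that names a base model and overrides settings such as the system prompt. As a rough sketch of what that looks like, the helper below renders a minimal Modelfile using the `FROM`, `PARAMETER`, and `SYSTEM` instructions. The function name, the default temperature, and the example prompt are assumptions made for illustration.

```python
def render_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Render a minimal Ollama Modelfile as text."""
    lines = [
        f"FROM {base_model}",                      # base model to build on
        f"PARAMETER temperature {temperature}",    # sampling temperature
        f'SYSTEM """{system_prompt}"""',           # system prompt for every chat
    ]
    return "\n".join(lines) + "\n"


def write_modelfile(path: str, base_model: str, system_prompt: str) -> None:
    """Write the rendered Modelfile to disk so the ollama CLI can read it."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(render_modelfile(base_model, system_prompt))
```

After writing the file, for example with `write_modelfile("Modelfile", "llama2", "You answer briefly.")`, the model could be built with `ollama create my-assistant -f Modelfile` and started with `ollama run my-assistant`, where `my-assistant` is a placeholder name.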
