Spark With SQL Server Read and Write Table - Spark By Examples

Can we connect to SQL Server (MSSQL) from PySpark, read a table into a PySpark DataFrame, and write a DataFrame back to a SQL table? Yes. To connect, and to write data from a Spark DataFrame into a SQL Server table, we need the SQL Server JDBC connector. We also need to provide basic configuration values such as the connection string, user name, and password, just as we did when reading data from SQL Server.
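
A minimal sketch of the connection settings described above, assuming Microsoft's mssql-jdbc driver JAR is already on Spark's classpath. The host, port, database, table name, and credentials are placeholders, and the helper names `sqlserver_jdbc_url`, `connection_properties`, and `write_table` are hypothetical. The only PySpark API used is `DataFrameWriter.jdbc(url, table, mode, properties)`.

```python
# Sketch: SQL Server JDBC connection settings for PySpark.
# Assumptions (hypothetical, not from the original article): host, port,
# database, table and credentials are placeholders supplied by the caller.

# Driver class shipped in Microsoft's mssql-jdbc JAR.
SQLSERVER_DRIVER = "com.microsoft.sqlserver.jdbc.SQLServerDriver"


def sqlserver_jdbc_url(host, port=1433, database=None, **extra):
    """Build a jdbc:sqlserver:// connection string.

    Extra keyword arguments become ;key=value pairs (for example
    encrypt=True). Booleans are lower-cased because the driver expects
    "true"/"false".
    """
    parts = [f"jdbc:sqlserver://{host}:{port}"]
    if database:
        parts.append(f"databaseName={database}")
    for key, value in extra.items():
        if isinstance(value, bool):
            value = str(value).lower()
        parts.append(f"{key}={value}")
    return ";".join(parts)


def connection_properties(user, password, driver=SQLSERVER_DRIVER):
    """Properties dict accepted by DataFrameReader.jdbc / DataFrameWriter.jdbc."""
    return {"user": user, "password": password, "driver": driver}


def write_table(df, url, table, props, mode="append"):
    """Write a Spark DataFrame to a SQL Server table over JDBC."""
    df.write.jdbc(url=url, table=table, mode=mode, properties=props)


# Usage with a real SparkSession (the mssql-jdbc JAR must be on the classpath,
# e.g. via spark-submit --jars /path/to/mssql-jdbc.jar):
#
#   url = sqlserver_jdbc_url("localhost", database="testdb", encrypt=True,
#                            trustServerCertificate=True)
#   props = connection_properties("sa", "<password>")
#   write_table(df, url, "dbo.employee", props, mode="overwrite")
```

Keeping the URL and the credentials separate mirrors the `url` and `properties` arguments that `spark.read.jdbc()` and `df.write.jdbc()` both take, so the same two values can be reused for reading and writing.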

PySpark Read and Write SQL Server Table - Spark By Examples

I am using the code below to write a DataFrame of 43 columns and about 2,000,000 rows into a table in SQL Server: `dataframe.write.format("jdbc").mode("overwrite").option("driver", "com.…`

This tutorial focuses specifically on PySpark read and write operations against MSSQL; consequently, only the `read` and `write` interfaces are discussed.

In this article, we will see how to use PySpark to connect to a SQL database, with Python code examples: reading data from the database, performing a transformation, and then writing the results back. Once you have established a connection to SQL Server, you can use Spark's DataFrame API to read data from and write data to a Microsoft SQL Server database. To read data from SQL Server into Spark, you can use the `spark.read.jdbc()` method.
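
The read, transform, and write-back flow can be sketched as follows. This is an illustration under stated assumptions, not the article's original code: the table names `dbo.sales` and `dbo.sales_positive`, the column `amount`, and the `batch_size`/`num_partitions` defaults are hypothetical. The options `batchsize`, `numPartitions`, and `truncate` are standard Spark JDBC data source options and are the usual first settings to look at for a large write such as the 2,000,000-row one above; suitable values depend on the server and network and should be measured.

```python
# Sketch: read a SQL Server table, transform it, write the result back.
# Assumptions (hypothetical): table names dbo.sales / dbo.sales_positive,
# a numeric column "amount", and the batch/partition defaults below.

SQLSERVER_DRIVER = "com.microsoft.sqlserver.jdbc.SQLServerDriver"


def jdbc_write_options(url, table, user, password,
                       batch_size=10000, num_partitions=8, truncate=True):
    """Options for df.write.format("jdbc") aimed at large writes.

    batchsize      rows sent per JDBC batch insert (Spark's default is 1000)
    numPartitions  cap on parallel connections; if the DataFrame has more
                   partitions, Spark coalesces it to this number first
    truncate       with mode("overwrite"), truncate the existing table
                   instead of dropping and recreating it
    """
    return {
        "url": url,
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": SQLSERVER_DRIVER,
        "batchsize": str(batch_size),
        "numPartitions": str(num_partitions),
        "truncate": str(truncate).lower(),
    }


def read_transform_write(spark, url, user, password,
                         source="dbo.sales", target="dbo.sales_positive"):
    props = {"user": user, "password": password, "driver": SQLSERVER_DRIVER}

    # 1. Read: spark.read.jdbc() returns a DataFrame for the source table.
    df = spark.read.jdbc(url=url, table=source, properties=props)

    # 2. Transform: keep rows with a positive amount (SQL expression string).
    result = df.filter("amount > 0")

    # 3. Write back, overwriting the target table's contents.
    (result.write.format("jdbc")
        .options(**jdbc_write_options(url, target, user, password))
        .mode("overwrite")
        .save())
    return result
```

Using `truncate` together with `mode("overwrite")` keeps the existing table definition (column types, indexes) in SQL Server rather than letting Spark recreate the table from the DataFrame schema.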

In this post, we are going to demonstrate how we can use Apache Spark to read and write data to a SQL Server table. This can be very handy if we need to read a huge amount of data from …

To run SQL queries in PySpark, you first need to load your data into a DataFrame. DataFrames are the primary data structure in Spark, and they can be created from various data sources, such as CSV, JSON, and Parquet files, as well as Hive tables and JDBC databases. For example, a CSV file can be loaded into a DataFrame with `spark.read.csv()`.

In this article, you have learned what the PySpark SQL module is, its advantages, its important classes, and how to run SQL-like operations on DataFrames and on temporary tables. You can also create and manage SQL tables from PySpark DataFrames, perform bulk writes, and query those tables using Spark SQL or Synapse SQL on-demand.
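
A short sketch of the workflow described above: load a CSV file into a DataFrame, register it as a temporary view, query it with Spark SQL, and save the result as a table. The file path, view name, and table name are hypothetical, and the CSV is assumed to have a header row. `spark.read.csv`, `createOrReplaceTempView`, `spark.sql`, and `saveAsTable` are standard PySpark APIs; the wrapper function names are illustrative only.

```python
# Sketch: load a CSV file, expose it to Spark SQL, query it, and persist
# the result as a table. Assumptions (hypothetical): the file path, a header
# row in the CSV, and the names "people" / "people_top".


def csv_to_view(spark, path, view_name):
    """Load a CSV into a DataFrame and register it as a temporary view."""
    df = spark.read.csv(path, header=True, inferSchema=True)
    df.createOrReplaceTempView(view_name)  # visible to spark.sql() in this session
    return df


def query_view(spark, view_name, limit=10):
    """Run a Spark SQL query against the temporary view."""
    # view_name is pasted into the SQL text, so pass trusted identifiers only.
    return spark.sql(f"SELECT * FROM {view_name} LIMIT {int(limit)}")


def save_as_table(df, table_name):
    """Persist a DataFrame as a table in the session catalog."""
    df.write.mode("overwrite").saveAsTable(table_name)


# Usage with a real SparkSession:
#
#   from pyspark.sql import SparkSession
#   spark = SparkSession.builder.appName("csv-sql").getOrCreate()
#   csv_to_view(spark, "/data/people.csv", "people")
#   top = query_view(spark, "people", limit=5)
#   save_as_table(top, "people_top")
```

A temporary view lives only as long as the SparkSession, whereas `saveAsTable` writes the data and registers the table in the catalog so later sessions can query it.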

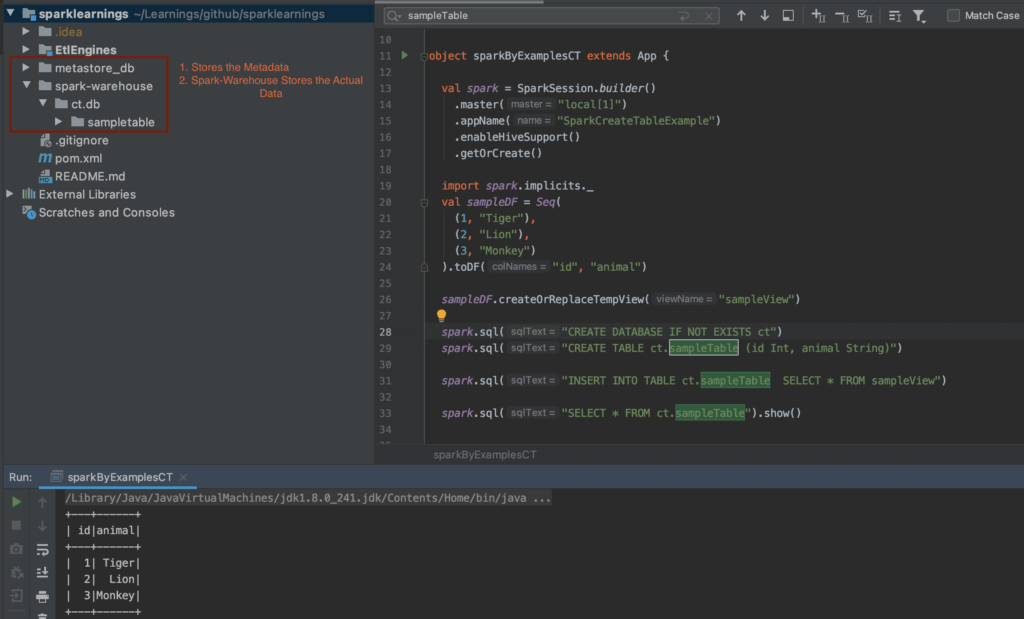

Create SQL Hive Table in Spark (PySpark) - Spark By Examples