PySpark Tutorial For Beginners With Examples

The PySpark SQL DataFrame API provides a high-level abstraction for working with structured and tabular data in PySpark. It offers functionality to manipulate, transform, and analyze data through a DataFrame-based interface. PySpark, the Python API for Apache Spark, has changed how many teams handle big data. In this tutorial, we'll explore PySpark with Databricks.

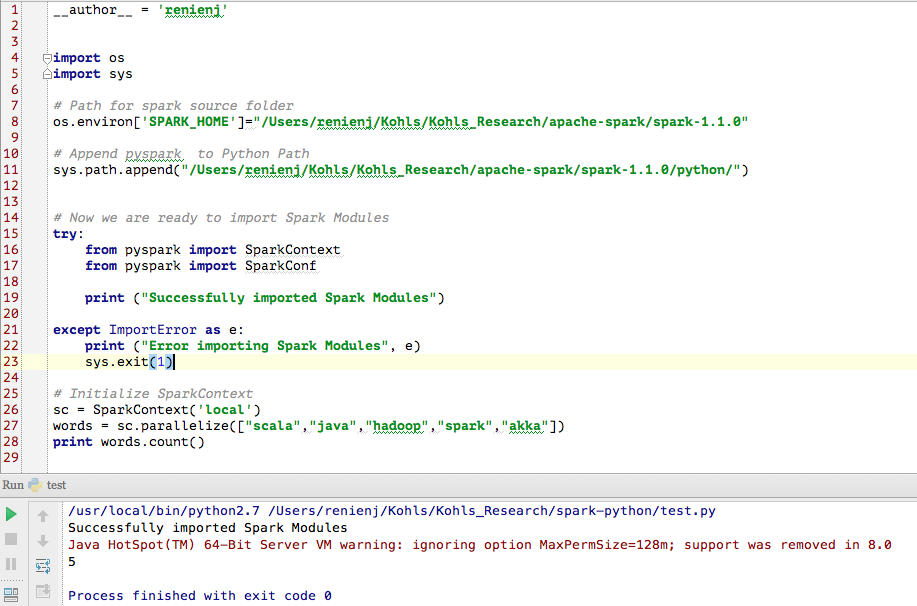

Explanations of all the PySpark RDD, DataFrame, and SQL examples in this project are available in the Apache PySpark tutorial. All of these examples are coded in Python and tested in our development environment.

PySpark: what is it, and who uses it?

With PySpark, you can write Python and SQL-like commands to manipulate and analyze data in a distributed processing environment. Data scientists use PySpark to manipulate data, build machine learning pipelines, and tune models.

PySpark SQL simplifies working with structured and semi-structured data in the Spark ecosystem. This article covers the fundamentals of PySpark SQL, including DataFrames and SQL queries, with practical code examples to illustrate their usage. In this PySpark tutorial, you'll learn the fundamentals of Spark, how to create distributed data processing pipelines, and how to use its versatile libraries to transform and analyze large datasets efficiently.

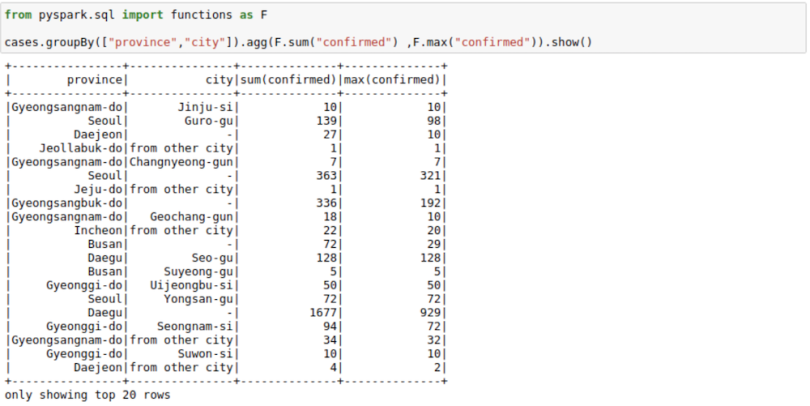

PySpark is a tool created by the Apache Spark community for using Python with Spark. It lets you work with RDDs (Resilient Distributed Datasets) from Python, and it provides the PySpark shell, which links the Python APIs to Spark Core and initializes a SparkContext. Spark is the engine that performs the cluster computing, while PySpark is the Python library for using Spark.

- Core concepts: learn the basics of PySpark, including Resilient Distributed Datasets (RDDs), DataFrames, and Spark SQL.
- Data processing: discover how to transform, filter, and aggregate large datasets with PySpark's APIs.
- Streaming: process real-time data with Spark Streaming and Structured Streaming.

In this tutorial, we show how to use PySpark for big data processing and analytics. Whether you are a data engineer looking to dive into distributed computing or a data scientist who wants to use Python's simplicity to process big data, this tutorial has you covered. It walks through several core operations in PySpark SQL, including selecting and filtering data, performing joins, aggregating data, working with dates, and applying window functions.
