Manually create a PySpark DataFrame.

`pyspark.sql.functions.when` takes a boolean Column as its condition. When using PySpark, it's often useful to think "Column expression" when you read "Column". Logical operations on PySpark columns use the bitwise operators: `&` for and, `|` for or, `~` for not. When combining these with comparison operators such as `<`, parentheses are often needed, because in Python the bitwise operators bind more tightly than comparisons.

I have a PySpark DataFrame consisting of one column, called json, where each row is a unicode string of JSON. I'd like to parse each row and return a new DataFrame where each row is the parsed JSON.

In PySpark, multiple conditions in `when` can be built using `&` (for and) and `|` (for or). Note: it is important to enclose in parentheses `()` every expression that is combined to form the condition.

I just did something perhaps similar to what you need, dropping duplicates in PySpark. The situation is this: I have 2 DataFrames (coming from 2 files) which are exactly the same except for 2 columns, file date (the file date extracted from the file name) and data date (the row date stamp).

PySpark: how to append DataFrames in a for loop.

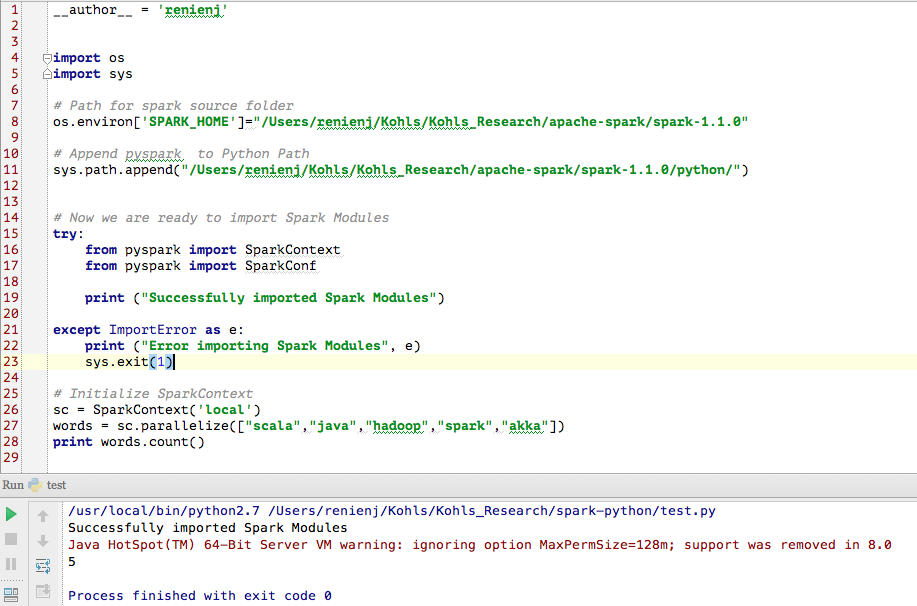

Alternatively, you can use the pyspark shell, where `spark` (the SparkSession) as well as `sc` (the SparkContext) are predefined (see also "NameError: name 'spark' is not defined, how to solve?").

How to find the count of null and NaN values for each column in a PySpark DataFrame efficiently?

Compare two DataFrames in PySpark.

PySpark: TypeError: col should be Column. There is no such problem with any other of the keys in the dict, i.e. "value". I really do not understand the problem; do I have to assume that there are inconsistencies in the data? If yes, can you recommend a way to check for or even dodge them? Edit: khalid had a good idea to pre-define the schema.
