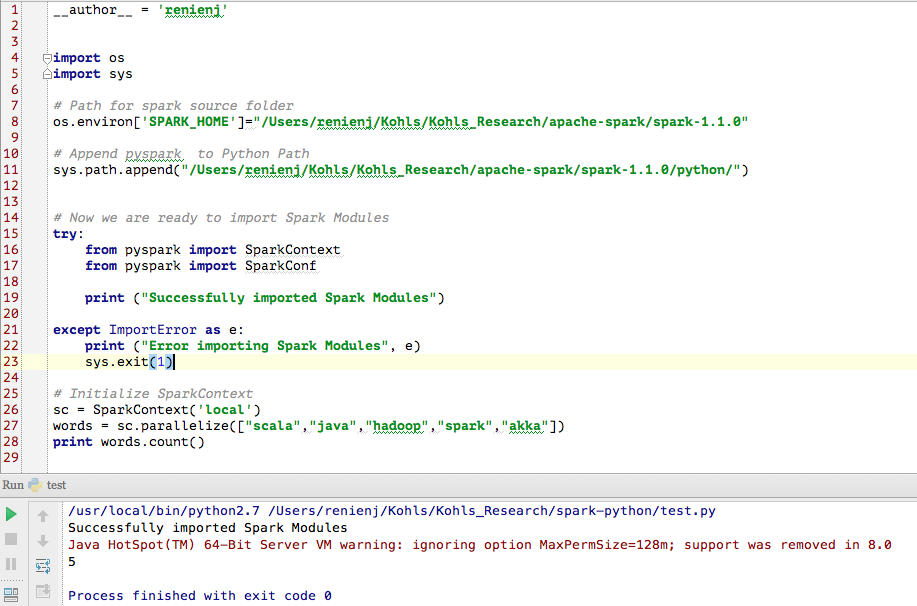

Pyspark Tutorial For Beginners With Examples Spark By 57 Off

In this PySpark tutorial, you'll learn the fundamentals of Spark, how to create distributed data processing pipelines, and how to use its libraries to transform and analyze large datasets efficiently, with examples.

What is PySpark? PySpark is a tool created by the Apache Spark community for using Python with Spark. It lets you work with RDDs (Resilient Distributed Datasets) in Python. It also provides the PySpark shell, which links the Python APIs with Spark core and initializes a SparkContext.

PySpark is the Python API for Apache Spark. With PySpark, you can write Python and SQL-like commands to manipulate and analyze data in a distributed processing environment. Data scientists use it to manipulate data, build machine learning pipelines, and tune models. Because it exposes Spark's distributed computation framework through Python, it makes big data work easier in a language many data scientists and engineers already know. With PySpark, you can create and manage Spark jobs and perform complex data transformations and analyses.

This tutorial walks through each step, from installing and setting up PySpark to exploring features such as RDDs and PySpark DataFrames, using practical code examples you can try out yourself. So let's get started! Before jumping into the PySpark specifics, let's understand at a high level how Spark works: Spark uses a master-worker architecture, in which a driver program coordinates the job and executors on worker nodes run the individual tasks.

Learn how to use PySpark's features for data transformation and analysis in both batch and real-time data processing. Our hands-on approach covers everything from setting up your PySpark environment to working with its core components, such as RDDs and DataFrames.

What is PySpark? In this article, we will see the basics of PySpark, its benefits, and how you can get started with it. PySpark is the Python API for Apache Spark, a big data processing framework designed for large-scale data processing and machine learning tasks. With PySpark, you can write Spark applications using Python.

PySpark is a Python library rather than a cloud platform in its own right, although managed cloud services such as Databricks offer hosted Spark clusters on which it runs. The term refers to using the Python programming language with Spark clusters to compute over big data files, and it is closely associated with big data. As the Python interface to the Apache Spark data processing engine, PySpark has changed how we handle big data. In this tutorial, we'll explore PySpark with Databricks.

