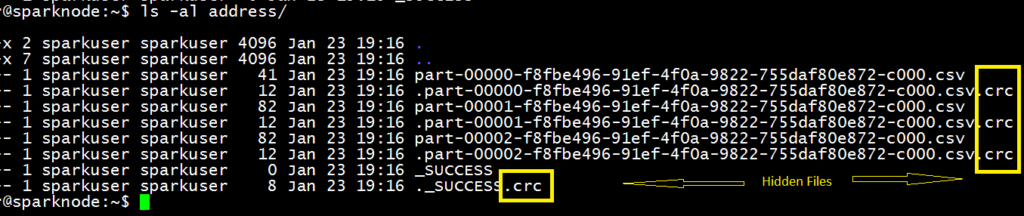

Spark Write Dataframe Into Single Csv File Merge Multiple Part Files

Manually create a PySpark DataFrame.

I come from a pandas background and am used to reading data from CSV files into a DataFrame and then changing the column names to something useful with the simple command: df.columns =.

I have a PySpark DataFrame consisting of one column, called json, where each row is a Unicode string of JSON. I'd like to parse each row and return a new DataFrame where each row is the parsed JSON. A related question: PySpark: explode JSON in column to multiple columns.

In PySpark, multiple conditions can be built using & (for and) and | (for or). Note: in PySpark it is important to enclose in parentheses () every expression that combines to form the condition.

With a PySpark DataFrame, how do you do the equivalent of pandas df['col'].unique()? I want to list out all the unique values in a PySpark DataFrame column. Not the SQL type way (registertemplate the.

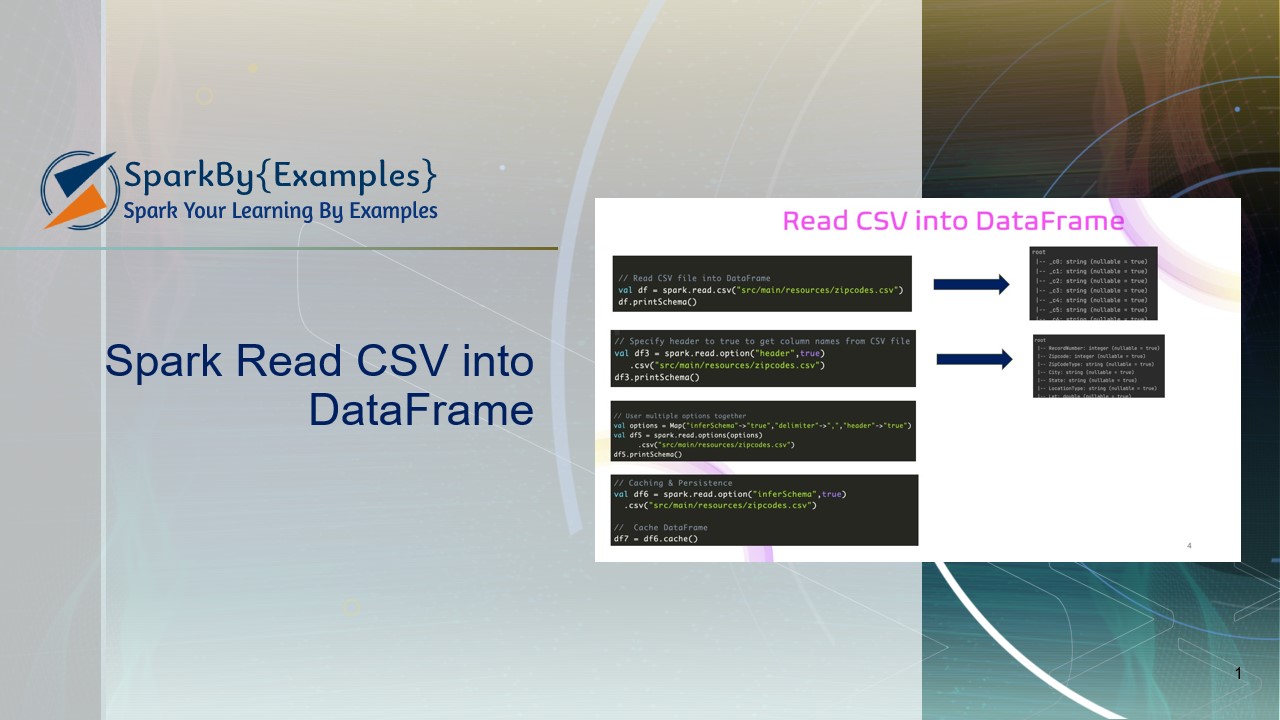

Spark Read Csv File Into Dataframe Spark By Examples

PySpark aggregation on multiple columns.

Alternatively, you can use the pyspark shell, where spark (the Spark session) as well as sc (the Spark context) are predefined (see also "NameError: name 'spark' is not defined, how to solve?").

I show it here: "Spark (PySpark) groupBy misordering first element on collect_list". This method is especially useful on large DataFrames, but a large number of partitions may be needed if you are short on driver memory.

PySpark: display a Spark DataFrame in a table format.

Pyspark Write To Csv File Spark By Examples