How to Run Open Source LLMs Locally Using Ollama

Ollama is a tool for running open-weights large language models locally. It is quick to install: pull a model and start prompting from your terminal or command prompt. This tutorial should serve as a good reference for anything you wish to do with Ollama, so bookmark it and let's get started.

Learn how to install Ollama and run LLMs locally on your computer, with a complete setup guide for Mac, Windows, and Linux and step-by-step instructions. Running large language models on your local machine gives you complete control over your AI workflows.

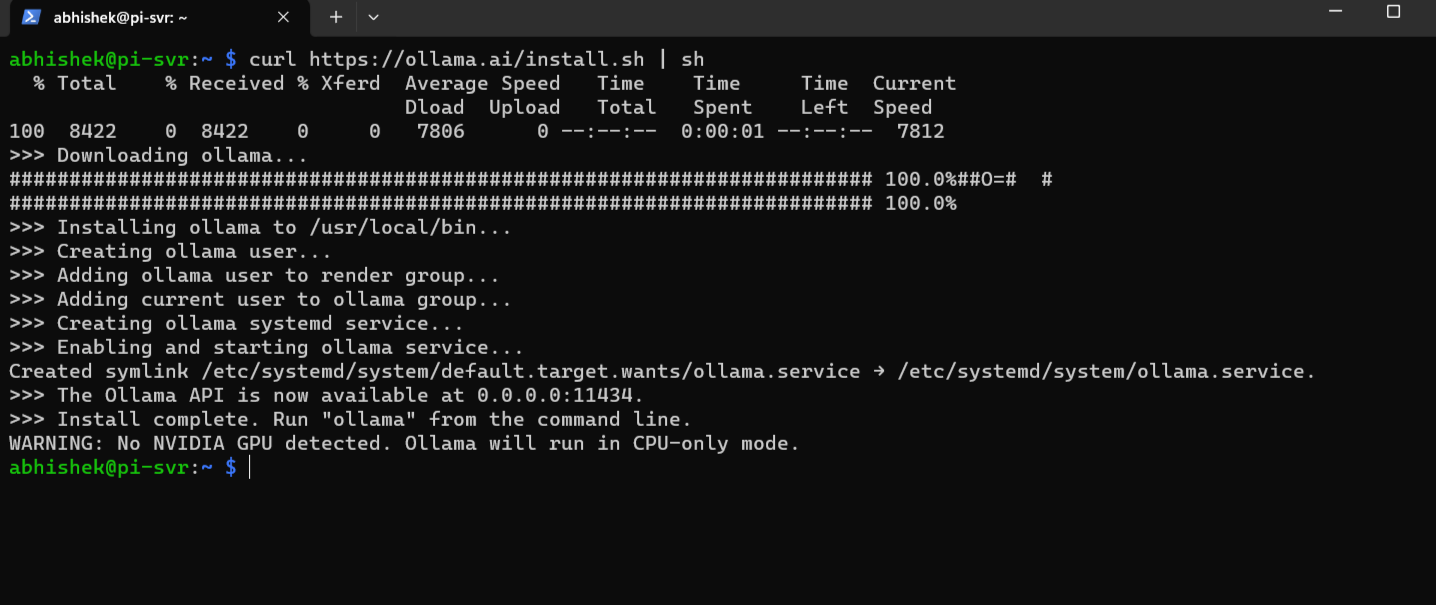

Run LLMs Locally: 7 Simple Methods (DataCamp)

**Run powerful LLMs locally with Ollama!** This video provides a comprehensive tutorial on installing and using Ollama, a free and open source tool that lets […].

Discover how to run large language models (LLMs) locally with Ollama. This guide walks you through setup, model selection, and steps to leverage AI on your own machine.

We'll explore how to download Ollama and interact with two open source LLMs: Llama 2, a text-based model from Meta, and LLaVA, a multimodal model that can handle both text and images. To download Ollama, go to the official Ollama website and click the download button.

Installing Ollama is quick and straightforward. It works on macOS, Windows, and Linux. Go to the Ollama download page and choose the right version for your operating system. After installation, open a terminal and test it by running the version check (typically `ollama --version`). If the version number appears, Ollama is successfully installed and ready to use.
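The installation check described above can also be scripted. The following is a minimal Python sketch, assuming the CLI is installed under the name `ollama` on your PATH and accepts a `--version` flag; the helper name `ollama_version` is hypothetical and not part of Ollama itself.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(binary: str = "ollama") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if it is unavailable.

    `binary` is assumed to be the name of the Ollama CLI on PATH.
    """
    # shutil.which returns None when the executable is not on PATH.
    path = shutil.which(binary)
    if path is None:
        return None
    try:
        result = subprocess.run(
            [path, "--version"],
            capture_output=True,
            text=True,
            timeout=10,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    # Fall back to stderr in case nothing was written to stdout.
    output = result.stdout.strip() or result.stderr.strip()
    return output or None


if __name__ == "__main__":
    version = ollama_version()
    if version is None:
        print("Ollama was not found on PATH; check the installation.")
    else:
        print(f"Ollama is installed: {version}")
```

Returning `None` instead of raising keeps the check usable as a precondition in setup scripts, where a missing binary is an expected outcome and not an error.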

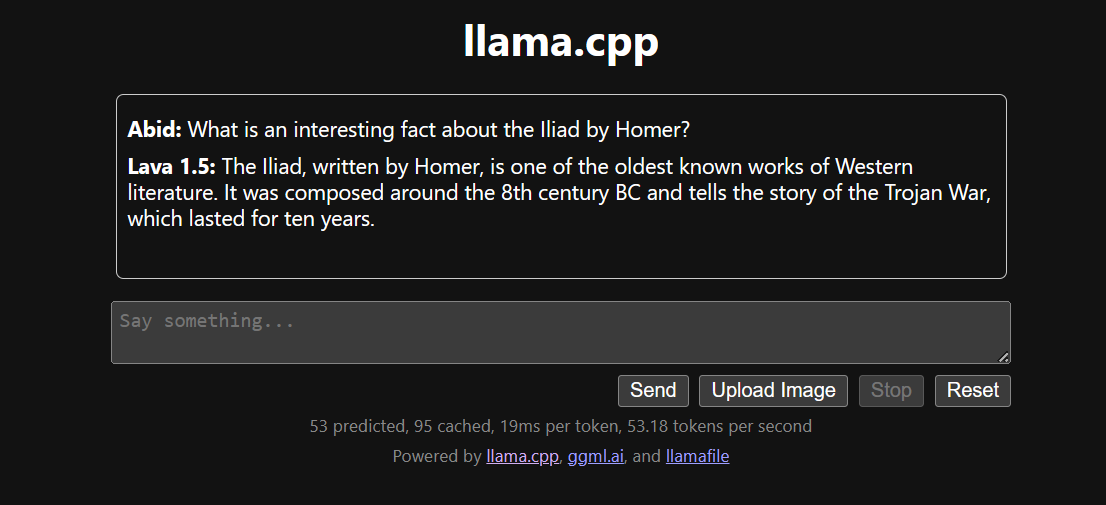

Ollama Tutorial: Run LLMs Locally, Install and Configure Ollama (Eroppa)

Follow these steps to download and run models locally. Llama 3.3 is a large model (~40 GB), so ensure sufficient storage before proceeding. Ollama allows users to pull, initialize, and run large language models directly from its repository or from locally stored models.

Ollama lets you run powerful large language models (LLMs) locally for free, giving you full control over your data and performance. This tutorial is a step-by-step guide to installing Ollama, running models like Llama 2, using the built-in HTTP API, and even creating custom models tailored to your needs.

We aim to provide a comprehensive walkthrough for running LLMs locally. This is a good first step towards mastering the local operation of LLMs on your devices, and it lets you power AI-based applications independently, without needing third-party APIs.

Ollama changes how developers and AI enthusiasts interact with large language models by eliminating the need for expensive cloud services and giving you control over the privacy of your data. In this comprehensive guide, you'll learn everything about Ollama, from installation to advanced usage, and why […].
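As a rough illustration of the storage check and the built-in HTTP API mentioned above, here is a minimal Python sketch using only the standard library. It assumes a local Ollama server listening at its default address `http://localhost:11434`, the `/api/generate` endpoint with a non-streaming request, and a model that has already been pulled. The helper names (`has_free_space`, `build_generate_request`, `generate`) are hypothetical, and the model name used in the usage note is only an example.

```python
import json
import shutil
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434"


def has_free_space(path: str, required_gb: float) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3


def build_generate_request(
    model: str, prompt: str, base_url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /api/generate endpoint."""
    # "stream": False asks for a single JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text.

    Requires a running Ollama server and a previously pulled model.
    """
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # In non-streaming mode the generated text is expected under "response".
    return body["response"]
```

With the server running, a call such as `generate("llama2", "Why is the sky blue?")` would return the model's answer as a string, and `has_free_space(".", 40)` gives a quick pre-download check for a model of roughly 40 GB. Separating request construction from sending makes the payload easy to inspect without a live server.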

Ollama Tutorial for Beginners: Run LLMs Locally With Ease

Run LLMs Locally Using Ollama: Private Local LLM Ollama Tutorial (Eroppa)