Simple Linear Regression Pdf Errors And Residuals Regression Analysis

Gauss-Markov theorem: $b_0$, $b_1$ and $\hat{y}_i$ have minimum variance among all unbiased linear estimators. The variance of the slope estimator is

$$\operatorname{Var}(b_1) = \frac{\sigma^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2},$$

and similar inference holds for $\beta_0$. We are often interested in estimating the mean response at a particular value $x_h$, i.e., the parameter of interest is $E(Y_h) = \beta_0 + \beta_1 x_h$. Its unbiased estimator is $\hat{y}_h = b_0 + b_1 x_h$.

Once exploratory data analysis (EDA) suggests that a linear model is a plausible description of the data, we perform the linear regression analysis and then further verify the model assumptions by checking the residuals.
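
As a minimal sketch of the quantities above, assuming plain Python lists of paired observations and made-up illustrative data, the hypothetical helpers below compute the least-squares estimates $b_0$ and $b_1$, the estimated slope variance, and the point estimate $\hat{y}_h$ of the mean response. Because $\sigma^2$ is unknown in practice, the sketch plugs in the usual estimate $s^2 = \mathrm{SSE}/(n-2)$; that substitution is an assumption not spelled out in the text above.

```python
def fit_simple_ols(x, y):
    """Least-squares estimates for y = b0 + b1 * x; also returns x_bar and Sxx."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1, x_bar, sxx


def estimated_var_b1(x, y):
    """Estimate Var(b1) = sigma^2 / Sxx, plugging in s^2 = SSE / (n - 2) for sigma^2."""
    b0, b1, _, sxx = fit_simple_ols(x, y)
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    s2 = sse / (len(x) - 2)
    return s2 / sxx


def mean_response(x, y, x_h):
    """Point estimate y_hat_h = b0 + b1 * x_h of the mean response E(Y_h)."""
    b0, b1, _, _ = fit_simple_ols(x, y)
    return b0 + b1 * x_h


# Illustrative (made-up) data.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b0, b1, _, _ = fit_simple_ols(x, y)
print(b0, b1)                      # roughly 0.05 and 1.99
print(estimated_var_b1(x, y))      # estimated variance of the slope estimator
print(mean_response(x, y, x_h=6))  # estimated mean response at x_h = 6
```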

Linear regression models: the response is modeled as a linear function of the predictors (more precisely, linear in the coefficients). Least-squares regression chooses the line (or curve) that minimizes the sum of squared vertical distances between the observed points and the model. The length of each vertical segment is called the residual, also referred to as the modeling error or simply the error.

1) The document presents methods for simple linear regression, including examining the correlation between variables, fitting a linear regression model, and assessing whether the data meet the model assumptions.
2) Box-Cox transformations are applied to both the response and predictor variables to better meet the normality assumptions.

One way of thinking about any regression model is that it involves a systematic component and an error component. If the simple regression model is correct about the systematic component, then the errors will appear to be random as a function of $x$.

The simple linear regression model

The simplest deterministic mathematical relationship between two variables $x$ and $y$ is a linear relationship: $y = \beta_0 + \beta_1 x$. The objective of this section is to develop an equivalent linear probabilistic model.
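
To make the systematic-plus-error decomposition concrete, here is a sketch that assumes the probabilistic model $Y = \beta_0 + \beta_1 x + \varepsilon$ with independent normal errors; the normal error distribution, the parameter values, and the function names are illustrative assumptions, not taken from the text. It simulates data, fits the least-squares line, and computes the residuals as signed vertical distances from each point to the line.

```python
import random


def simulate_linear_model(beta0, beta1, sigma, xs, seed=0):
    """Draw Y_i = beta0 + beta1 * x_i + eps_i with independent eps_i ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    return [beta0 + beta1 * x + rng.gauss(0.0, sigma) for x in xs]


def least_squares_line(xs, ys):
    """Return (b0, b1) minimizing the sum of squared vertical distances."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def vertical_residuals(xs, ys, b0, b1):
    """Residual e_i = y_i - (b0 + b1 * x_i): signed vertical distance to the line."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]


xs = [float(i) for i in range(20)]
ys = simulate_linear_model(beta0=3.0, beta1=0.5, sigma=1.0, xs=xs, seed=42)
b0, b1 = least_squares_line(xs, ys)
res = vertical_residuals(xs, ys, b0, b1)
print(b0, b1)  # estimates of the systematic component (near 3.0 and 0.5, not exact)
print(sum(res), sum(x * e for x, e in zip(xs, res)))  # both ~0 up to rounding
```

Because the least-squares estimates solve the normal equations, the residuals sum to zero and are uncorrelated with $x$ up to floating-point rounding, whatever noise was simulated; any remaining pattern against $x$ would point to a misspecified systematic component.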

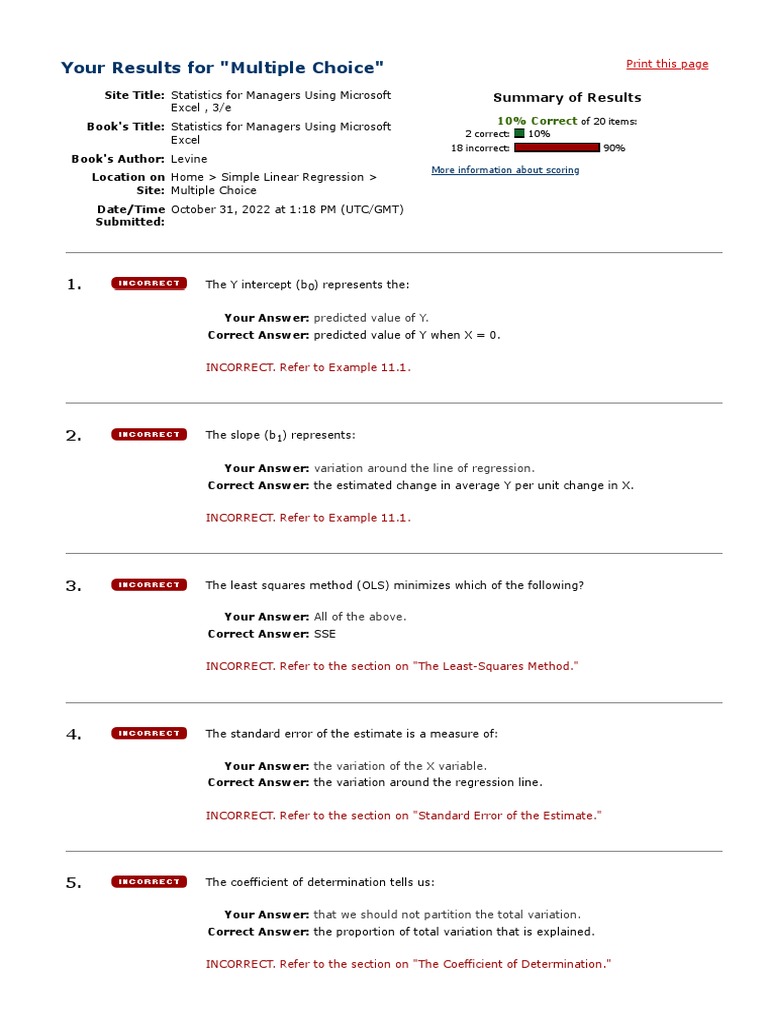

In contrast to the standard error of the regression, which measures fit in the units of $y$, the correlation coefficient is a relative (unitless) measure of how well the straight line fits. We could write down the familiar formula for a correlation coefficient, but we'll express it differently here.

It is now standard practice to examine the plot of the residuals against the fitted values to check whether the regression model is appropriate; patterns in this plot are used to detect violations of the assumptions.

To account for errors in both directions (in $x$ as well as in $y$), orthogonal regression applies the least-squares principle to perpendicular rather than vertical distances: it minimizes the sum of squared perpendicular distances between the observed data points and the line in the scatter diagram to obtain the estimates of the regression coefficients.

Simple linear regression finds the linear relationship between a dependent variable ($y$) and an independent variable ($x$). The least-squares method estimates the regression coefficients by minimizing the sum of squared errors between the actual and predicted $y$ values.
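
The fit measures and the two line-fitting criteria discussed above can be sketched as follows. This assumes plain Python lists, made-up data, and hypothetical function names; the orthogonal fit uses the standard closed-form slope for minimizing squared perpendicular distances (which treats the error variances in $x$ and $y$ as equal), and that choice of formula is our assumption about how the orthogonal estimates would be computed.

```python
import math


def fit_summary(xs, ys):
    """OLS fit plus absolute (s) and relative (r, R^2) measures of fit."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    fitted = [b0 + b1 * x for x in xs]
    residuals = [y - f for y, f in zip(ys, fitted)]
    sse = sum(e * e for e in residuals)
    return {
        "b0": b0,
        "b1": b1,
        # Standard error of the regression: absolute fit, in the units of y.
        "s": math.sqrt(sse / (n - 2)),
        # Correlation coefficient: unitless, relative measure of fit.
        "r": sxy / math.sqrt(sxx * syy),
        # Coefficient of determination; equals r**2 in simple linear regression.
        "r_squared": 1.0 - sse / syy,
        # (fitted, residual) pairs for a residuals-versus-fitted plot.
        "resid_vs_fitted": list(zip(fitted, residuals)),
    }


def orthogonal_fit(xs, ys):
    """Orthogonal regression: minimize squared perpendicular distances to the line."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    if sxy == 0:
        raise ValueError("slope is not uniquely defined when Sxy == 0")
    b1 = (syy - sxx + math.sqrt((syy - sxx) ** 2 + 4 * sxy ** 2)) / (2 * sxy)
    return y_bar - b1 * x_bar, b1


xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
summary = fit_summary(xs, ys)
print(summary["s"], summary["r"], summary["r_squared"])
print(orthogonal_fit(xs, ys))  # close to the OLS line here because the scatter is tight
```

The `resid_vs_fitted` pairs can be handed to any plotting library to draw the residuals-versus-fitted plot; under the model assumptions it should look like a horizontal band around zero with no trend or funnel shape.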
