Sourabh Bajaj: Big Data Processing With Apache Beam

Speaker: Sourabh Bajaj (sb2nov), cofounder …, previously engineering at … Brain, …, and …. Interests: distributed systems, machine learning, and statistics.

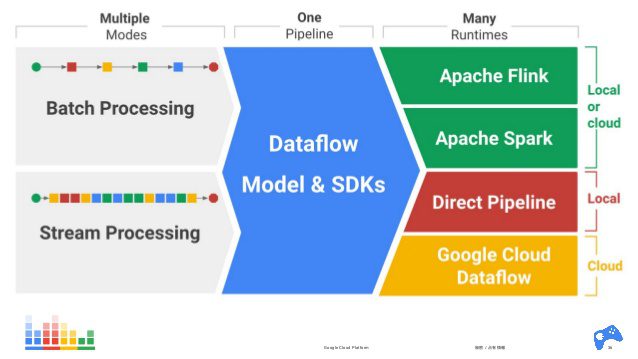

In this talk, we present the new Python SDK for Apache Beam, a parallel programming model that allows one to implement batch and streaming data processing jobs that can run on a variety of execution engines like Apache Spark and Google Cloud Dataflow. We will use examples to discuss …

Apache Beam is a unified model for defining both batch and streaming data-parallel processing pipelines, together with a set of language-specific SDKs for constructing pipelines and runners for executing them on distributed processing backends such as Apache Spark, Apache Flink, and Google Cloud Dataflow.
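To make the pipeline model concrete, here is a minimal pure-Python sketch of the classic word-count pipeline expressed as Beam-style stages: an element-wise step (in Beam, `FlatMap`/`ParDo`), a grouping step (`GroupByKey`), and a per-key combine (`CombinePerKey`). It is a single-process toy using only the standard library; the helper names `flat_map`, `group_by_key`, `combine_per_key`, and `word_count` are hypothetical stand-ins and are not the Apache Beam API.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple, TypeVar

K = TypeVar("K")
V = TypeVar("V")
T = TypeVar("T")
U = TypeVar("U")


def flat_map(fn: Callable[[T], Iterable[U]], elements: Iterable[T]) -> List[U]:
    """Hypothetical stand-in for an element-wise transform (Beam's FlatMap/ParDo):
    each input element may produce zero or more output elements."""
    return [out for element in elements for out in fn(element)]


def group_by_key(pairs: Iterable[Tuple[K, V]]) -> Dict[K, List[V]]:
    """Hypothetical stand-in for GroupByKey: collect all values that share a key."""
    groups: Dict[K, List[V]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def combine_per_key(fn: Callable[[List[V]], V], pairs: Iterable[Tuple[K, V]]) -> Dict[K, V]:
    """Hypothetical stand-in for CombinePerKey: group by key, then reduce each group."""
    return {key: fn(values) for key, values in group_by_key(pairs).items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Stage 1: split each line into lowercase words (one line -> many words).
    words = flat_map(lambda line: line.lower().split(), lines)
    # Stage 2: pair every word with a count of 1.
    pairs = flat_map(lambda word: [(word, 1)], words)
    # Stage 3: sum the counts for each word.
    return combine_per_key(sum, pairs)


if __name__ == "__main__":
    print(word_count(["the quick fox", "The lazy dog", "the end"]))
```

In the real Python SDK the same stages would be chained with the `|` operator using `beam.FlatMap`, `beam.Map`, and `beam.CombinePerKey(sum)` inside a `beam.Pipeline()`; the point of the model is that the runner, not the pipeline code, decides whether that graph executes locally or on a backend such as Spark, Flink, or Dataflow.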

Apache Beam is a unified programming model for expressing efficient and portable data processing pipelines.
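Part of what makes the model "unified" is that a streaming job can reuse the same per-key aggregation as a batch job by first assigning each timestamped element to a time window. The sketch below is a hypothetical, stdlib-only illustration of fixed (tumbling) windows, conceptually similar to Beam's `FixedWindows`; the `Event` shape and the function names are assumptions for this example, and it deliberately ignores watermarks, triggers, and late data, which a real runner has to handle.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape for this sketch: (timestamp_in_seconds, key, value).
Event = Tuple[float, str, int]


def fixed_window_start(timestamp: float, size: float) -> float:
    """Start of the fixed (tumbling) window of length `size` that contains `timestamp`."""
    return timestamp - (timestamp % size)


def windowed_sum(events: Iterable[Event], size: float) -> Dict[Tuple[float, str], int]:
    """Sum values per (window start, key): the same per-key combine a batch job
    would run, but scoped to time windows so it also makes sense on a stream."""
    totals: Dict[Tuple[float, str], int] = defaultdict(int)
    for timestamp, key, value in events:
        totals[(fixed_window_start(timestamp, size), key)] += value
    return dict(totals)


if __name__ == "__main__":
    clicks = [(3.0, "home", 1), (59.0, "home", 1), (61.0, "home", 1), (75.0, "cart", 1)]
    print(windowed_sum(clicks, size=60.0))
```

With a window longer than the whole input's time span, every element lands in a single window, which loosely mirrors how a bounded batch input is processed in Beam's default global window.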