Spark Program To Load A Csv File Into A Dataset Using Java 8

For JSON, if your text file contains one JSON object per line, you can use `sqlContext.jsonFile(path)` to let Spark SQL load it as a SchemaRDD (the schema is inferred automatically). This is the older Spark 1.x API; in Spark 2.x and later the equivalent call is `spark.read().json(path)`, which returns a DataFrame. For CSV, Spark SQL provides `spark.read().csv("file name")` to read a file or a directory of files in CSV format into a Spark DataFrame, and `dataframe.write().csv("path")` to write a DataFrame out as CSV.
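The read and write calls named above can be sketched in Java roughly as follows. This is a minimal sketch, not the original post's program: the class name `CsvRoundTrip`, the helper names, and the paths in `main` are hypothetical, and it assumes `spark-sql` is on the classpath and that the input file has a header row.

```java
import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SaveMode;
import org.apache.spark.sql.SparkSession;

public class CsvRoundTrip {

    // Reads a CSV file (or a directory of CSV files) into a DataFrame.
    // Assumption: the first line of each file is a header row.
    static Dataset<Row> readCsv(SparkSession spark, String inputPath) {
        return spark.read()
                .option("header", "true")
                .csv(inputPath);
    }

    // Writes the DataFrame as CSV. Spark creates a directory at outputPath
    // containing one part file per partition, not a single file.
    static void writeCsv(Dataset<Row> df, String outputPath) {
        df.write()
                .mode(SaveMode.Overwrite) // replace the directory if it already exists
                .option("header", "true")
                .csv(outputPath);
    }

    public static void main(String[] args) {
        SparkSession spark = SparkSession.builder()
                .appName("CsvRoundTrip")
                .master("local[*]") // local mode, for experimentation only
                .getOrCreate();

        // Hypothetical paths; replace with your own.
        Dataset<Row> df = readCsv(spark, "data/input.csv");
        df.show();
        writeCsv(df, "data/output");

        spark.stop();
    }
}
```

Without `inferSchema` or an explicit schema, every column is read as a string, which is usually fine for a plain copy like this one.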

These examples demonstrate how easy it is to load a CSV file into a Spark DataFrame using various languages. Key options like `header` and `inferSchema` help manage the structure and types of the data being read. Learn how to effectively parse CSV files into DataFrames or Datasets in Apache Spark with Java, in a step-by-step guide with code snippets. In this post, we will look at a Spark (2.3.0) program to load a CSV file into a Dataset using Java 8. Please go through the below post before going through this post. PySpark provides `csv("path")` for reading a CSV file into a Spark DataFrame and `dataframeObj.write.csv("path")` for saving or writing to a CSV file.

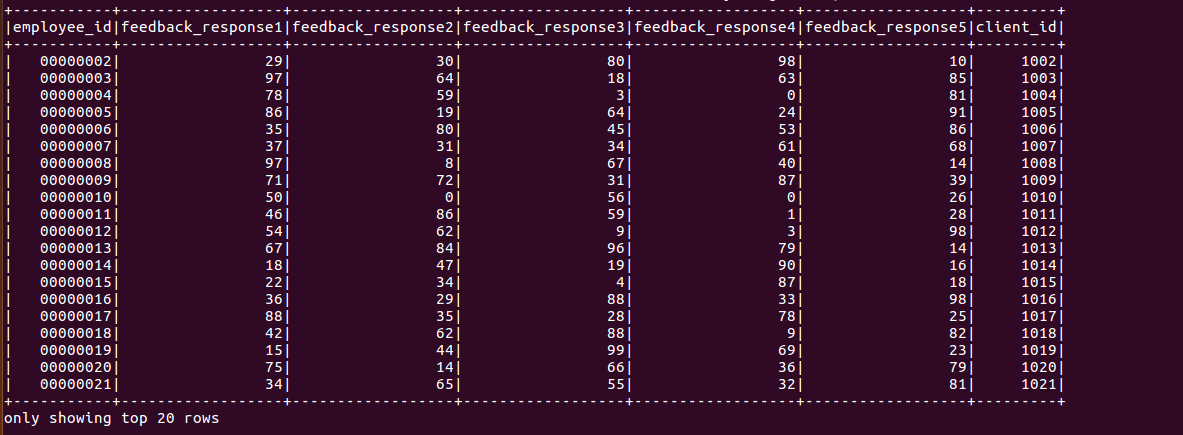

The program has three steps: get a Spark session, read the CSV file, and show the content of the DataFrame. In the code, the CSV ingestion into a DataFrame is the processing code, and `main()` is the entry point to the application. This is a really basic example, but I needed one to start, no? The next episodes will certainly be a bit more complex. More resources: loading CSV in Spark.

Reading a CSV file: we begin by reading a CSV file into a DataFrame using Apache Spark's DataFrame API. CSV files are a common format for storing tabular data and are widely used.

To read CSV in Spark, first create a DataFrameReader and set a number of options: `df = spark.read.format("csv").option("header", "true").load(filePath)`. Then choose to read the CSV file using either `inferSchema` or a custom schema. With `inferSchema`: `df = spark.read.format("csv").option("inferSchema", "true").load(filePath)`. These snippets use the Scala/Python form; in Java, `read` is a method call, `spark.read()`.

We are going to start Apache Spark from Java in local standalone mode. Use Java 8 or 11 to avoid Spark compatibility issues. To have Spark in the project, we need Spark Core and Spark SQL: Spark Core provides the underlying engine, and Spark SQL provides `SparkSession` and the DataFrame operations. Both are declared as dependencies in the project's pom.
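The choice between `inferSchema` and a custom schema described above can be sketched in Java as follows. The class name `CsvSchemaOptions`, the column names `id` and `name`, and the path in `main` are assumptions made for illustration; they do not come from the original post.

```java
import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SparkSession;
import org.apache.spark.sql.types.DataTypes;
import org.apache.spark.sql.types.StructType;

public class CsvSchemaOptions {

    // Option 1: let Spark scan the data and guess each column's type.
    // This costs an extra pass over the file.
    static Dataset<Row> readWithInferredSchema(SparkSession spark, String path) {
        return spark.read()
                .format("csv")
                .option("header", "true")
                .option("inferSchema", "true")
                .load(path);
    }

    // Option 2: declare the schema up front, so no inference pass is needed.
    // The columns "id" and "name" are hypothetical.
    static Dataset<Row> readWithCustomSchema(SparkSession spark, String path) {
        StructType schema = new StructType()
                .add("id", DataTypes.IntegerType, true)
                .add("name", DataTypes.StringType, true);
        return spark.read()
                .format("csv")
                .option("header", "true")
                .schema(schema)
                .load(path);
    }

    public static void main(String[] args) {
        SparkSession spark = SparkSession.builder()
                .appName("CsvSchemaOptions")
                .master("local[*]") // local mode, as in the text above
                .getOrCreate();

        String path = "data/people.csv"; // hypothetical path
        readWithInferredSchema(spark, path).printSchema();
        readWithCustomSchema(spark, path).printSchema();

        spark.stop();
    }
}
```

An explicit schema tends to be the safer choice for recurring jobs, since inferred types can change when the data changes; inference is convenient for a first look at an unfamiliar file.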

Use Spark's DataFrame API to load data from a CSV file into a DataFrame; this makes data manipulation easier. The load needs `import org.apache.spark.sql.Row;` and a reader chain ending in `.option("header", "true").csv("path to your data.csv");`. You can then apply several transformations such as filtering, selecting, and grouping data in the DataFrame.
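A rough sketch of the filtering, selecting, and grouping steps mentioned above might look like this. The class name `CsvTransformations`, the columns `age` and `city`, the threshold of 30, and the path in `main` are all hypothetical and chosen only to make the example concrete.

```java
import static org.apache.spark.sql.functions.col;

import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SparkSession;

public class CsvTransformations {

    // Loads a CSV file with a header row, inferring column types so that
    // "age" can be compared numerically.
    static Dataset<Row> loadPeople(SparkSession spark, String path) {
        return spark.read()
                .option("header", "true")
                .option("inferSchema", "true")
                .csv(path);
    }

    // Filter rows, keep only the needed columns, then group and count.
    // Assumes hypothetical columns "age" and "city" exist in the input.
    static Dataset<Row> countOver30ByCity(Dataset<Row> people) {
        return people
                .filter(col("age").gt(30)) // filtering
                .select("city", "age")     // selecting
                .groupBy("city")           // grouping
                .count();                  // result columns: "city", "count"
    }

    public static void main(String[] args) {
        SparkSession spark = SparkSession.builder()
                .appName("CsvTransformations")
                .master("local[*]")
                .getOrCreate();

        // Hypothetical path; replace with your own.
        Dataset<Row> people = loadPeople(spark, "data/people.csv");
        countOver30ByCity(people).show();

        spark.stop();
    }
}
```

The transformations are lazy: nothing is read or computed until an action such as `show()` or `count()` runs.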