Strategies for Deploying LLM-Driven AI Applications

Building AI applications on large language models (LLMs) opens up many possibilities, but moving from a basic prototype to a production-ready application presents a complex set of challenges. Robust AI infrastructure is essential for developing, deploying, and scaling LLM applications efficiently. Effective data management, model training, and deployment strategies help ensure performance, security, and compliance in AI workflows.

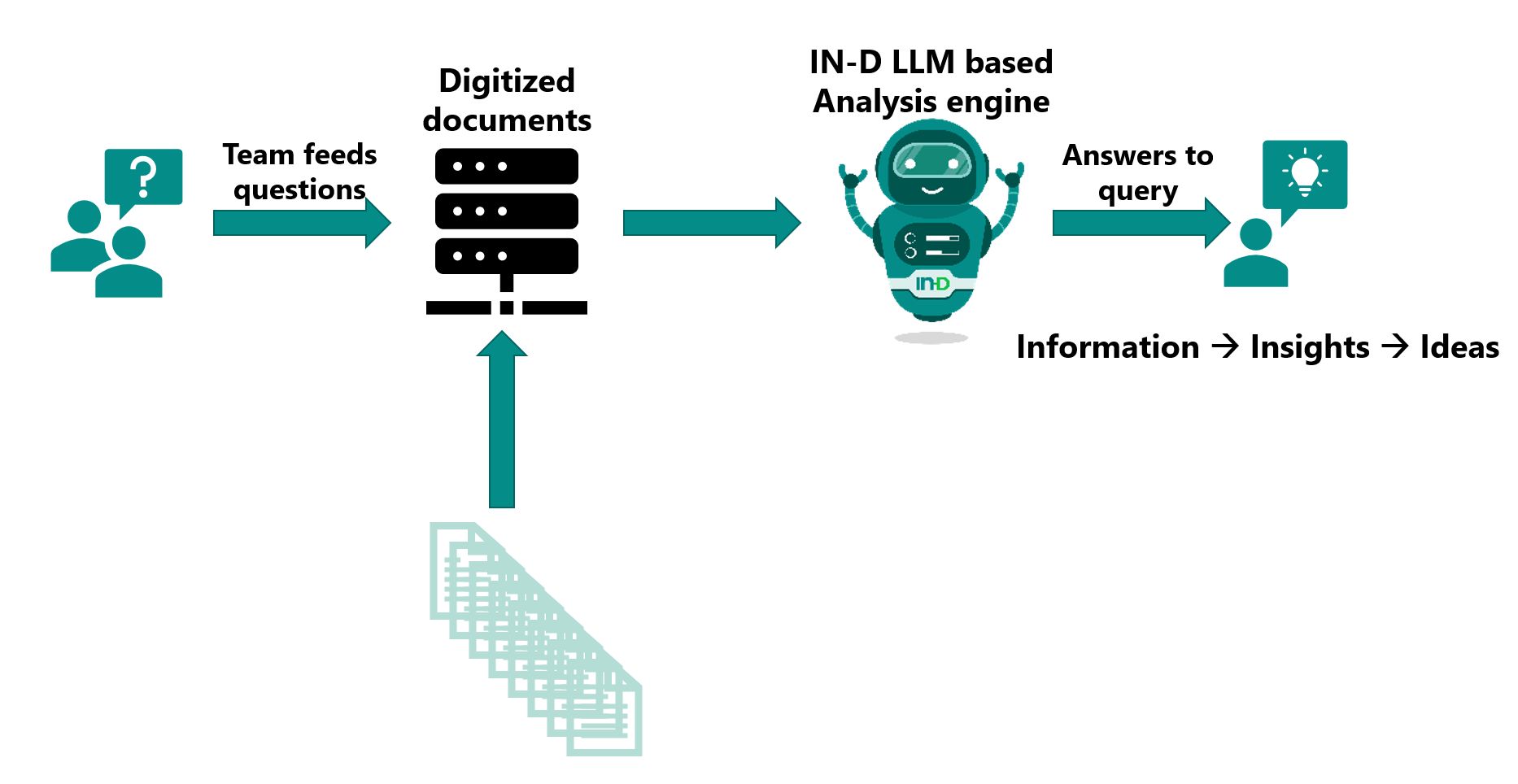

Query Documents With AI and LLM: Enhance Business Intelligence

In this post, we provide an overview of common multi-LLM applications. We then explore strategies for implementing effective multi-LLM routing in these applications and discuss the key factors that influence which strategy to select and how to implement it.

In this guide, we'll break down the key components, architecture, and optimization strategies required to design an efficient LLM system for real-world deployment, including infrastructure planning, prompt engineering, and inference optimization.

Businesses can minimize costs and maintain flexibility by understanding the different deployment methods. This blog covers three primary deployment options: using third-party API providers, self-hosting LLMs, and building custom models.

Learn how to deploy large language models efficiently and securely, with best practices for managing infrastructure, ensuring data privacy, and optimizing for cost without compromising performance. Large language models such as GPT-4, Claude, and Gemini are driving rapid technological change with their advanced language capabilities.
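To make the idea of multi-LLM routing more concrete, here is a minimal sketch of a rule-based router. It assumes three hypothetical model tiers (`small-model`, `medium-model`, `large-model`), illustrative word-count thresholds, and an assumed list of hint phrases; none of these come from the posts summarized above, and a production router would more likely rely on a trained classifier or measured cost and latency data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str       # hypothetical model label, not a real endpoint
    max_words: int   # longest prompt (in words) this tier should handle


# Ordered from cheapest to most capable; thresholds are illustrative only.
ROUTES = [
    Route(model="small-model", max_words=50),
    Route(model="medium-model", max_words=400),
    Route(model="large-model", max_words=10**9),
]

# Assumed hint phrases suggesting a prompt needs the most capable tier.
COMPLEX_HINTS = ("prove", "step by step", "analyze", "refactor")


def route_prompt(prompt: str) -> str:
    """Return the label of the model tier that should handle the prompt."""
    text = prompt.lower()
    # Rule 1: prompts that look like complex tasks go to the largest tier.
    if any(hint in text for hint in COMPLEX_HINTS):
        return ROUTES[-1].model
    # Rule 2: otherwise pick the cheapest tier whose length limit fits.
    word_count = len(text.split())
    for route in ROUTES:
        if word_count <= route.max_words:
            return route.model
    return ROUTES[-1].model


if __name__ == "__main__":
    print(route_prompt("What is the capital of France?"))
    print(route_prompt("Analyze this contract for termination clauses."))
```

The point of the design is that cheap requests never reach the expensive tier unless a rule sends them there, which is one way routing can help control cost.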

Securing AI LLM-Based Applications: Best Practices

Unlike off-the-shelf AI models, enterprise LLMs require deliberate planning: picking the right architecture, deciding between cloud and local deployment, integrating LLMOps tools, and managing ongoing fine-tuning. You don't need to reinvent the wheel.

In this article, we will explore the essential steps and considerations for successfully deploying LLM applications into production. From defining objectives and success metrics to implementing security measures and gathering user feedback, we will provide a comprehensive overview of the LLM deployment process.

With diligent preparation and an adaptive mindset, organizations can place themselves at the forefront of this AI wave and use LLMs to drive innovation. This article explores the challenges organizations face in deploying LLM-driven applications.
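As one small illustration of a security measure, the sketch below redacts obvious personal data from a prompt before it leaves the organization, for example on its way to a third-party API. The regular expressions and the `[EMAIL]` and `[PHONE]` placeholders are assumptions made for this example, and the patterns are deliberately loose; real deployments usually need a dedicated PII-detection step rather than two regexes.

```python
import re

# Loose, illustrative patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com or +1 (555) 123-4567."
    print(redact(prompt))  # Contact [EMAIL] or [PHONE].
```

Running redaction before the prompt is logged or sent means the downstream provider and any stored logs only ever see the placeholders.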
