Unveiling the Powerhouse Behind BERT, GPT, and Other Language Models

One of the most popular transformer-based models is BERT, short for "Bidirectional Encoder Representations from Transformers." More recently came GPT-4, created by OpenAI. These language models cater to different NLP needs: BERT excels at understanding context and semantics, while GPT-3 is strongest at generative tasks. The choice between them depends on the specific application and its requirements.

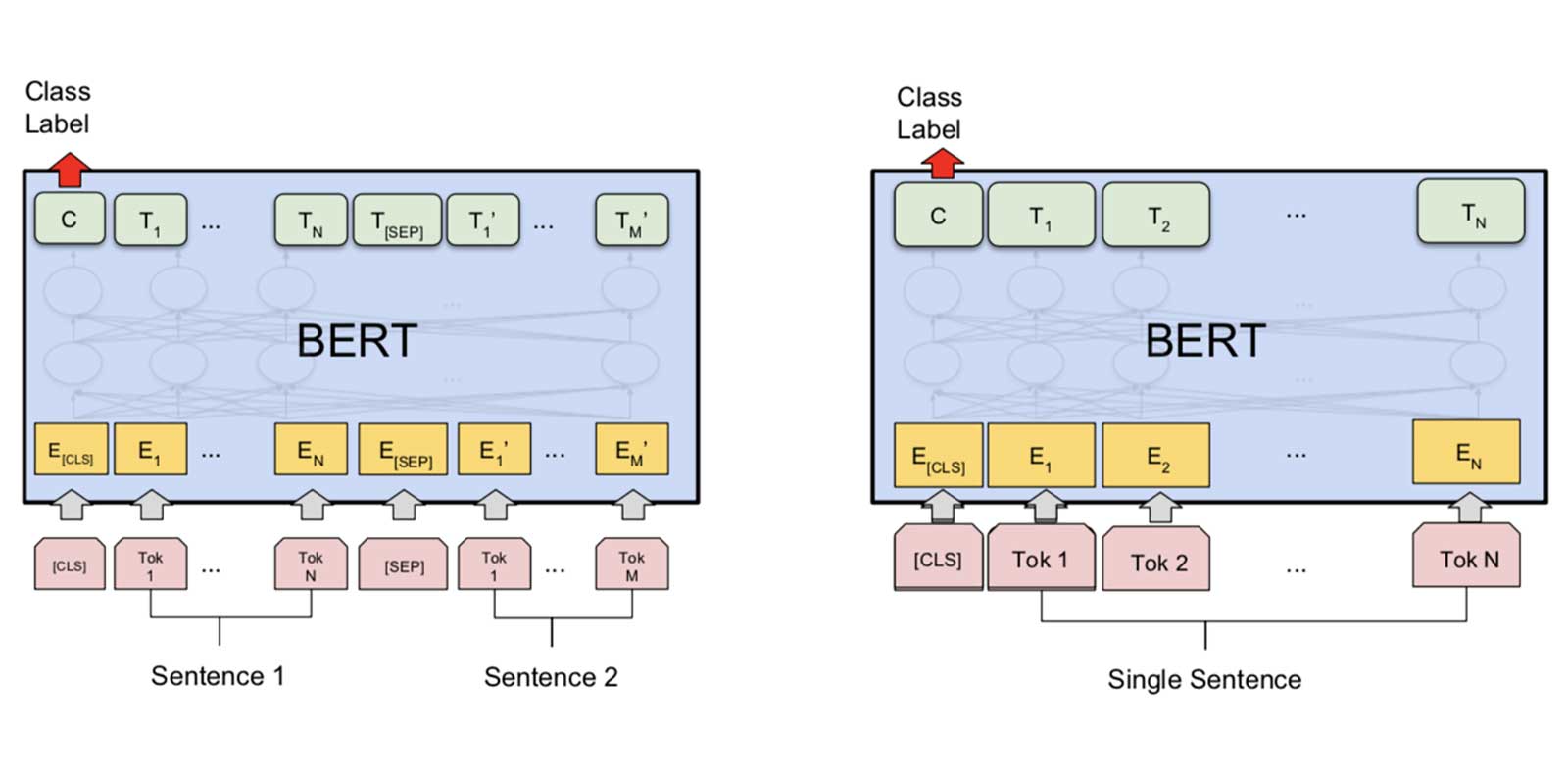

BERT vs. GPT-3: Comparing Two Powerhouse Language Models

In the world of natural language processing (NLP), two powerful models have taken the spotlight: BERT and GPT. Both are based on deep learning and use transformers, but they differ in how they are pretrained. GPT uses language modeling, predicting the next word in a sequence. BERT uses masked language modeling and next sentence prediction, which strengthens its understanding of language context.

At its core, BERT is a deep learning model based on the transformer architecture, introduced by Google in 2018. What sets BERT apart is its ability to understand the context of a word by looking at both the words before it and the words after it. This bidirectional context is key to its strong performance on language understanding tasks.

This overview, designed for data scientists and enthusiasts alike, explores the definition, significance, and real-world applications of these models, highlighting their role in the evolving landscape of NLP and LLMs.
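The two pretraining objectives contrasted above can be made concrete with a toy sketch. The function names `next_token_examples` and `masked_lm_example` are hypothetical, chosen only for illustration, and the code works on whole words with hand-picked mask positions. Real BERT pretraining operates on subword tokens, selects positions at random, and adds next sentence prediction, all of which are omitted here.

```python
from typing import Dict, List, Tuple

MASK = "[MASK]"  # placeholder symbol standing in for a hidden token


def next_token_examples(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """GPT-style objective: predict each token from the tokens before it."""
    # Each example pairs a left-only context with the token that follows it.
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(
    tokens: List[str], positions: List[int]
) -> Tuple[List[str], Dict[int, str]]:
    """BERT-style objective: hide chosen tokens, predict them from both sides."""
    masked = list(tokens)
    targets: Dict[int, str] = {}
    for p in positions:
        targets[p] = tokens[p]  # remember the original token as the label
        masked[p] = MASK        # the model only sees the placeholder
    return masked, targets


if __name__ == "__main__":
    sentence = ["the", "cat", "sat", "on", "the", "mat"]

    # Left-to-right: the context never includes words after the target.
    for context, target in next_token_examples(sentence):
        print(context, "->", target)

    # Masked: the words on both sides of the hidden position stay visible.
    print(masked_lm_example(sentence, [2]))
```

In the next-token examples the context grows one word at a time and never includes anything to the right of the target. In the masked example the hidden word is surrounded by visible words on both sides, which is the bidirectional context the paragraph describes.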

Transformers, the architecture behind GPT, BERT, and T5, revolutionized NLP with their neural network architecture. In the ever-evolving world of machine learning, new discoveries frequently push the boundaries of what is possible.

Large language models are trained on very large text corpora, in some cases trillions of words, which opens new possibilities for AI language understanding. These models are built on the transformer architecture, with models such as BERT and GPT-3 leading the way. They have diverse applications in NLP, from sentiment analysis to machine translation.

Google's BERT (Bidirectional Encoder Representations from Transformers) revolutionized natural language processing. Trained on massive amounts of data, BERT brought an unprecedented level of contextual understanding and paved the way for more sophisticated models that can comprehend textual nuances and provide meaningful insights.

BERT and GPT are two significant models in natural language processing, each with its own advantages and challenges. BERT stands out for its bidirectional design, which enables a deeper understanding of context by considering both preceding and following words.
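One way to picture the difference between a bidirectional design and a left-to-right one is through the attention mask, which records the positions each token is allowed to look at. The sketch below is illustrative only: `attention_mask` is a hypothetical helper, not part of any library, and it builds plain nested lists rather than the tensors a real model would use.

```python
from typing import List


def attention_mask(n: int, bidirectional: bool) -> List[List[int]]:
    """Return an n x n mask; row i has 1 where token i may attend to token j."""
    return [
        [1 if (bidirectional or j <= i) else 0 for j in range(n)]
        for i in range(n)
    ]


if __name__ == "__main__":
    n = 4

    print("Bidirectional (BERT-style): every token sees every position")
    for row in attention_mask(n, bidirectional=True):
        print(row)

    print("Causal (GPT-style): each token sees itself and earlier positions")
    for row in attention_mask(n, bidirectional=False):
        print(row)
```

The bidirectional mask is filled entirely with ones, so a word's representation can draw on the words after it as well as those before it. The causal mask is lower triangular, which is what lets a GPT-style model generate text one token at a time without looking ahead.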
