Spark Read and Write MySQL Database Table - Spark By Examples

In this article, I will cover step-by-step instructions on how to connect to a MySQL database, read a table into a PySpark DataFrame, and write the DataFrame back to a MySQL table.

What is the correct method to insert records into a MySQL table from PySpark? Use a Spark DataFrame instead of a pandas DataFrame, because `.write` is available only on Spark DataFrames. The write is then configured with JDBC options such as `url='jdbc:mysql://localhost/database_name'`, `driver='com.mysql.jdbc.Driver'`, `dbtable='destinationtablename'`, and `user='your_user_name'`.
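The JDBC options quoted above can be assembled into a complete write call. The following is a minimal sketch rather than the original answer's code: the helper names `mysql_jdbc_options` and `write_to_mysql`, the `password` option, the default port 3306, and the `append` save mode are assumptions added for illustration. It also assumes the MySQL Connector/J JAR is available on Spark's classpath.

```python
from typing import Dict


def mysql_jdbc_options(host: str, database: str, table: str,
                       user: str, password: str, port: int = 3306) -> Dict[str, str]:
    """Build the option dictionary for Spark's JDBC data source (hypothetical helper)."""
    return {
        "url": f"jdbc:mysql://{host}:{port}/{database}",
        # Driver class quoted in the text; Connector/J 8.x uses com.mysql.cj.jdbc.Driver.
        "driver": "com.mysql.jdbc.Driver",
        "dbtable": table,
        "user": user,
        "password": password,  # assumption: the quoted option list is cut off before this
    }


def write_to_mysql(df, options: Dict[str, str], mode: str = "append") -> None:
    """Write a Spark DataFrame to MySQL; `df` must be a pyspark.sql.DataFrame."""
    # DataFrame.write returns a DataFrameWriter; pandas DataFrames have no such attribute.
    df.write.format("jdbc").options(**options).mode(mode).save()


# Example call (placeholder values, needs a running Spark session and MySQL server):
# opts = mysql_jdbc_options("localhost", "database_name", "destinationtablename",
#                           "your_user_name", "your_password")
# write_to_mysql(spark_df, opts)
```

If the data starts out as a pandas DataFrame `pdf`, `spark.createDataFrame(pdf)` converts it to a Spark DataFrame that can be passed as `df`.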

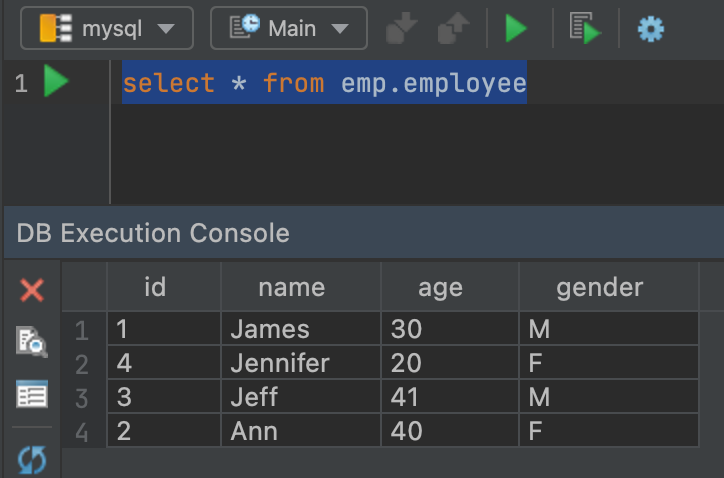

Recipe objective: how to save a DataFrame to MySQL in PySpark. Data merging and aggregation are essential parts of the day-to-day work on most big data platforms. In this scenario, we load a DataFrame into a MySQL database table.

The same approach writes data from a Spark DataFrame to various types of databases (such as MySQL, SingleStore, and Teradata) over a JDBC connection. The DataFrame's `write` attribute returns a `DataFrameWriter`, which exports the DataFrame to a database table.

Writing a Spark DataFrame to MySQL is something you might want to do for a number of reasons, and it is fairly simple to do on the fly. A single JDBC write can create a new table (for example `my_new_table`) and write the data there, inferring the schema and column order from the DataFrame.

To read data from a MySQL database, you can use the Spark SQL data sources API, which connects a Spark DataFrame to external data sources over JDBC. For example, a MySQL table named `employees` can be loaded into a DataFrame from a `SparkSession` created with `.appName("MySQL Integration Example")`.
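The source's code for the `employees` read is truncated, so the following is a hedged reconstruction of the general technique, not the original listing. The function names `build_session` and `read_mysql_table`, the connection URL, the credentials, and the default driver class (the Connector/J 8.x name) are all assumptions for illustration.

```python
def build_session(app_name: str = "MySQL Integration Example"):
    """Create (or reuse) a SparkSession with the application name from the text."""
    # Imported lazily so read_mysql_table can be defined without Spark installed.
    from pyspark.sql import SparkSession
    return SparkSession.builder.appName(app_name).getOrCreate()


def read_mysql_table(spark, url: str, table: str, user: str, password: str,
                     driver: str = "com.mysql.cj.jdbc.Driver"):
    """Load one MySQL table into a Spark DataFrame through the JDBC data source."""
    return (
        spark.read.format("jdbc")      # spark.read is a DataFrameReader
        .option("url", url)
        .option("driver", driver)
        .option("dbtable", table)
        .option("user", user)
        .option("password", password)
        .load()
    )


# Example call (placeholder values, needs a reachable MySQL server):
# spark = build_session()
# employees = read_mysql_table(spark, "jdbc:mysql://localhost:3306/database_name",
#                              "employees", "your_user_name", "your_password")
# employees.show()
```

Passing the session in as an argument keeps the read logic independent of how the session is configured, which also makes it easy to exercise with a stub in tests.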

Reading and writing a database table with PySpark DataFrames involves the same general steps for Redshift, SQL Server, Oracle, MySQL, Snowflake, and BigQuery. A common variation reads a CSV file and stores it in a database table, which can be MySQL, Oracle, Teradata, or any other database that supports a JDBC connection.

To read a table from MySQL using PySpark, use the `DataFrameReader` interface, which provides the `format` method for specifying the data source type and the `option` method for passing connection options.
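The CSV-to-database workflow mentioned above can be sketched as follows. This is an illustrative outline under assumptions, not code from the source: the names `connection_properties` and `csv_to_jdbc_table`, the CSV options (`header`, `inferSchema`), and the `append` save mode are choices made for the example, and the matching JDBC driver JAR is assumed to be on Spark's classpath.

```python
from typing import Dict


def connection_properties(user: str, password: str, driver: str) -> Dict[str, str]:
    """Bundle JDBC connection properties for DataFrameWriter.jdbc (hypothetical helper)."""
    return {"user": user, "password": password, "driver": driver}


def csv_to_jdbc_table(spark, csv_path: str, jdbc_url: str, table: str,
                      properties: Dict[str, str], mode: str = "append"):
    """Read a CSV file into a DataFrame and store it in a JDBC-accessible table."""
    # header=True uses the first row as column names; inferSchema=True guesses types.
    df = spark.read.csv(csv_path, header=True, inferSchema=True)
    # The target database is selected by the JDBC URL and driver class alone.
    df.write.jdbc(url=jdbc_url, table=table, mode=mode, properties=properties)
    return df


# Example call (placeholder values):
# props = connection_properties("your_user_name", "your_password",
#                               "com.mysql.cj.jdbc.Driver")
# csv_to_jdbc_table(spark, "people.csv",
#                   "jdbc:mysql://localhost:3306/database_name", "people", props)
```

Because only the URL and the driver class identify the database, the same function should work for other JDBC-capable systems once their driver is available to Spark.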

In this article, you have learned how to connect to a MySQL server from Spark, write a DataFrame to a table, and read a table into a DataFrame, with examples.