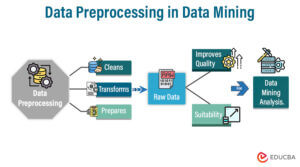

Data Preprocessing Dwdm Mod 2 Pdf Principal Component Analysis

Data preprocessing in data mining involves cleaning and transforming raw data to make it suitable for analysis. This step is crucial for identifying patterns and extracting insights from large datasets. Data warehousing organizes and compiles data into a single database, whereas data mining extracts important information from such databases.

Data Preprocessing Dwm Pdf Quantile Median

Data preprocessing describes any type of processing performed on raw data to prepare it for another processing procedure. Commonly used as a preliminary data mining practice, it transforms the data into a format that can be processed more easily and effectively for the user's purpose. Its main tasks are data cleaning, data integration, data transformation, and data reduction.

Data transformations, such as normalization, may be applied; normalization may improve the accuracy and efficiency of mining algorithms. Data reduction can shrink the data by aggregating it, eliminating redundant features, or clustering.

Data can also be smoothed by fitting it to a function, as in regression. Linear regression involves finding the best line to fit two attributes. Multiple linear regression is an extension in which more than two attributes are involved and the data are fit to a multidimensional surface.
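As a rough illustration of the two ideas above, the sketch below rescales one attribute with min-max normalization and smooths a second attribute by replacing each value with the one predicted by a least-squares line. The function names (`min_max_normalize`, `fit_line`, `smooth_by_regression`) and the `ages` and `incomes` values are made up for this example, and min-max scaling is only one of several normalization methods.

```python
def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Rescale values linearly into the range [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical: map them to new_min to avoid dividing by zero.
        return [new_min for _ in values]
    scale = (new_max - new_min) / (hi - lo)
    return [new_min + (v - lo) * scale for v in values]


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def smooth_by_regression(xs, ys):
    """Replace each y with the value predicted by the fitted line."""
    slope, intercept = fit_line(xs, ys)
    return [slope * x + intercept for x in xs]


# Hypothetical sample data, invented for illustration only.
ages = [20, 30, 40, 50, 60]
incomes = [22.0, 29.0, 41.0, 48.0, 60.0]

normalized_ages = min_max_normalize(ages)
smoothed_incomes = smooth_by_regression(ages, incomes)
print(normalized_ages)
print(smoothed_incomes)
```

The smoothed values lie exactly on the fitted line, so the noise in the original `incomes` is removed at the cost of any real deviation from a linear trend. Multiple linear regression follows the same idea with more than one predictor attribute.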

Data Preprocessing In Data Mining Vtupulse

Data preprocessing is a crucial step in data mining, and it matters just as much in data science and machine learning. It involves transforming raw data into a clean, structured format suitable for mining. Proper preprocessing improves the quality of the data, enhances the performance of algorithms, and yields more accurate and reliable results.

Topics covered under data preprocessing include the need for preprocessing, data cleaning, data integration and transformation, data reduction, and discretization and concept hierarchy generation.

The major tasks of data preprocessing are data cleaning, integration, reduction, and transformation. Data cleaning handles missing, noisy, and inconsistent data through techniques such as filling in missing values, smoothing noisy data, and resolving inconsistencies.
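Two of the cleaning techniques named above can be sketched in a few lines. This is a minimal example under stated assumptions: missing values are represented as `None` and filled with the attribute mean, and noise is smoothed with equal-frequency binning by bin means, which is one common smoothing method and is not named in the text. The function names and sample values are hypothetical.

```python
def fill_missing_with_mean(values):
    """Replace None entries with the mean of the known values."""
    known = [v for v in values if v is not None]
    if not known:
        raise ValueError("cannot compute a mean: every value is missing")
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]


def smooth_by_bin_means(values, bin_size):
    """Sort the values, split them into bins of bin_size, and
    replace every value with the mean of its bin."""
    ordered = sorted(values)
    smoothed = []
    for start in range(0, len(ordered), bin_size):
        bin_values = ordered[start:start + bin_size]
        bin_mean = sum(bin_values) / len(bin_values)
        smoothed.extend([bin_mean] * len(bin_values))
    return smoothed


# Hypothetical sample data, invented for illustration only.
readings = [10, None, 20, 30, None]
prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]

filled_readings = fill_missing_with_mean(readings)
smoothed_prices = smooth_by_bin_means(prices, bin_size=3)
print(filled_readings)
print(smoothed_prices)
```

Mean imputation is simple but shrinks the variance of the attribute, so a median or a model-based estimate may suit skewed data better. The bin size controls how aggressively the values are smoothed.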
