A BetterTransformer for Fast Transformer Inference (PyTorch)

In this video, I show you how to accelerate Transformer inference with Optimum, an open-source library by Hugging Face, and BetterTransformer, a PyTorch extension (available since …). Sometimes you can load your model directly onto your GPU devices with the `accelerate` library, so you can optionally load it that way first.

Step 3: Convert your model to BetterTransformer! Now it's time to convert your model using the BetterTransformer API.
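For comparison, a model assembled directly from PyTorch's own `nn.TransformerEncoderLayer` and `nn.TransformerEncoder` needs no separate conversion step. Per the PyTorch documentation, the fastpath is picked automatically at inference time when its conditions are met. The sketch below is a minimal illustration under stated assumptions: a small, randomly initialized encoder with arbitrary sizes, and a plain `.to(device)` call swapped in for `accelerate`. It shows only the inference setup: move to the device, switch to `eval()`, and run under `torch.inference_mode()`.

```python
import torch
from torch import nn

# Use a GPU when one is available; the native fastpath also runs on CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

# Small encoder built from native PyTorch layers (sizes are arbitrary assumptions).
# batch_first=True is one of the documented fastpath conditions.
layer = nn.TransformerEncoderLayer(d_model=64, nhead=4, dim_feedforward=128, batch_first=True)
encoder = nn.TransformerEncoder(layer, num_layers=2)

# Inference setup: move weights to the device and disable training mode (dropout off).
encoder = encoder.to(device).eval()

batch = torch.randn(8, 16, 64, device=device)  # (batch, sequence, d_model)

# Autograd disabled: together with eval mode, this makes the layers fastpath-eligible.
with torch.inference_mode():
    output = encoder(batch)

print(output.shape)  # torch.Size([8, 16, 64])
```

For Hugging Face models, this is where the Optimum conversion step comes in, since their attention modules are not built from these native PyTorch layers.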

Optimum provides wrappers around the original Transformers `Trainer` to make training on powerful hardware easy. Supported providers include Intel Gaudi accelerators (HPU), enabling optimal performance on first-generation Gaudi, Gaudi2, and Gaudi3; AWS Trainium, for accelerated training on Trn1 and Trn1n instances; and ONNX Runtime (optimized for GPUs).

Accelerating Transformers with Better Transformer's "fastpath": the traditional PyTorch Transformer implementation executes a sequence of individual PyTorch operations, which misses several optimization opportunities.

To solve this challenge, we created Optimum, an extension of Hugging Face Transformers that accelerates the training and inference of Transformer models like BERT. This post covers:

1. What is Optimum? An ELI5.
2. New Optimum inference and pipeline features.
3. An end-to-end tutorial on accelerating RoBERTa for question answering, including quantization and optimization.

The goal of this post is to show how to apply a few practical optimizations to improve the inference performance of 🤗 Transformers pipelines on a single GPU. Compatibility with the pipeline API is the driving factor behind the choice of inference optimization approaches.
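One way to check that the fastpath is a drop-in replacement for the traditional sequence of operations is to run the same `nn.TransformerEncoderLayer` both ways and compare the outputs. This is a minimal sketch with arbitrary layer sizes. It relies on the documented rule that the fastpath is only eligible in eval mode when autograd is disabled (or no tensor argument requires grad). With autograd enabled, the layer's trainable parameters force the regular path.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Eval mode disables dropout, so both runs are deterministic and comparable.
layer = nn.TransformerEncoderLayer(d_model=64, nhead=4, dim_feedforward=128, batch_first=True).eval()
x = torch.randn(4, 10, 64)  # (batch, sequence, d_model)

# Autograd enabled and parameters require grad: not fastpath-eligible,
# so the layer runs its regular sequence of PyTorch operations.
reference = layer(x).detach()

# Autograd disabled in eval mode: eligible for the fused fastpath.
with torch.no_grad():
    fast = layer(x)

# Both paths compute the same function; only small floating-point differences remain.
max_diff = (reference - fast).abs().max().item()
print(f"max abs difference: {max_diff:.2e}")
```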

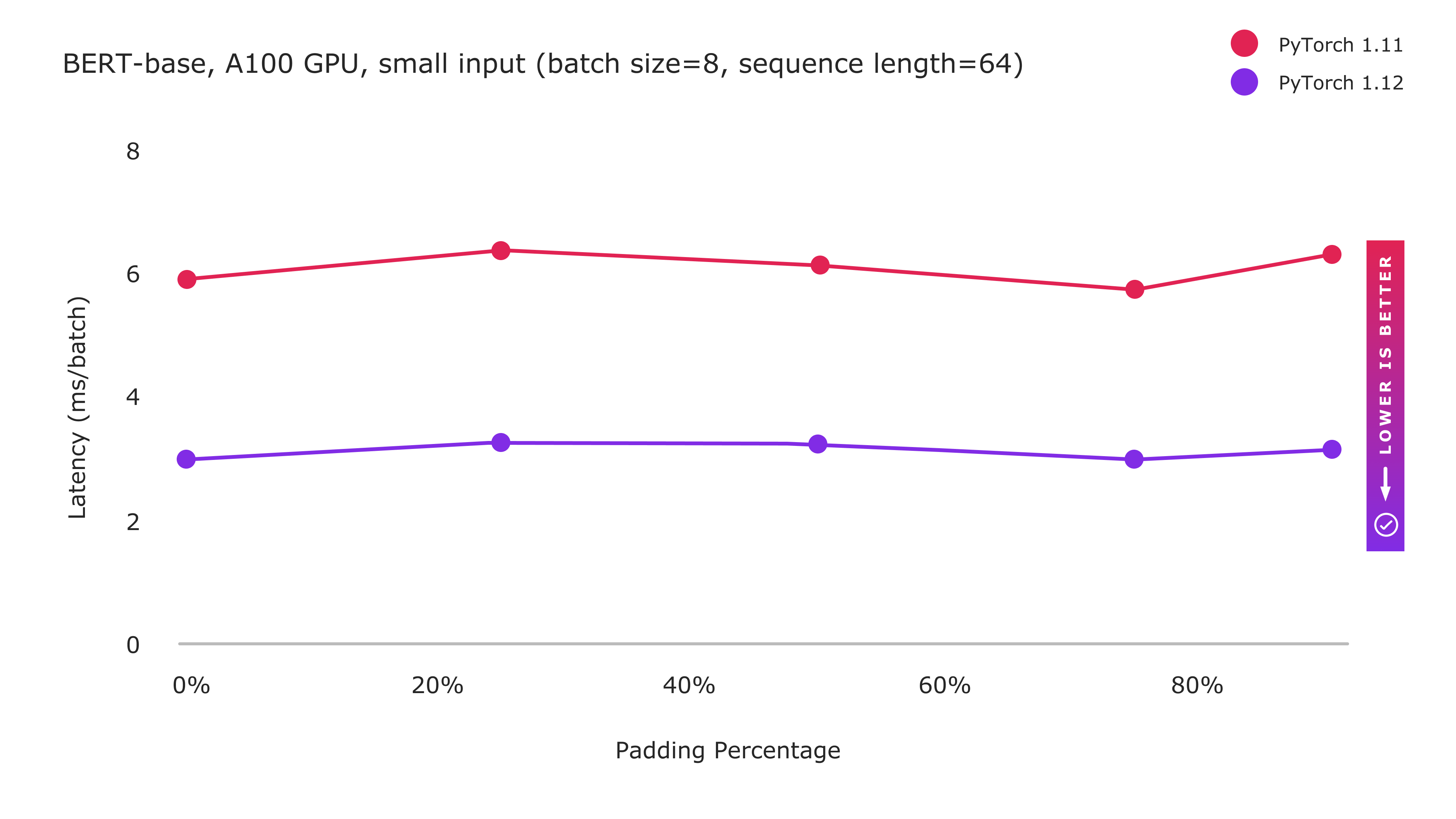

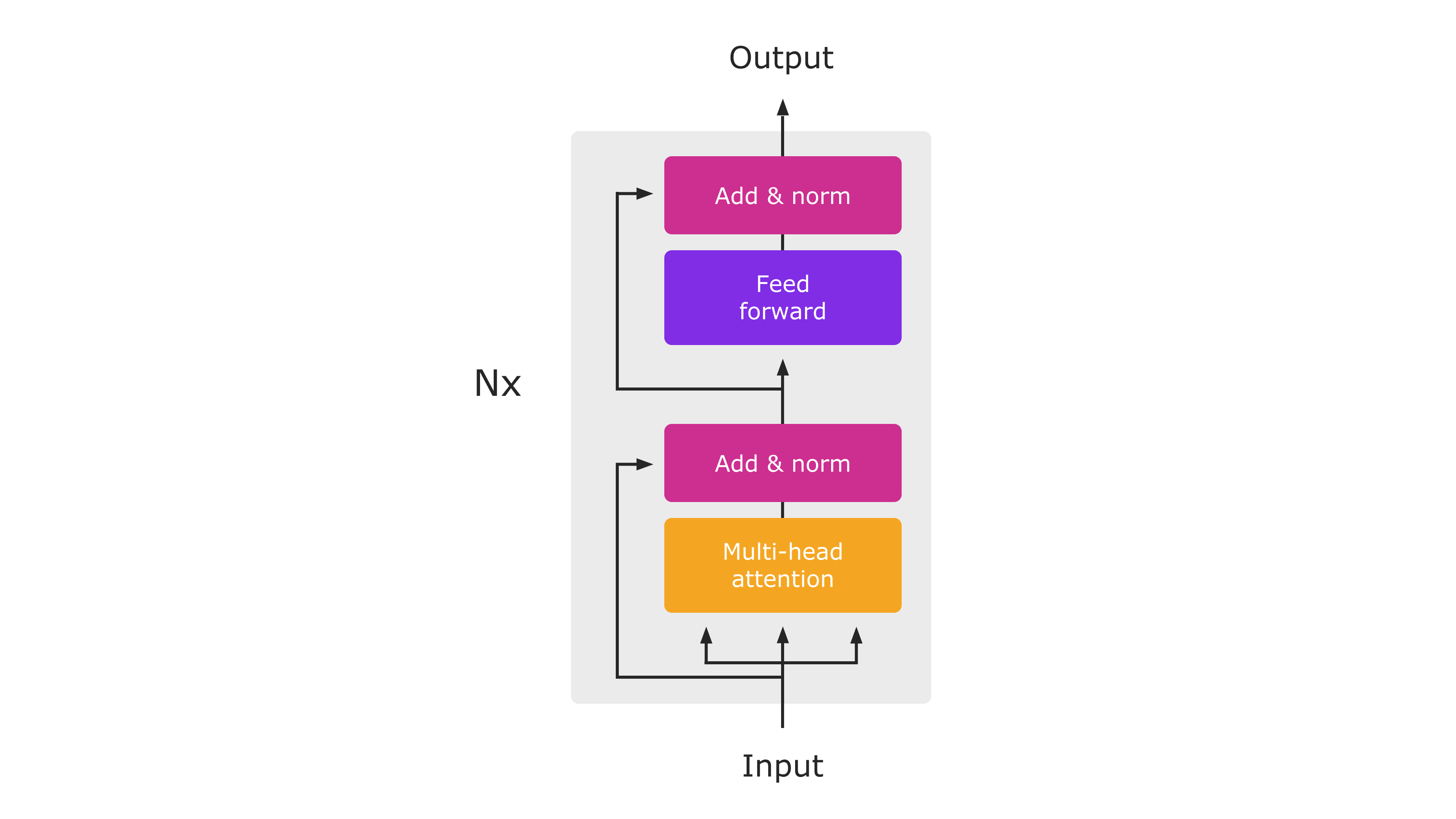

OpenVINO™ Blog: Accelerate Inference of Hugging Face Transformer

This guide demonstrates a few ways to optimize inference on a GPU. The optimization methods can be combined with each other to achieve even better performance, and they also work on distributed GPUs.

In this tutorial, we show how to use Better Transformer for production inference with torchtext. Better Transformer is a production-ready fastpath that accelerates the deployment of Transformer models with high performance on CPU and GPU.

Taking advantage of the fastpath: BetterTransformer is a fastpath for the PyTorch Transformer API. The fastpath is a native, specialized implementation of key Transformer functions for CPU and GPU that applies to common Transformer use cases.

BetterTransformer also supports faster inference on single- and multi-GPU setups for text, image, and audio models. PyTorch's native `nn.MultiheadAttention` fastpath, called BetterTransformer, can be used with Transformers through the integration in the 🤗 Optimum library.
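The `nn.MultiheadAttention` fastpath has its own eligibility conditions. Among those listed in the PyTorch documentation are self-attention (query, key, and value are the same tensor), batched 3-D input with `batch_first=True`, eval mode, and autograd disabled. The documentation also recommends `need_weights=False` for best performance. The following is a minimal sketch of a call that satisfies these conditions, with arbitrary sizes chosen as assumptions:

```python
import torch
from torch import nn

torch.manual_seed(0)

# Arbitrary sizes (embed_dim must be divisible by num_heads); batch_first=True.
mha = nn.MultiheadAttention(embed_dim=64, num_heads=4, batch_first=True).eval()

x = torch.randn(2, 12, 64)  # (batch, sequence, embed_dim)

with torch.no_grad():
    # Self-attention: the same tensor is passed as query, key, and value.
    # need_weights=False asks the module not to return attention weights.
    attn_out, _ = mha(x, x, x, need_weights=False)

print(attn_out.shape)  # torch.Size([2, 12, 64])
```

Passing different tensors for key and value (cross-attention), or calling the module in training mode, still works. Per the documented conditions, however, those calls fall back to the regular implementation.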
