Authenticate Databricks REST API and Access Delta Tables

Here's an example link that demonstrates how to authenticate and authorize access to Delta tables using a service principal and an Azure AD token. Yes, you can use a service principal and an Azure AD token to create a new DB pipeline (Jenkins CI/CD) instead of using the existing Azure resource token and PAT.

To access a Databricks resource with the Databricks CLI or REST APIs, clients must authorize using a Databricks account. This account must have permissions to access the resource, which can be configured by your Databricks administrator or by a user account with administrator privileges.
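This is a minimal sketch of how a Jenkins job could obtain a Microsoft Entra ID (Azure AD) token for a service principal. It uses the OAuth 2.0 client credentials flow and only the Python standard library, and it assumes the service principal is an Entra ID app registration with a client secret. The scope `2ff814a6-3304-4ab8-85cb-cd0e6f879c1d/.default` is the well-known Azure Databricks resource ID. The function names are hypothetical and illustrative, not part of the original setup.

```python
import json
import urllib.parse
import urllib.request

# Well-known application ID of the Azure Databricks resource in Microsoft Entra ID.
AZURE_DATABRICKS_RESOURCE_ID = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"


def build_token_request(tenant_id: str, client_id: str,
                        client_secret: str) -> urllib.request.Request:
    """Build (but do not send) a client-credentials token request to Entra ID."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # Scope the token to Azure Databricks rather than, e.g., Microsoft Graph.
        "scope": f"{AZURE_DATABRICKS_RESOURCE_ID}/.default",
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def fetch_entra_token(tenant_id: str, client_id: str, client_secret: str) -> str:
    """Send the token request and return the access_token field of the JSON reply."""
    req = build_token_request(tenant_id, client_id, client_secret)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)["access_token"]
```

In a Jenkins stage you might call `fetch_entra_token(...)` with values from credentials bound as environment variables. Names such as `ARM_TENANT_ID`, `ARM_CLIENT_ID` and `ARM_CLIENT_SECRET` are assumptions here. You would then send the returned token as a bearer token. Because these tokens are short-lived, requesting a fresh one per pipeline run is simpler than storing one.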

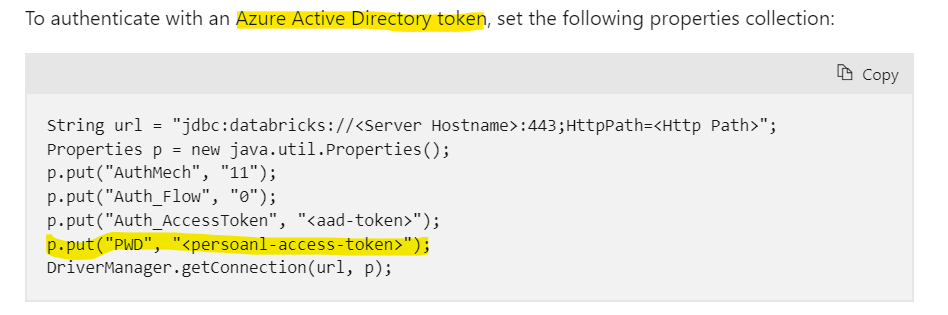

Databricks REST API

You can use an OAuth access token to authenticate to both Azure Databricks account-level and workspace-level REST APIs. To call account-level REST APIs, the service principal must have account admin privileges.

To authenticate as the service principal, you can use personal access tokens (PATs) or an OAuth access token, which is a Microsoft Entra ID (formerly Azure Active Directory) access token. Databricks recommends OAuth access tokens over PATs for greater security and convenience.

Make sure the service principal is identified consistently on both sides:

1. In Databricks, go to Settings > Identity and access > Service principals and note the Application ID.
2. In the Azure portal, search for the SPN and open its application (not the SPN itself). Verify that the Application (client) ID matches the ID from the previous step.

Once I synced these up, I was able to use the SPN to make a successful API call.

A REST API request also needs:

- Azure Databricks authentication information, such as an Azure Databricks OAuth token, an Azure managed identity, or a Microsoft Entra ID token.
- Any request payload or query parameters supported by the REST API operation, such as a cluster's ID.
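This is a minimal sketch of a workspace-level call authenticated with such a token, using only the Python standard library. `GET /api/2.0/clusters/get` with a `cluster_id` query parameter is used as one example operation. The workspace URL and cluster ID you pass in are placeholders. `has_application_id` is a hypothetical helper for the ID check described above. It assumes the response shape of the workspace SCIM endpoint `/api/2.0/preview/scim/v2/ServicePrincipals`, whose `Resources` entries carry an `applicationId` field.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def build_api_request(workspace_url: str, token: str, path: str,
                      query: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a GET request to a workspace-level REST API operation."""
    url = workspace_url.rstrip("/") + path
    if query:
        # Query parameters carry operation inputs such as a cluster's ID.
        url += "?" + urllib.parse.urlencode(query)
    # The OAuth / Entra ID access token goes in the standard bearer header.
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}, method="GET")


def call_api(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def has_application_id(scim_response: dict, application_id: str) -> bool:
    """Check a parsed SCIM ServicePrincipals list for an Entra application (client) ID."""
    return any(sp.get("applicationId") == application_id
               for sp in scim_response.get("Resources", []))
```

For example, `call_api(build_api_request(host, token, "/api/2.0/clusters/get", {"cluster_id": "<cluster-id>"}))`. In general HTTP terms, a 401 points at the token itself and a 403 at the service principal's permissions on the resource.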

Creating a REST API in Databricks That Displays Delta Tables

In this post, we'll dive into another integration method: using Databricks SQL REST APIs with Databricks OAuth for authorization. We'll demonstrate how to connect to Databricks with single sign-on (SSO) in both Power Apps and a custom Python application using OAuth.

Databricks provides unified client authentication, a common interface for creating and refreshing Databricks OAuth tokens. You configure it by setting process-local environment variables or specific fields in `.databrickscfg` and Terraform configurations.

For Delta Sharing recipients, a separate authentication flow uses OIDC federation. It allows JSON Web Tokens (JWTs) issued by the recipient's IdP to be used as short-lived OAuth tokens that Databricks authenticates. This Databricks-to-open sharing authentication flow is for recipients who do not have access to a Unity Catalog-enabled Databricks workspace.

I had written an article earlier on how to trigger a Databricks Spark job via the REST API (link). However, that article demonstrates calling the API using a personal token as.
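This is a minimal sketch of displaying Delta tables through the Databricks SQL Statement Execution API (`POST /api/2.0/sql/statements/`). It reuses a bearer token obtained as described earlier and relies only on the Python standard library. It assumes a running SQL warehouse (you supply its ID) and the API's default inline `JSON_ARRAY` result format. The function names are hypothetical, as are catalog and schema names such as `main.default`.

```python
import json
import urllib.request


def build_statement_request(workspace_url: str, token: str, warehouse_id: str,
                            statement: str,
                            wait_timeout: str = "30s") -> urllib.request.Request:
    """Build (but do not send) a POST to the SQL Statement Execution API."""
    body = {
        "warehouse_id": warehouse_id,  # SQL warehouse that runs the statement
        "statement": statement,        # e.g. SHOW TABLES or SELECT ... FROM a Delta table
        "wait_timeout": wait_timeout,  # how long the call blocks before returning a pending state
    }
    return urllib.request.Request(
        workspace_url.rstrip("/") + "/api/2.0/sql/statements/",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def rows_as_dicts(response: dict) -> list:
    """Turn an inline JSON_ARRAY result into a list of {column: value} dicts."""
    state = response.get("status", {}).get("state")
    if state != "SUCCEEDED":
        # PENDING/RUNNING results must be polled; FAILED/CANCELED carry an error instead.
        raise RuntimeError(f"statement did not finish successfully: {state}")
    columns = [col["name"] for col in response["manifest"]["schema"]["columns"]]
    rows = response.get("result", {}).get("data_array", [])
    return [dict(zip(columns, row)) for row in rows]
```

In a custom Python app, you might read the workspace URL from `DATABRICKS_HOST`, one of the unified client authentication environment variables. You could then render `rows_as_dicts(...)` for a query such as `SHOW TABLES IN main.default`. If you would rather not hand-roll HTTP, the Databricks SDK for Python exposes the same operation as `WorkspaceClient().statement_execution.execute_statement(...)` and resolves credentials through unified authentication.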
