BERT Transformers for Natural Language Processing

BERT (Bidirectional Encoder Representations from Transformers) is an open-source machine learning framework for natural language processing (NLP). It was introduced in 2018 by researchers at Google AI Language. This article explores the architecture, workings, and applications of BERT.

What is BERT?

BERT has revolutionized natural language processing by significantly enhancing the capabilities of language models.

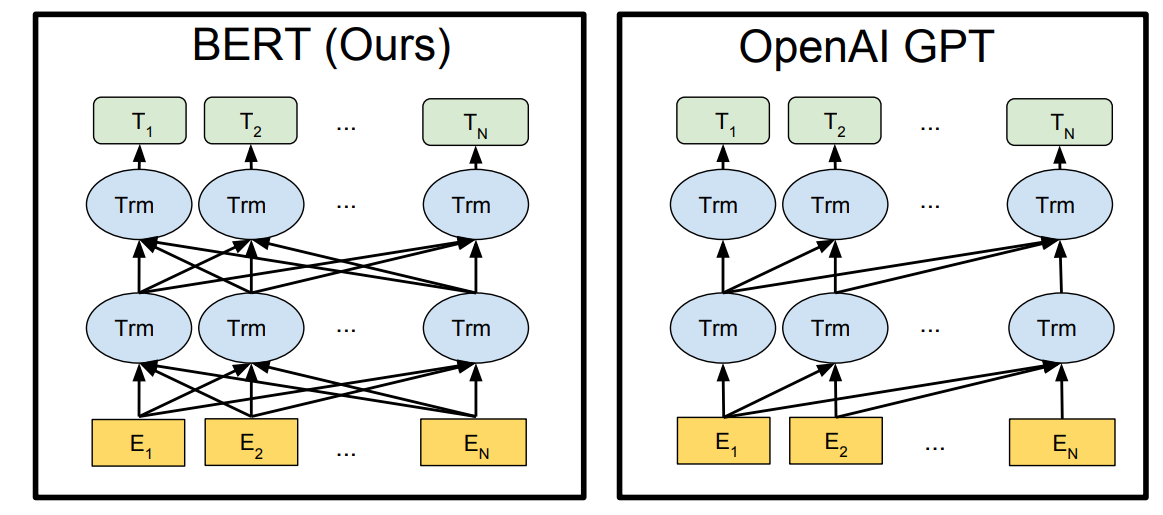

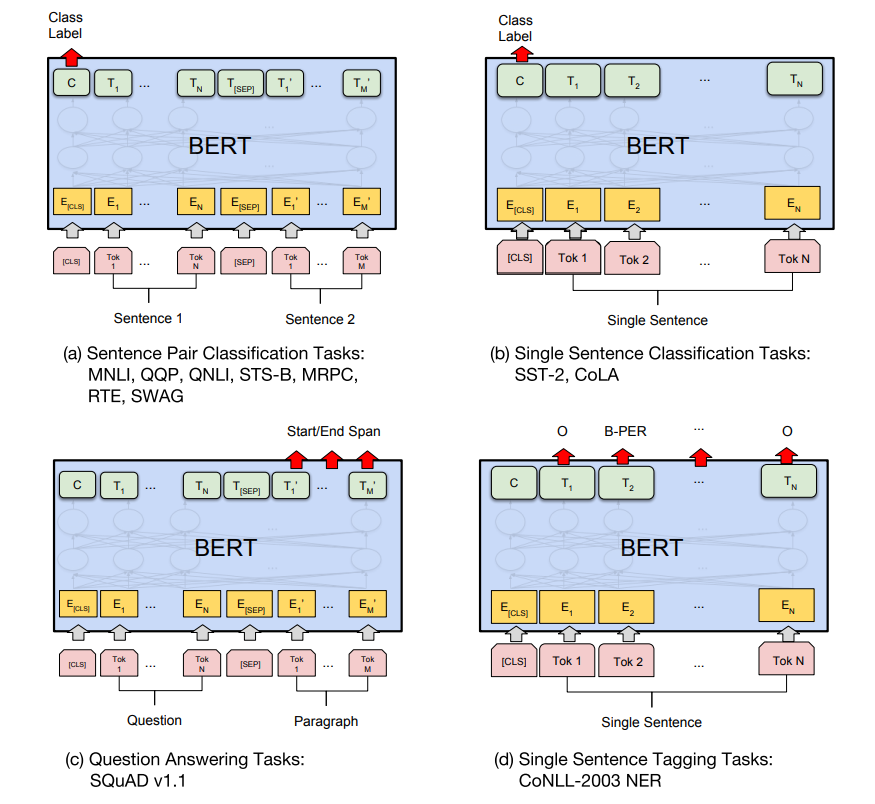

BERT uses the encoder-only transformer architecture. It dramatically improved the state of the art for large language models, and as of 2020 it was a ubiquitous baseline in NLP experiments [3]. BERT is pretrained on two tasks: masked token prediction and next sentence prediction.

In this review, we describe the application of BERT, one of the most popular deep-learning-based language models. The paper describes how the model works, the main areas in which it is applied to text analytics tasks, how it compares with similar models on each task, and some proprietary models.

The BERT model is one of the first applications of the transformer to NLP. Its architecture is simple, yet it does its job well on the tasks it was designed for. Developed by Google, BERT is a revolutionary NLP model that has transformed the NLP landscape.
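The two pretraining objectives can be illustrated with a minimal, pure-Python sketch of how training examples might be prepared. It assumes the input is already tokenized into lists of strings. It follows the masking scheme described in the original BERT paper: 15% of positions are selected, and a selected token becomes `[MASK]` 80% of the time, a random token 10% of the time, and stays unchanged 10% of the time. For next sentence prediction, sentence B is the true next sentence half the time and a random sentence otherwise. The function names `mask_tokens` and `make_nsp_pair` are hypothetical. This is not Google's reference implementation, which among other things uses WordPiece tokens and draws "NotNext" sentences from a different document.

```python
import random

MASK, CLS, SEP = "[MASK]", "[CLS]", "[SEP]"

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=random):
    """Hypothetical helper: BERT-style masked-token corruption.

    Each position is selected with probability mask_prob. A selected token is
    replaced by [MASK] 80% of the time, by a random vocab token 10% of the
    time, and kept unchanged 10% of the time. Returns (corrupted, labels),
    where labels holds the original token at selected positions, else None.
    """
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must predict the original token here
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))
            else:
                corrupted.append(tok)
        else:
            labels.append(None)  # position is excluded from the masked-LM loss
            corrupted.append(tok)
    return corrupted, labels

def make_nsp_pair(doc_sentences, i, corpus_sentences, rng=random):
    """Hypothetical helper: build one next-sentence-prediction example.

    With probability 0.5 (and only if a next sentence exists) sentence B is the
    real next sentence (label 1, IsNext); otherwise it is a random corpus
    sentence (label 0, NotNext). Simplification: the random sentence is not
    guaranteed to come from a different document.
    """
    a = doc_sentences[i]
    if rng.random() < 0.5 and i + 1 < len(doc_sentences):
        b, label = doc_sentences[i + 1], 1
    else:
        b, label = rng.choice(corpus_sentences), 0
    tokens = [CLS] + a + [SEP] + b + [SEP]
    # Segment ids: 0 for [CLS] A [SEP], 1 for B [SEP]
    segment_ids = [0] * (len(a) + 2) + [1] * (len(b) + 1)
    return tokens, segment_ids, label

# Usage with a seeded generator for reproducibility
rng = random.Random(0)
sentence = ["the", "cat", "sat", "on", "the", "mat"]
print(mask_tokens(sentence, vocab=sentence, rng=rng))
doc = [["the", "cat", "sat"], ["it", "was", "tired"]]
print(make_nsp_pair(doc, 0, [["stocks", "fell"]], rng=rng))
```

Keeping 10% of selected tokens unchanged and replacing another 10% with random tokens is the paper's way of reducing the mismatch between pretraining, where `[MASK]` appears, and fine-tuning, where it never does.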

BERT is a groundbreaking NLP model that has significantly enhanced machines' understanding of human language. Its core mechanisms can be examined step by step:

1. Bidirectional training: BERT learns the context of a word from both its left and its right.

By providing context-aware representations of words, BERT addresses limitations of earlier models and has become a game changer for NLP applications such as sentiment analysis and machine translation. As an open-source framework, it is designed to help computers interpret ambiguous language in context (for example, "bank" in "river bank" versus "bank account") and to derive knowledge and patterns from sequences of words. Its ability to understand language in a deeply contextual and nuanced way makes BERT, developed by Google, one of the most influential language models in modern NLP.
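The bidirectional training described above comes from the encoder's self-attention. No causal mask is applied, so every token can attend to positions on both its left and its right. The toy sketch below contrasts that setting with a left-to-right (causal) mask. It is a single-head, pure-Python illustration that assumes query and key vectors are given directly. Real BERT layers compute them with learned projections, use multiple heads, and operate on tensors. `attention_weights` is a hypothetical helper.

```python
import math

def softmax(xs):
    """Numerically stable softmax; -inf scores become weight 0.0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention_weights(q, k, causal=False):
    """Scaled dot-product attention weights for one sequence.

    q and k are lists of equal-length vectors, one per token.
    causal=False: every token attends to every position (encoder / BERT setting).
    causal=True: token i only attends to positions j <= i (left-to-right setting).
    """
    d = len(q[0])
    n = len(q)
    weights = []
    for i in range(n):
        scores = []
        for j in range(n):
            if causal and j > i:
                scores.append(float("-inf"))  # future position is masked out
            else:
                dot = sum(a * b for a, b in zip(q[i], k[j]))
                scores.append(dot / math.sqrt(d))
        weights.append(softmax(scores))
    return weights

# Toy query/key vectors for a 3-token sequence (illustrative values only)
q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention_weights(q, k)[0])               # token 0 attends to all three positions
print(attention_weights(q, k, causal=True)[0])  # → [1.0, 0.0, 0.0]
```

In the bidirectional case, the first token's representation already depends on tokens that come after it. The causal variant cannot provide that, which is why BERT pairs unmasked attention with the masked-token objective instead of next-word prediction.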