BERT Neural Network Explained

BERT advanced the state of NLP by outperforming previous models on 11 of the most common NLP tasks, making it the jack of all NLP trades. In this guide, you'll learn what BERT is, why it's different, and how to get started using it. The guide is organized around two questions: what is BERT used for, and how does BERT work? Let's get started! 🚀

1. What is BERT used for?

At the end of 2018, researchers at Google AI Language open-sourced a new technique for natural language processing (NLP) called BERT (Bidirectional Encoder Representations from Transformers).

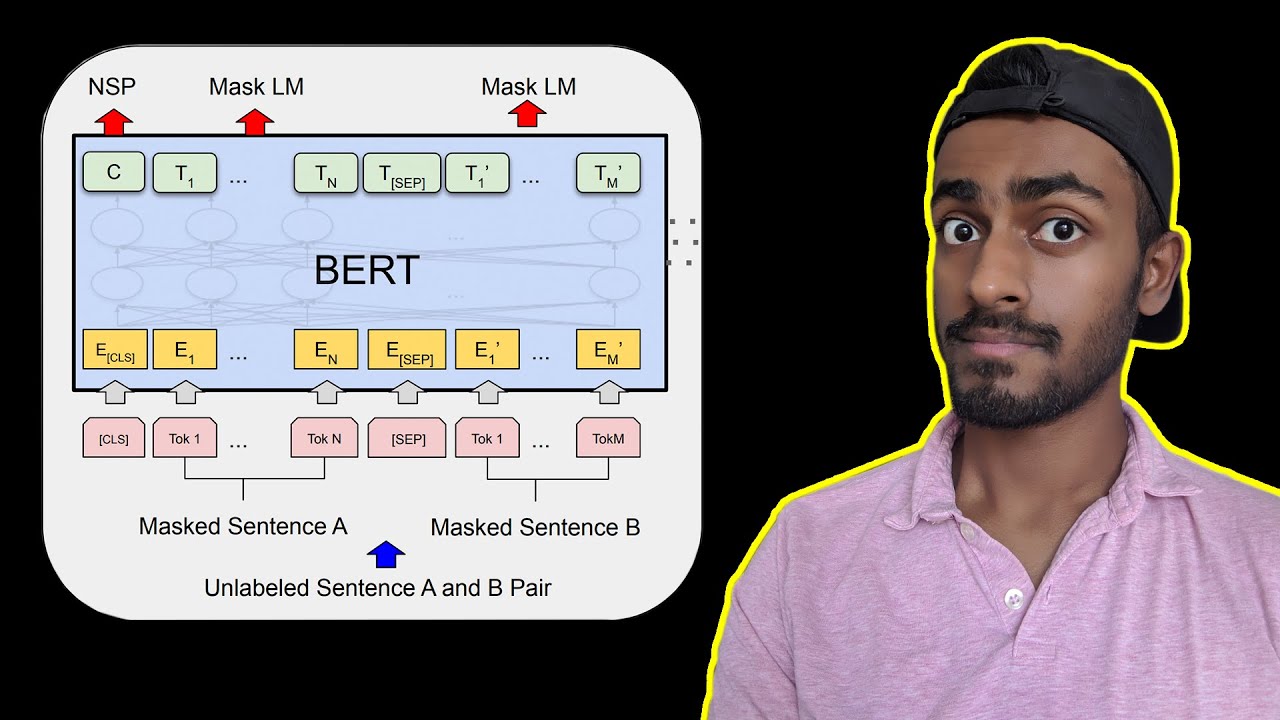

Bidirectional Encoder Representations from Transformers (BERT) is a language model introduced in October 2018 by researchers at Google. [1][2] It learns to represent text as a sequence of vectors using self-supervised learning, and it uses an encoder-only transformer architecture.

BERT is a deep learning language model designed to improve performance on natural language processing (NLP) tasks. It is best known for its ability to take context into account by analyzing the relationships between words in a sentence bidirectionally, that is, by using the words on both sides of each position. While BERT is similar to models like GPT, its focus is to understand text rather than generate it.
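To make "bidirectional" concrete, the sketch below contrasts the attention pattern of an encoder-only model such as BERT with the left-to-right pattern used by GPT-style models. It is a toy illustration in plain Python, not BERT's actual implementation: the function names, the example sentence, and the uniform scores are all assumptions chosen for readability.

```python
import math

def attention_weights(scores, mask):
    """Row-wise softmax over scores, ignoring positions where mask is 0."""
    weights = []
    for row_scores, row_mask in zip(scores, mask):
        exps = [math.exp(s) if m else 0.0 for s, m in zip(row_scores, row_mask)]
        total = sum(exps)  # never zero: each token may always attend to itself
        weights.append([e / total for e in exps])
    return weights

def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every other token."""
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    """Decoder-style mask: token i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

# Hypothetical example sentence; real models work on subword token IDs.
tokens = ["the", "bank", "of", "the", "river"]
n = len(tokens)

# Uniform toy scores; a real model computes these from learned query/key vectors.
scores = [[0.0] * n for _ in range(n)]

bi = attention_weights(scores, bidirectional_mask(n))
causal = attention_weights(scores, causal_mask(n))

# Row 1 is the token "bank".
print("bidirectional:", bi[1])   # non-zero weight on "river" (right context)
print("causal:       ", causal[1])  # zero weight on everything after "bank"
```

In the bidirectional case the representation of "bank" can draw on "river", which appears later in the sentence; under the causal mask that right-hand context is hidden.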

Transformers are models that can be designed to translate text, write poems and op-eds, and even generate computer code. BERT was introduced in a paper published by researchers at Google AI Language. It caused a stir in the machine learning community by presenting state-of-the-art results on a wide variety of NLP tasks, including question answering (SQuAD v1.1), natural language inference (MNLI), and others.

BERT has changed NLP by enabling machines to better understand the nuances of human language, such as context, sentiment, and syntax. It is like a decoder ring for AI, unlocking the secrets of language. At the heart of modern NLP lies BERT, a model built on transformers, which introduced a powerful framework for pre-training language representations using bidirectional transformers. This markdown file explores BERT in depth, covering its architecture, training process, fine-tuning, applications, advantages, and future directions.
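The pre-training mentioned above is self-supervised: the training signal comes from the text itself. The sketch below illustrates the masked-token corruption described in the original BERT paper, where roughly 15% of tokens are selected and, of those, about 80% are replaced by a `[MASK]` token, 10% by a random token, and 10% are left unchanged. Those percentages come from the paper rather than from this article, and the function name, toy vocabulary, and whitespace tokenization are assumptions made for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (inputs, labels) for masked-token prediction.

    labels[i] holds the original token where the model must make a
    prediction, and None where no prediction is required.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)               # replace with [MASK]
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # replace with a random token
            else:
                inputs.append(tok)                # keep the token unchanged
        else:
            labels.append(None)
            inputs.append(tok)
    return inputs, labels

# Hypothetical toy sentence and vocabulary.
sentence = "the cat sat on the mat near the river bank".split()
vocab = sorted(set(sentence))

inputs, labels = mask_tokens(sentence, vocab, mask_prob=0.3)
for original, corrupted, label in zip(sentence, inputs, labels):
    print(f"{original:>6} -> {corrupted:<7} target={label}")
```

Because the model sees the words on both sides of each corrupted position, predicting the original token pushes it to build context-aware representations, which fine-tuning then adapts to a specific task.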
