A Soft Introduction To NLP Semantic Similarity Calculations Using

Text similarity is a useful natural language processing (NLP) tool. It allows you to find similar pieces of text and has many real-world use cases. This article discusses text similarity, its uses, and the most common algorithms that implement it.

To compute the similarity between two text documents, you can use the Word2Vec model from the gensim library. The model captures semantic relationships between individual words. To compare sentences or documents, the word vectors are combined, for example by averaging them, and the resulting vectors are then compared.
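As a rough illustration of how word vectors can yield a sentence-level score, the sketch below averages the vectors of the words in each sentence and compares the averages with cosine similarity. The three-dimensional vectors in `TOY_VECTORS` are invented for this example and stand in for what a trained Word2Vec model would provide; the function names are likewise hypothetical, and averaging is only one of several ways to combine word vectors.

```python
import math

# Hypothetical 3-dimensional word vectors, invented for illustration only.
# A trained Word2Vec model would supply learned vectors with many more dimensions.
TOY_VECTORS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "sleeps": [0.1, 0.9, 0.2],
    "naps": [0.2, 0.8, 0.3],
    "car": [0.0, 0.1, 0.9],
    "drives": [0.1, 0.2, 0.8],
}


def sentence_vector(sentence, vectors):
    """Average the vectors of the words in the sentence that have a vector."""
    words = [w for w in sentence.lower().split() if w in vectors]
    if not words:
        raise ValueError("sentence contains no known words")
    dim = len(next(iter(vectors.values())))
    return [sum(vectors[w][i] for w in words) / len(words) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


base = sentence_vector("cat sleeps", TOY_VECTORS)
close = cosine_similarity(base, sentence_vector("kitten naps", TOY_VECTORS))
far = cosine_similarity(base, sentence_vector("car drives", TOY_VECTORS))
print(f"cat sleeps vs kitten naps: {close:.3f}")
print(f"cat sleeps vs car drives:  {far:.3f}")
```

With these toy vectors, the two sentences about a sleeping cat score higher than the unrelated pair, even though they share no words. That is the property that makes embedding-based similarity attractive compared with pure word overlap.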

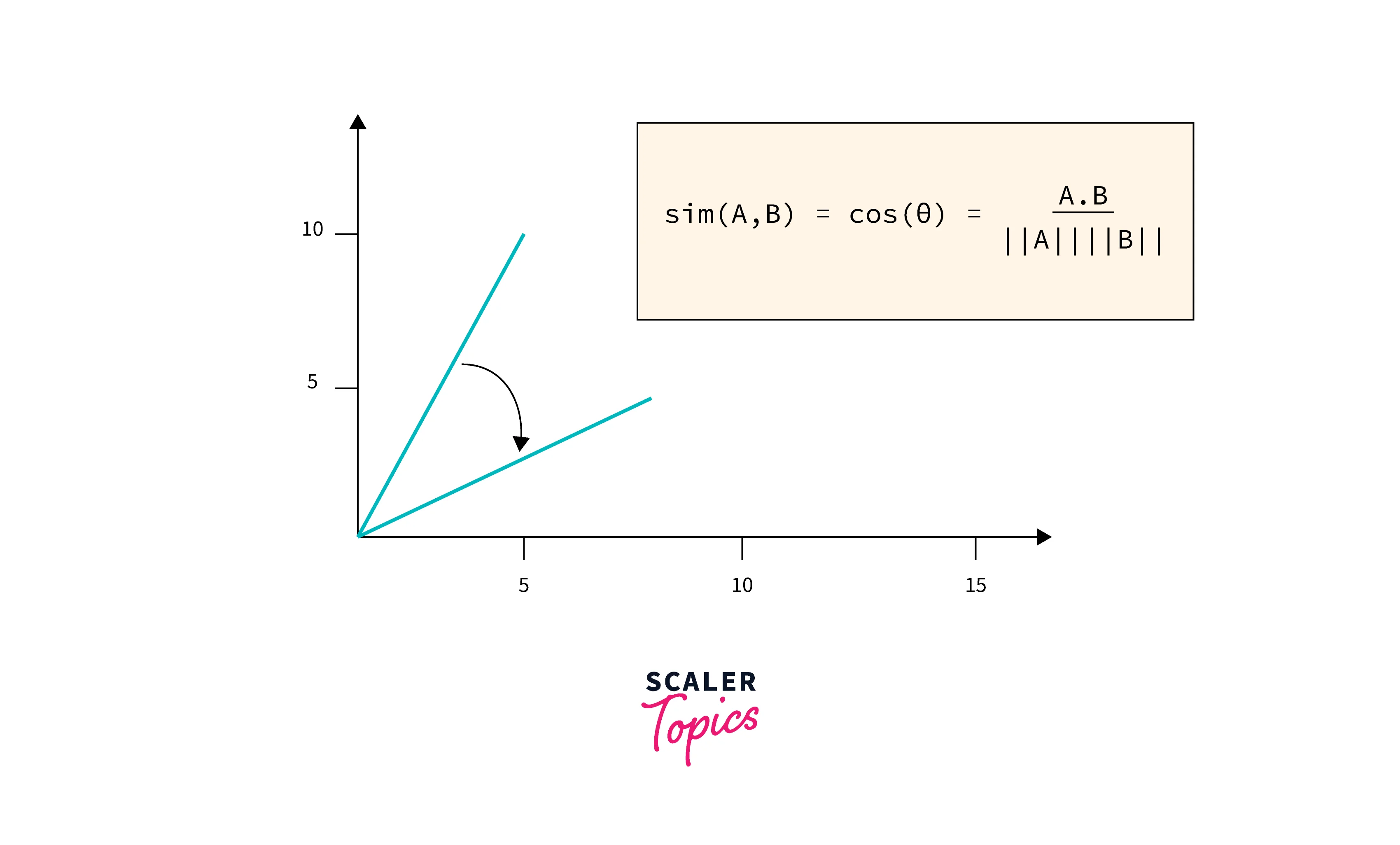

Text Similarity In NLP Scaler Topics

After cleaning up the text, we calculate TF-IDF (term frequency-inverse document frequency) to measure how important each word is in a document. Then we use cosine similarity to determine how similar two documents are.

In natural language processing (NLP), determining the degree of similarity between two documents is a crucial task. There are various methods for doing so, each with its own strengths and suited to different use cases.

Text similarity is a crucial aspect of NLP and information retrieval. Understanding the similarity between texts enables tasks such as plagiarism detection, document clustering, recommendation systems, and search engine optimization.

Similarity measures are used in NLP to quantify the degree of similarity or dissimilarity between two pieces of text. Commonly used measures include cosine similarity, which measures the similarity between two vectors by calculating the cosine of the angle between them.
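The TF-IDF and cosine similarity steps described above can be sketched in plain Python. This is a minimal from-scratch version, not the code of any particular library: the function names are hypothetical, tokenization is a simple whitespace split, and the smoothed IDF formula used here is one common variant, so scores from a library such as scikit-learn may differ in detail.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def tfidf_vectors(documents):
    """Return (vocabulary, vectors) with one TF-IDF vector per document."""
    tokenized = [tokenize(doc) for doc in documents]
    vocabulary = sorted({term for doc in tokenized for term in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for doc in tokenized for term in set(doc))
    # Smoothed IDF (an assumed variant): rarer terms receive larger weights.
    idf = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1 for t in vocabulary}
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        total = len(doc) or 1  # avoid dividing by zero for an empty document
        vectors.append([counts[t] / total * idf[t] for t in vocabulary])
    return vocabulary, vectors


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


docs = [
    "the cat sat on the mat",
    "the cat lay on the rug",
    "stock prices fell sharply today",
]
vocabulary, vectors = tfidf_vectors(docs)
sim_related = cosine_similarity(vectors[0], vectors[1])
sim_unrelated = cosine_similarity(vectors[0], vectors[2])
print(f"doc 0 vs doc 1: {sim_related:.3f}")
print(f"doc 0 vs doc 2: {sim_unrelated:.3f}")
```

The first two documents share several terms and get a positive score, while the third shares none with the first and scores zero. This also shows the main limitation of TF-IDF: it only rewards exact word overlap, not related meaning.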

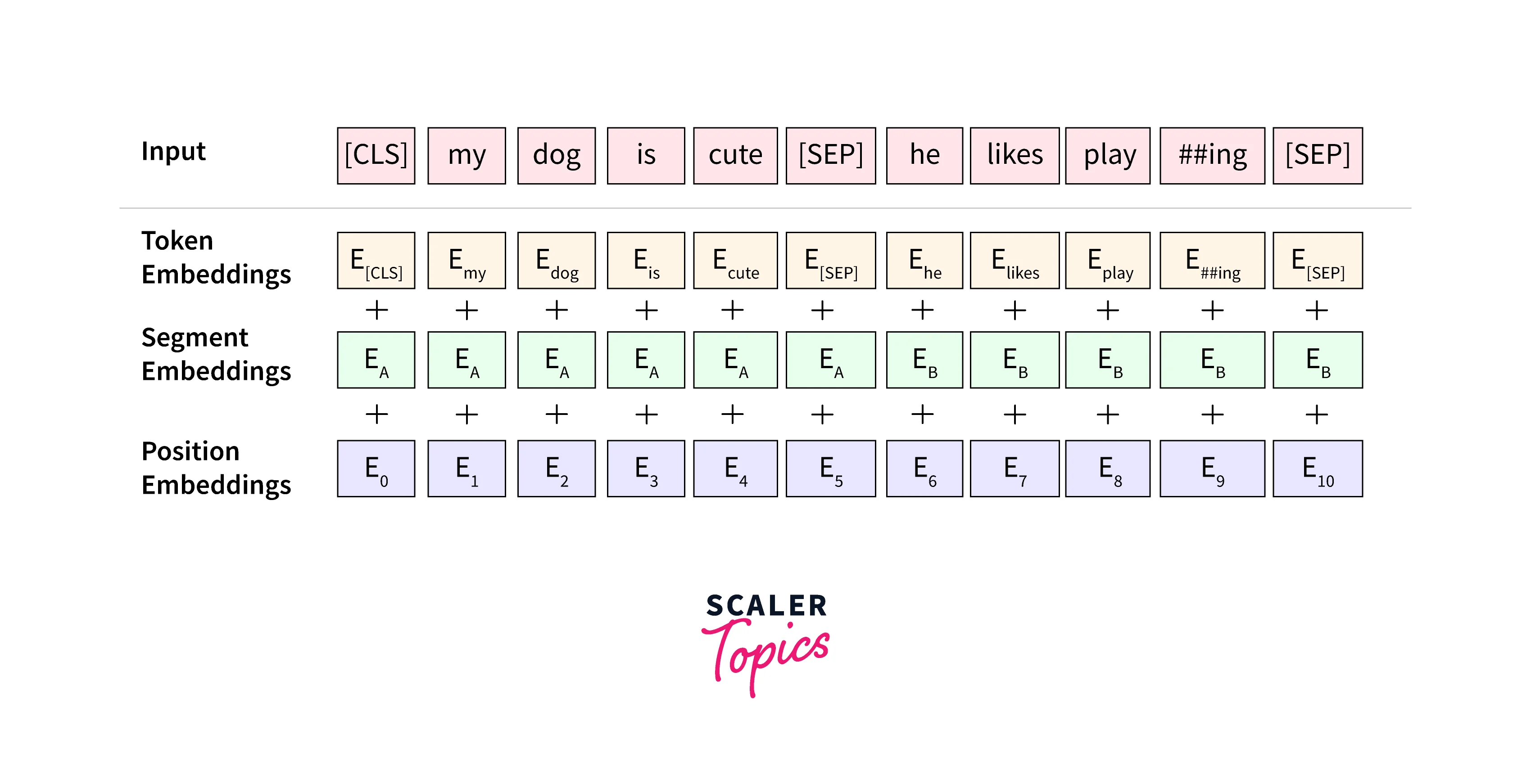

Computing the similarity between two text documents is a common task in NLP, with several practical applications. It is commonly used, for example, to rank results in a search engine or to recommend similar content to readers.

This repository contains Python code for performing text similarity analysis using natural language processing (NLP) techniques. The code uses libraries such as pandas, scikit-learn, NLTK, and seaborn to analyze the similarity between documents based on their content.

In this article, we will explore different techniques to calculate text document similarity using Python 3.

1. Preprocessing the text documents. Before calculating the similarity between two text documents, it is essential to preprocess them to remove noise and irrelevant information. The preprocessing steps typically involve:

Showing four algorithms to transform text into embeddings (TF-IDF, Word2Vec, Doc2Vec, and transformers) and two methods to compute similarity (cosine similarity and Euclidean distance).
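To make the preprocessing step and the two similarity methods concrete, here is a small sketch. The preprocessing choices (lowercasing, stripping punctuation, dropping a short stop-word list) are assumptions about what such a pipeline commonly includes, not the steps of any specific article, and `STOP_WORDS` and the function names are hypothetical. Plain word counts are used as vectors to keep the comparison of the two measures easy to follow.

```python
import math
import re
from collections import Counter

# A tiny illustrative stop-word list; real pipelines use much longer ones.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "on"}


def preprocess(text):
    """Lowercase, keep only alphanumeric tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def count_vectors(doc_a, doc_b):
    """Build word-count vectors for two documents over their shared vocabulary."""
    counts_a = Counter(preprocess(doc_a))
    counts_b = Counter(preprocess(doc_b))
    vocabulary = sorted(set(counts_a) | set(counts_b))
    return [counts_a[t] for t in vocabulary], [counts_b[t] for t in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; ignores vector length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between a and b; sensitive to vector length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


short = "The cat sat on the mat."
doubled = short + " " + short  # same content, twice as long
vec_short, vec_doubled = count_vectors(short, doubled)
cos = cosine_similarity(vec_short, vec_doubled)
dist = euclidean_distance(vec_short, vec_doubled)
print(f"cosine similarity:  {cos:.3f}")
print(f"euclidean distance: {dist:.3f}")
```

Repeating a document leaves the direction of its count vector unchanged, so cosine similarity treats the two texts as identical, while Euclidean distance grows with the difference in length. This is one reason cosine similarity is often preferred when documents vary in size.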
