It seems that a workaround has been found to mitigate potential errors with ChromaDB, and a fix has been implemented. However, a new issue has been reported where a `TypeError` occurs when trying to add a record to a collection using the `HuggingFaceBgeEmbeddings` object.

I am trying to process 1000-page PDFs using Hugging Face embeddings and ChromaDB. Whenever I try to upload a large file, however, I get an error. I don't know whether ChromaDB can handle files that big.
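One common way to keep a very large PDF manageable is to split its extracted text into smaller, overlapping chunks, each with a stable ID, before embedding anything. The sketch below assumes the PDF text has already been extracted into a single string; `chunk_text` and its `chunk_size`/`overlap` defaults are hypothetical choices for illustration, not ChromaDB settings.

```python
from typing import Iterator, Tuple


def chunk_text(
    text: str, doc_id: str, chunk_size: int = 1000, overlap: int = 200
) -> Iterator[Tuple[str, str]]:
    """Yield (id, chunk) pairs of at most chunk_size characters.

    Hypothetical helper: consecutive chunks share `overlap` characters so a
    sentence cut at a boundary still appears whole in at least one chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    for n, start in enumerate(range(0, len(text), step)):
        yield f"{doc_id}-{n}", text[start:start + chunk_size]
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
```

The resulting IDs and chunks can then be passed to `collection.add(ids=..., documents=...)` a slice at a time instead of in one call for the whole file.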

There could be a problem with passing the model to the function and calling it repeatedly. If that doesn't solve it, we would need a standalone reproduction script to try on our end.

The embedding model I am using returns a k × f tensor, where k is the number of tokens in the query phrase and f is the number of features. The Chroma Hugging Face embedding function expects a 1 × f tensor only.

So how do I fix these errors? Since it's an error on Hugging Face's side, all we users can do is report it and wait. The error is: `Expected EmbeddingFunction.__call__ to have the following signature: odict_keys(['self', 'input']), got odict_keys(['args', 'kwargs'])`.
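Both problems in this thread can be handled in one place. The error message quoted above shows that ChromaDB expects a custom embedding function's `__call__` to take exactly `(self, input)`, and each input document has to map to a single vector rather than a k × f matrix. The sketch below mean-pools the k token rows into one f-length vector per input. `token_embeddings` is a hypothetical stand-in for whatever model call returns the k × f output; in real code the class would typically subclass `chromadb.EmbeddingFunction`, which is left out here so the sketch runs without ChromaDB installed.

```python
from typing import Callable, List

Vector = List[float]


def mean_pool(token_vectors: List[Vector]) -> Vector:
    """Average a k x f matrix over its k rows, giving one f-length vector."""
    if not token_vectors:
        raise ValueError("need at least one token vector")
    k = len(token_vectors)
    return [sum(column) / k for column in zip(*token_vectors)]


class MeanPooledEmbeddingFunction:
    """Hypothetical wrapper around a model that returns per-token vectors.

    `token_embeddings` is an assumed callable returning a k x f matrix
    (one row per token) for a single string.
    """

    def __init__(self, token_embeddings: Callable[[str], List[Vector]]):
        self._token_embeddings = token_embeddings

    # The parameter must be named `input`: per the error message, ChromaDB
    # expects ['self', 'input'] and rejects (*args, **kwargs).
    def __call__(self, input: List[str]) -> List[Vector]:
        return [mean_pool(self._token_embeddings(text)) for text in input]
```

Mean pooling is only one option; some models are meant to be pooled by taking the first (CLS) token instead, so the model card is worth checking before choosing.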

My goal is to use this embedding model through the endpoint to convert a PDF file into vectors and store them in a vector database using LangChain. However, I'm encountering an issue where I receive an error stating "maximum allowed batch size 32" when I run my code.

I have a ChromaDB vector database and I'm trying to create embeddings for chunks of text like the example below, using a custom embedding function. My end goal is to do semantic search over a collection I create from these text chunks. In this example, I used the huffon/sentence-klue-roberta-base model from Hugging Face:

```python
import chromadb
from chromadb.db.base import UniqueConstraintError
from chromadb.utils import embedding_functions

client = chromadb.Client()
em = embedding_functions.SentenceTransformerEmbeddingFunction(model_name="huffon/sentence-klue-roberta-base")

try:
    collection = client.create_collection(name="article", embedding_function=em)
except UniqueConstraintError:
    # The collection already exists, so load it instead
    collection = client.get_collection(name="article", embedding_function=em)
```

I'm facing an issue when using Langflow, Hugging Face embeddings, and ChromaDB together. I tested the embedding model alone in Langflow, and it works fine. I checked the API key: it was initially misconfigured, but is now fixed. I added debug logs: the first API call returns valid data, but the second one returns an empty response (`b''`), causing the error.
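For the "maximum allowed batch size 32" error, and for an empty `b''` response on a later call, one option is to send texts to the endpoint in batches of at most 32 and treat an empty reply as a failed call to retry, not as data. The sketch below assumes a hypothetical `embed_batch` callable that posts one batch to the endpoint and returns the raw response body as bytes containing a JSON list with one vector per text; the limit of 32 comes from the error message, while `max_retries` and the linear backoff are arbitrary choices.

```python
import json
import time
from typing import Callable, List, Sequence

Vector = List[float]


def embed_in_batches(
    texts: Sequence[str],
    embed_batch: Callable[[List[str]], bytes],
    batch_size: int = 32,
    max_retries: int = 3,
    backoff_seconds: float = 1.0,
) -> List[Vector]:
    """Embed `texts` in batches no larger than `batch_size`.

    `embed_batch` is hypothetical: it sends one batch to the endpoint and
    returns the raw body, assumed to be a JSON list of vectors.
    """
    vectors: List[Vector] = []
    for start in range(0, len(texts), batch_size):
        batch = list(texts[start:start + batch_size])
        for attempt in range(max_retries):
            body = embed_batch(batch)
            if body:  # an empty body (b'') counts as a failed call
                break
            if attempt < max_retries - 1:
                time.sleep(backoff_seconds * (attempt + 1))
        else:
            raise RuntimeError(f"empty response for batch starting at {start}")
        batch_vectors = json.loads(body)
        if len(batch_vectors) != len(batch):
            raise RuntimeError("endpoint returned the wrong number of vectors")
        vectors.extend(batch_vectors)
    return vectors
```

Failing loudly after the retries are used up makes an empty response show up as its own error instead of surfacing later as a confusing parsing failure.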

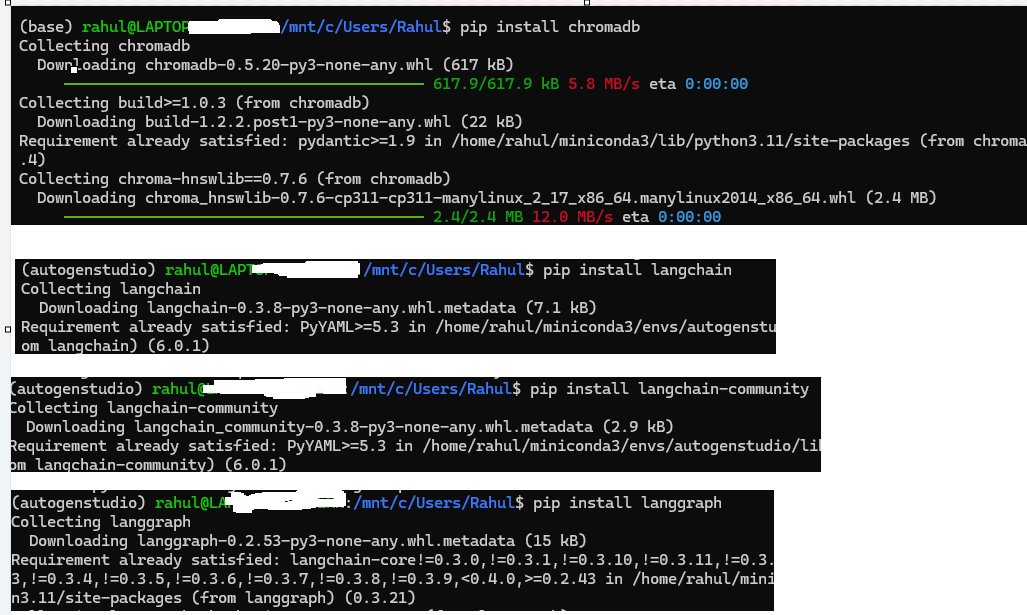