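CS231n: Convolutional Neural Networks for Visual Recognition

3. Backpropagation

We have almost shown you backpropagation already. At its core it is just the use of the chain rule. The trick is that, when computing derivatives for lower layers, we reuse the derivatives already computed for higher layers, which reduces the amount of computation.

Computation graphs and backpropagation: in software engineering, a neural network's equations are represented as a graph. Source nodes are inputs, interior nodes are operations, and edges pass along the results of operations.

Stanford CS224n: NLP with Deep Learning | Spring 2024 | Lecture 3: Backpropagation, Neural Network (Stanford Online).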
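To make "chain rule plus reuse" concrete, here is a minimal sketch in plain Python of reverse-mode differentiation on a hand-built computation graph for f = (x + y) · z. The `Node` class and the `add`, `mul` and `backward` helpers are hypothetical names chosen for illustration, not any library's API. Each interior node records its local gradients during the forward pass; the backward pass visits nodes in reverse topological order and multiplies each node's upstream gradient by its local gradients, so the gradient at `q` is computed once and then reused for both `x` and `y`.

```python
# Minimal reverse-mode sketch on a hand-built computation graph.
# Source nodes hold inputs; interior nodes apply an operation; edges
# carry each node's value to the nodes that consume it.

class Node:
    def __init__(self, value=0.0, parents=(), local_grads=()):
        self.value = value              # result of the forward pass
        self.parents = parents          # nodes this one consumes
        self.local_grads = local_grads  # d(self)/d(parent), one per parent
        self.grad = 0.0                 # d(output)/d(self), set by backward()

def add(a, b):
    # d(a + b)/da = 1, d(a + b)/db = 1
    return Node(a.value + b.value, (a, b), (1.0, 1.0))

def mul(a, b):
    # d(a * b)/da = b, d(a * b)/db = a
    return Node(a.value * b.value, (a, b), (b.value, a.value))

def backward(output, order):
    """order: every node in topological order (inputs first)."""
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in zip(node.parents, node.local_grads):
            # Chain rule: upstream gradient times local gradient.
            # node.grad is already final here and is reused for every parent.
            parent.grad += node.grad * local

x, y, z = Node(2.0), Node(-1.0), Node(3.0)   # source nodes (inputs)
q = add(x, y)                                # q = x + y = 1.0
f = mul(q, z)                                # f = q * z = 3.0
backward(f, [x, y, z, q, f])
print(f.value, x.grad, y.grad, z.grad)       # 3.0 3.0 3.0 1.0
```

Real frameworks record the graph and its topological order automatically; passing `order` by hand just keeps the sketch short.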

CS224n Lecture 3: Backprop and Neural Networks (Aigonna)

Using word vectors, build a context window of word vectors, pass it through a neural network, and feed the result to a logistic classifier. The idea is to classify each word in the context window of its neighboring words: train a logistic classifier on hand-labeled data to classify the center word as {yes, no} for each class, based on the concatenation of the word vectors in the window.

Key learning: the mathematics and practical implementation of how neural networks are trained by backpropagation.

My lecture notes on the NLP series provided by Stanford (notebook `lecture 3 backprop and neural networks.ipynb` in alckasoc's stanford cs224n lecture notes repository).

[Study] Stanford CS224n: Lecture 3, Backprop and Neural Networks. Week 3 task of Stanford CS224n: Natural Language Processing with Deep Learning. Lecture topic: named entity recognition (NER). Named entity recognition identifies and classifies named entities into predefined entity categories such as person names, organizations…
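The window classifier described above can be sketched as follows, assuming a one-hidden-layer network: x is the concatenation of the 2·WINDOW + 1 word vectors, h = f(Wx + b), s = u^T h, and a logistic output σ(s) gives the probability that the center word belongs to the class (for example, LOCATION). Everything here is a toy assumption: the random embeddings, the sizes `DIM`, `WINDOW` and `HIDDEN`, the choice of the sigmoid for f, and the hypothetical helpers `window_vector`, `forward` and `sgd_step`. The gradients in `sgd_step` are derived by hand for a cross-entropy loss on the yes/no label.

```python
import math
import random

random.seed(0)

DIM = 4      # word-vector size (toy assumption)
WINDOW = 2   # words on each side of the center word, so 5 words per window
HIDDEN = 3   # hidden-layer size (toy assumption)

# Hypothetical random embeddings; real ones would come from word2vec/GloVe.
vocab = ["<pad>", "museums", "in", "paris", "are", "amazing"]
emb = {w: [random.uniform(-1, 1) for _ in range(DIM)] for w in vocab}

def window_vector(tokens, center):
    """Concatenate the vectors of tokens[center-WINDOW .. center+WINDOW]."""
    x = []
    for i in range(center - WINDOW, center + WINDOW + 1):
        word = tokens[i] if 0 <= i < len(tokens) else "<pad>"
        x.extend(emb[word])
    return x  # length (2 * WINDOW + 1) * DIM

n_in = (2 * WINDOW + 1) * DIM
W = [[random.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(HIDDEN)]
b = [0.0] * HIDDEN
u = [random.uniform(-0.1, 0.1) for _ in range(HIDDEN)]

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def forward(x):
    z = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    h = [sigmoid(zi) for zi in z]             # h = f(Wx + b), f = sigmoid here
    s = sum(ui * hi for ui, hi in zip(u, h))  # s = u^T h
    return h, sigmoid(s)                      # P(center word is in the class)

def sgd_step(x, label, lr=0.5):
    """One gradient-descent step on cross-entropy loss for a 0/1 label."""
    h, p = forward(x)
    ds = p - label                                             # dL/ds
    delta = [ds * ui * hi * (1 - hi) for ui, hi in zip(u, h)]  # dL/dz
    for i in range(HIDDEN):
        u[i] -= lr * ds * h[i]                # dL/du_i = ds * h_i
        b[i] -= lr * delta[i]                 # dL/db_i = delta_i
        for j in range(n_in):
            W[i][j] -= lr * delta[i] * x[j]   # dL/dW_ij = delta_i * x_j
    return p

tokens = ["museums", "in", "paris", "are", "amazing"]  # toy sentence
x = window_vector(tokens, center=2)                    # center word: "paris"
p_before = forward(x)[1]
for _ in range(50):
    sgd_step(x, label=1.0)                             # hand label: yes
p_after = forward(x)[1]
print(f"P(yes) before: {p_before:.3f}, after: {p_after:.3f}")
```

Windows that run past the sentence edge are filled with a `<pad>` vector; this is one common convention, assumed here rather than taken from the notes.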

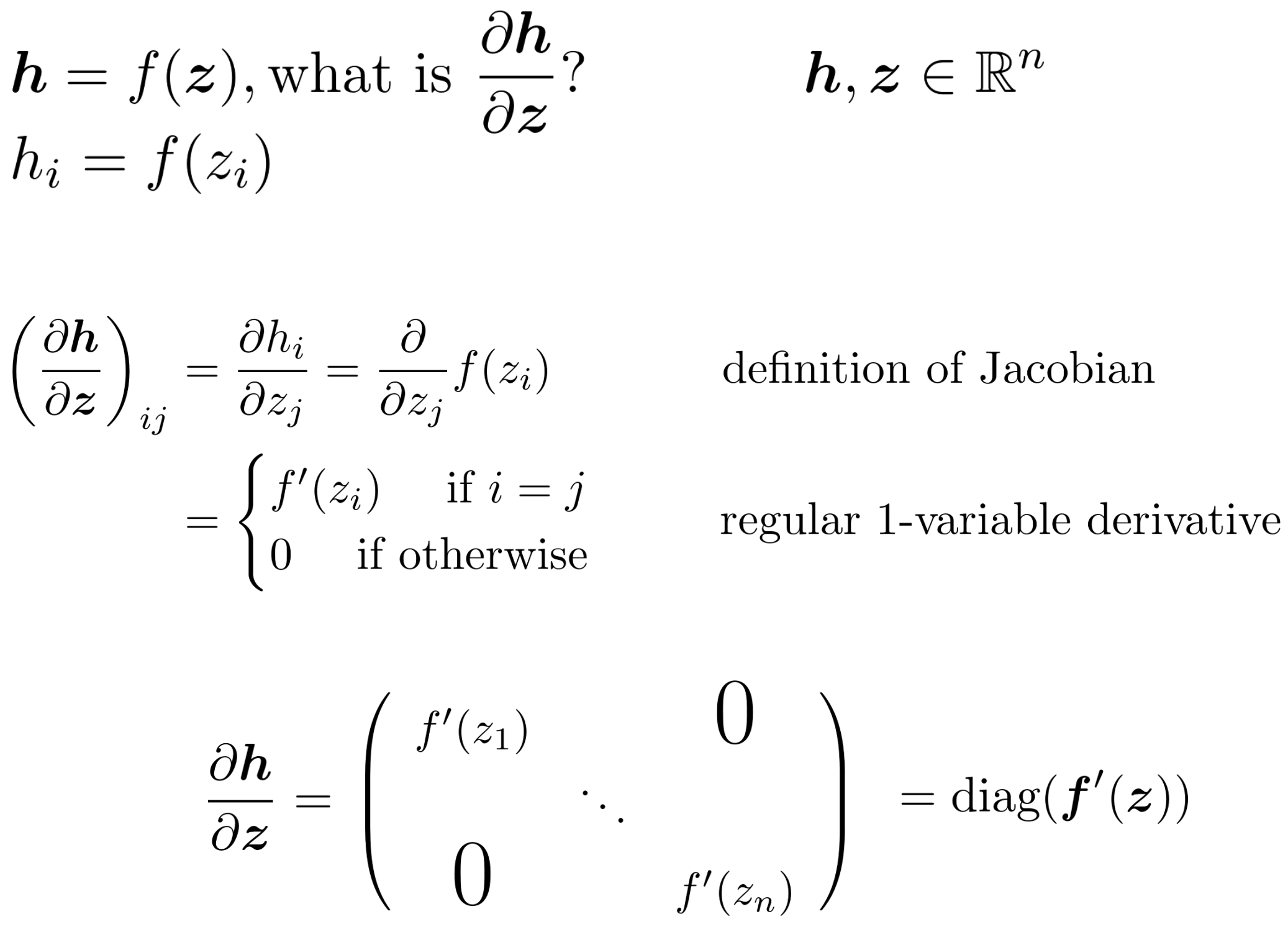

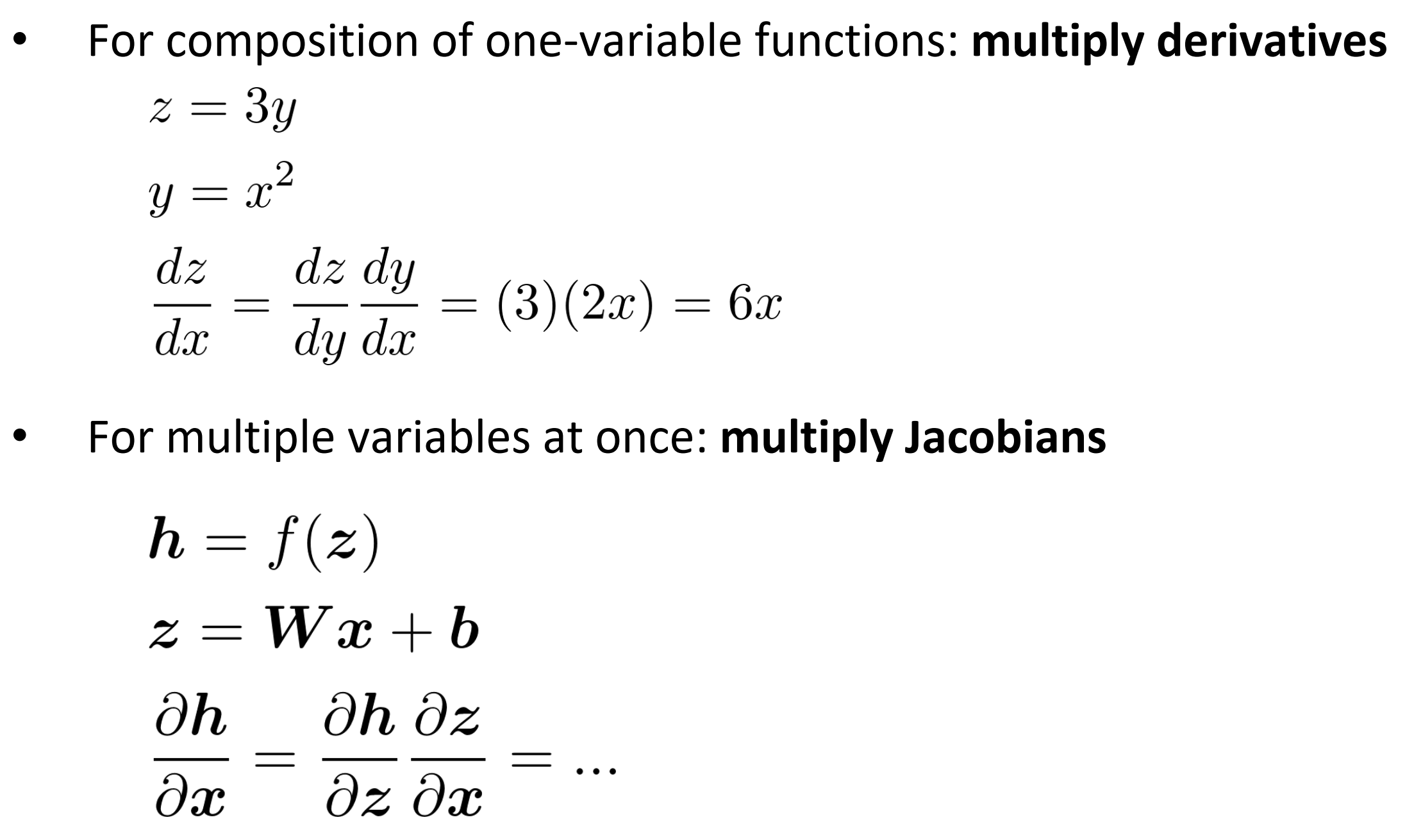

Lecture 3 of CS224n focuses on neural network learning, emphasizing the importance of understanding the mathematics behind neural networks and dependency parsing. It covers intrinsic and extrinsic evaluations of word vectors and the challenges of word sense ambiguity, and it introduces named entity recognition (NER) using logistic classifiers.

This course aims to teach deep learning for NLP. You can find the class videos at: playlist?list=ploromvodv4rosh4v6133s9lfprhjembmj. Lecture notes and assignments are in busekoseoglu's stanford cs224n repository on GitHub.

Christopher Manning, Lecture 3: Neural net learning: gradients by hand (matrix calculus) and algorithmically (the backpropagation algorithm). Assignment 2 is all about making sure you really understand the math of neural networks; then we'll let the software do it!
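A standard way to confirm a gradient worked out by hand is a numerical gradient check. The sketch below assumes a score s = u^T f(Wx + b) with f the logistic sigmoid, uses the matrix-calculus result ∂s/∂W = δx^T with δ = u ∘ f′(z), and compares it entry by entry against central finite differences. The function names, sizes and random inputs are hypothetical choices for illustration.

```python
import math
import random

random.seed(1)

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def score(W, b, u, x):
    """s = u^T f(Wx + b), with f = logistic sigmoid (assumed nonlinearity)."""
    h = [sigmoid(sum(w * xj for w, xj in zip(row, x)) + bi)
         for row, bi in zip(W, b)]
    return sum(ui * hi for ui, hi in zip(u, h))

def grad_W_by_hand(W, b, u, x):
    """Matrix-calculus result: ds/dW = delta x^T, delta = u * f'(z)."""
    delta = []
    for row, bi, ui in zip(W, b, u):
        h = sigmoid(sum(w * xj for w, xj in zip(row, x)) + bi)
        delta.append(ui * h * (1 - h))        # sigmoid'(z) = f(z) (1 - f(z))
    return [[d * xj for xj in x] for d in delta]  # outer product delta x^T

def grad_W_numeric(W, b, u, x, eps=1e-5):
    """Central differences (s(W_ij + eps) - s(W_ij - eps)) / (2 eps)."""
    G = []
    for i in range(len(W)):
        G.append([])
        for j in range(len(W[i])):
            old = W[i][j]
            W[i][j] = old + eps
            plus = score(W, b, u, x)
            W[i][j] = old - eps
            minus = score(W, b, u, x)
            W[i][j] = old                     # restore the original entry
            G[i].append((plus - minus) / (2 * eps))
    return G

n_hidden, n_in = 3, 5
W = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b = [random.gauss(0, 1) for _ in range(n_hidden)]
u = [random.gauss(0, 1) for _ in range(n_hidden)]
x = [random.gauss(0, 1) for _ in range(n_in)]

analytic = grad_W_by_hand(W, b, u, x)
numeric = grad_W_numeric(W, b, u, x)
max_err = max(abs(a - n)
              for ra, rn in zip(analytic, numeric)
              for a, n in zip(ra, rn))
print(f"max abs difference: {max_err:.2e}")   # expected to be tiny
```

If the hand derivation were wrong (for example, missing the f′(z) factor), the difference would be on the order of the gradient entries themselves rather than tiny. Numerical checks are slow, so they are typically used only to test code, not during training.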
