Encryption vs. Data Tokenization: Which Is Better for Securing Your …

In previous “take charge of your data” posts, we showed you how to gain visibility into your data using Cloud Data Loss Prevention (DLP) and how to protect sensitive data by incorporating …

Protect sensitive data with tokenization: learn how data tokenization works, its benefits, real-world examples, and how to implement it for security and compliance.


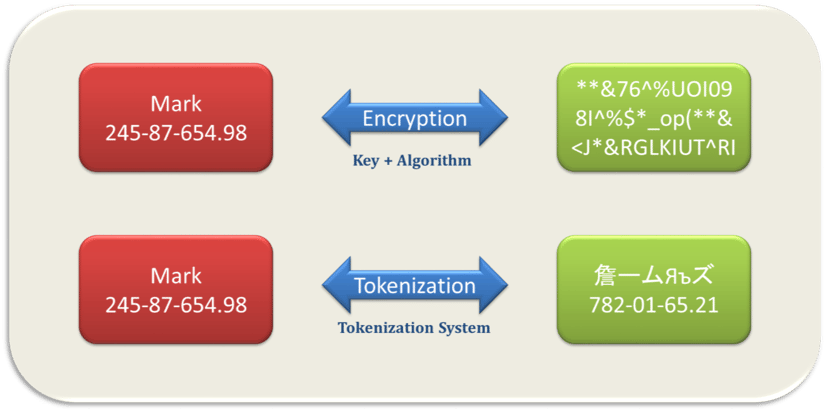

Data Tokenization And Encryption Using Go

To tokenize or encrypt data with Baffle, you use Baffle Manager to define a data protection policy. The policy creates a privacy schema that maps RDS database columns to a given encryption key and protection mode.

Data tokenization is the process of protecting sensitive data by replacing it with unique identification symbols, known as tokens. These tokens have no meaningful value on their own and cannot be reverse-engineered to reveal the original confidential data.

Encryption protects data through a reversible transformation: anyone holding the right key can recover the original value. Tokenization replaces sensitive data with unique tokens for storage and processing while retaining referential integrity, so the same input always maps to the same token. Masking, on the other hand, modifies data to protect privacy while preserving its structure. However, all three techniques …

Data security is crucial for businesses, and two popular methods of protecting sensitive data are tokenization and encryption. Tokenization substitutes real values with tokens, maintaining referential integrity and future-proofing security.
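The tokenization and masking ideas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `TokenVault`, the `tok_` prefix, and the `mask` helper are hypothetical names, the "vault" is a plain in-memory dictionary rather than a hardened, access-controlled store, and nothing here reflects how Baffle or any other product implements tokenization.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault (illustration only, not production code)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so equal inputs map to equal tokens
        # (referential integrity: joins and lookups on the token still work).
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it carries no information about the value;
        # the only way back is a lookup in the vault.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value.
        return self._token_to_value[token]


def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Irreversibly hide all but the last `visible` characters, keeping the length."""
    if visible <= 0:
        return fill * len(value)
    return fill * max(len(value) - visible, 0) + value[-visible:]
```

For example, `mask("4111111111111111")` returns `"************1111"`, and calling `vault.tokenize("123-45-6789")` twice returns the same `tok_…` string both times. The contrast with masking is the point of the sketch: the vault can reverse a token, while a masked value cannot be restored by anyone.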

Credit Card Data Encryption Vs Tokenization

Encryption and tokenization both aim to protect data, but they do so in different ways. Before comparing them in detail, it helps to understand the core concept behind each method.

Data tokenization, as a broad term, is the process of replacing raw data with a digital representation. In data security, tokenization replaces sensitive data with randomized, nonsensitive substitutes, called tokens, that have no traceable relationship back to the original data. For example, a sensitive data element such as a bank account number or credit card number is replaced with a token: a randomized string that has no intrinsic value or meaning.
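For credit card numbers, one common approach is to generate a token in the same format as the original, so downstream systems that expect a card-shaped value keep working. The sketch below assumes that approach: the hypothetical `CardTokenVault` class keeps the last four digits, fills the rest with random digits from Python's `secrets` module, and stores the mapping in an in-memory dictionary standing in for a real, access-controlled token vault. It is an illustration only, not a PCI DSS-compliant implementation.

```python
import secrets


class CardTokenVault:
    """Hypothetical format-preserving card tokenizer (illustration only)."""

    def __init__(self):
        self._token_to_pan = {}
        self._pan_to_token = {}

    def tokenize(self, pan: str) -> str:
        # Normalize "4111 1111 1111 1111" to "4111111111111111".
        digits = pan.replace(" ", "")
        if not digits.isdigit() or not 12 <= len(digits) <= 19:
            raise ValueError("expected a 12-19 digit card number")
        # Same card number -> same token (referential integrity).
        if digits in self._pan_to_token:
            return self._pan_to_token[digits]
        while True:
            # Random digits replace everything except the last four, so the
            # token has no traceable relationship to the hidden digits.
            prefix = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
            token = prefix + digits[-4:]
            # Reject the (astronomically unlikely) cases where the token equals
            # the real number or collides with an existing token.
            if token != digits and token not in self._token_to_pan:
                break
        self._token_to_pan[token] = digits
        self._pan_to_token[digits] = token
        return token

    def detokenize(self, token: str) -> str:
        # Recovering the card number requires access to the vault mapping.
        return self._token_to_pan[token]
```

Keeping the last four digits is a deliberate trade-off: those digits stay visible in the token (useful for receipts and customer support), while the remaining digits can only be recovered through the vault.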

