Deep Learning for NLP: An Introduction to Neural Word Embeddings

Word embeddings convert words into compact vectors that serve as input to neural networks, e.g., RNNs. One implementation detail: you may need to learn extra tokens such as “UNK” and “EOS”, which represent out-of-vocabulary words and signify the end of a string, respectively. In this post, we unveil the magic behind word embeddings. We look at what they are, why they have become a standard in the natural language processing (NLP hereinafter) world, and how they are built, and we explore some of the most widely used word embedding algorithms.
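
The UNK/EOS detail can be illustrated with a minimal Python sketch. The code builds a vocabulary from a toy corpus, maps out-of-vocabulary words to an unknown-word token, and appends an end-of-string token. Several parts are illustrative assumptions rather than the API of any particular library:

- the token spellings `<unk>` and `<eos>`
- the helper names `build_vocab` and `encode`
- the `min_count` cutoff

```python
from typing import Dict, List

# Hypothetical special-token spellings; real toolkits choose their own.
UNK, EOS = "<unk>", "<eos>"


def build_vocab(corpus: List[List[str]], min_count: int = 1) -> Dict[str, int]:
    """Map each word seen at least `min_count` times to an integer id.

    Ids 0 and 1 are reserved for the unknown-word and end-of-string tokens.
    """
    counts: Dict[str, int] = {}
    for sentence in corpus:
        for word in sentence:
            counts[word] = counts.get(word, 0) + 1
    vocab = {UNK: 0, EOS: 1}
    for word in sorted(counts):  # sorted so ids are deterministic
        if counts[word] >= min_count:
            vocab[word] = len(vocab)
    return vocab


def encode(sentence: List[str], vocab: Dict[str, int]) -> List[int]:
    """Turn words into ids, mapping unseen words to <unk> and appending <eos>."""
    ids = [vocab.get(word, vocab[UNK]) for word in sentence]
    ids.append(vocab[EOS])
    return ids


if __name__ == "__main__":
    corpus = [["the", "cat", "sat"], ["the", "dog", "sat"]]
    vocab = build_vocab(corpus)
    print(vocab)
    print(encode(["the", "bird", "sat"], vocab))  # [5, 0, 4, 1]: "bird" -> <unk>
```

Reserving fixed ids for the special tokens keeps them stable regardless of the corpus. Each id later selects one row of an embedding matrix, so `<unk>` and `<eos>` get vectors that are learned just like those of ordinary words.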

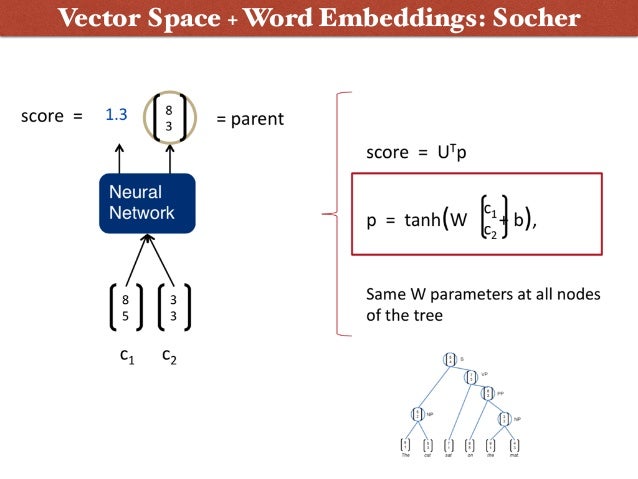

We review modern NLP frameworks, starting with a methodology that can be seen as the beginning of modern NLP: word embeddings. Modern NLP techniques generally use neural networks as their statistical architecture. Embeddings are mathematical representations of words, sentences and (sometimes) whole documents. Word2vec “vectorizes” words, and by doing so it makes natural language computer-readable: we can start to perform powerful mathematical operations on words to detect their similarities. So a neural word embedding represents a word with numbers. It’s a simple, yet unlikely, translation. Word embeddings give us a way to use an efficient, dense representation in which similar words have a similar encoding. Importantly, you do not have to specify this encoding by hand. An embedding is a dense vector of floating-point values (the length of the vector is a parameter you specify).
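
To make “a dense vector whose length you specify” concrete, here is a sketch of an embedding table in plain Python, with one row of `dim` floats per word id. The helper names and the small uniform random initialization are assumptions, standing in for whatever a framework's embedding layer actually does. Training, which is what makes similar words end up with similar rows, is omitted. The last function shows why the lookup is efficient: indexing a row yields exactly the same vector as multiplying a sparse one-hot vector by the table.

```python
import random
from typing import List


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Create one row of `dim` floats per word id (assumed small random init)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(vocab_size)]


def lookup(table: List[List[float]], word_id: int) -> List[float]:
    """Dense lookup: the embedding is simply row `word_id` of the table."""
    return table[word_id]


def one_hot_times_table(table: List[List[float]], word_id: int) -> List[float]:
    """Equivalent sparse route: a one-hot row vector multiplied by the table."""
    vocab_size, dim = len(table), len(table[0])
    one_hot = [1.0 if i == word_id else 0.0 for i in range(vocab_size)]
    return [sum(one_hot[i] * table[i][j] for i in range(vocab_size)) for j in range(dim)]


if __name__ == "__main__":
    table = make_embedding_table(vocab_size=6, dim=4)
    print(len(lookup(table, 3)))  # 4, the dimension we chose
    print(lookup(table, 3) == one_hot_times_table(table, 3))  # True
```

The one-hot product touches every row of the table, while the lookup touches only one. This is why embedding layers are implemented as an index operation rather than a matrix multiplication.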

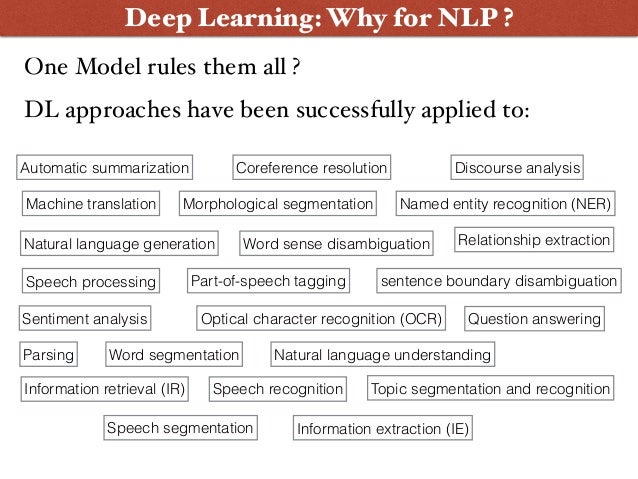

The discussion covers the challenges and advancements in natural language processing, focusing particularly on deep learning techniques such as word embeddings and recurrent neural networks. It also surveys the application of deep learning techniques in NLP, with a focus on the tasks where deep learning is having the strongest impact. Word embedding in NLP is a technique in which individual words are represented as real-valued vectors that capture inter-word semantics. These vectors live in a space of much lower dimension than the vocabulary size. Each word is represented by a real-valued vector with tens or hundreds of dimensions.
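
Similarity between embedding vectors is commonly measured with cosine similarity, the cosine of the angle between two vectors. The sketch below computes it and finds a word's nearest neighbour. The three-dimensional vectors are hand-picked toy values for illustration, not the output of Word2vec or any trained model, and `most_similar` is a hypothetical helper name.

```python
import math
from typing import Dict, List


def cosine_similarity(u: List[float], v: List[float]) -> float:
    """cos(u, v) = (u . v) / (|u| |v|); 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def most_similar(word: str, embeddings: Dict[str, List[float]]) -> str:
    """Return the other word whose vector is closest in angle to `word`'s vector."""
    query = embeddings[word]
    candidates = [w for w in embeddings if w != word]
    return max(candidates, key=lambda w: cosine_similarity(query, embeddings[w]))


# Hand-picked toy vectors for illustration only; NOT trained embeddings.
toy = {
    "cat": [0.8, 0.6, 0.1],
    "dog": [0.7, 0.7, 0.2],
    "car": [0.1, 0.2, 0.9],
}

if __name__ == "__main__":
    print(round(cosine_similarity(toy["cat"], toy["dog"]), 3))  # 0.985
    print(most_similar("cat", toy))  # dog
```

Cosine similarity ignores vector length and compares only direction. This is one reason it is a common choice for comparing word vectors whose norms can vary with word frequency.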
