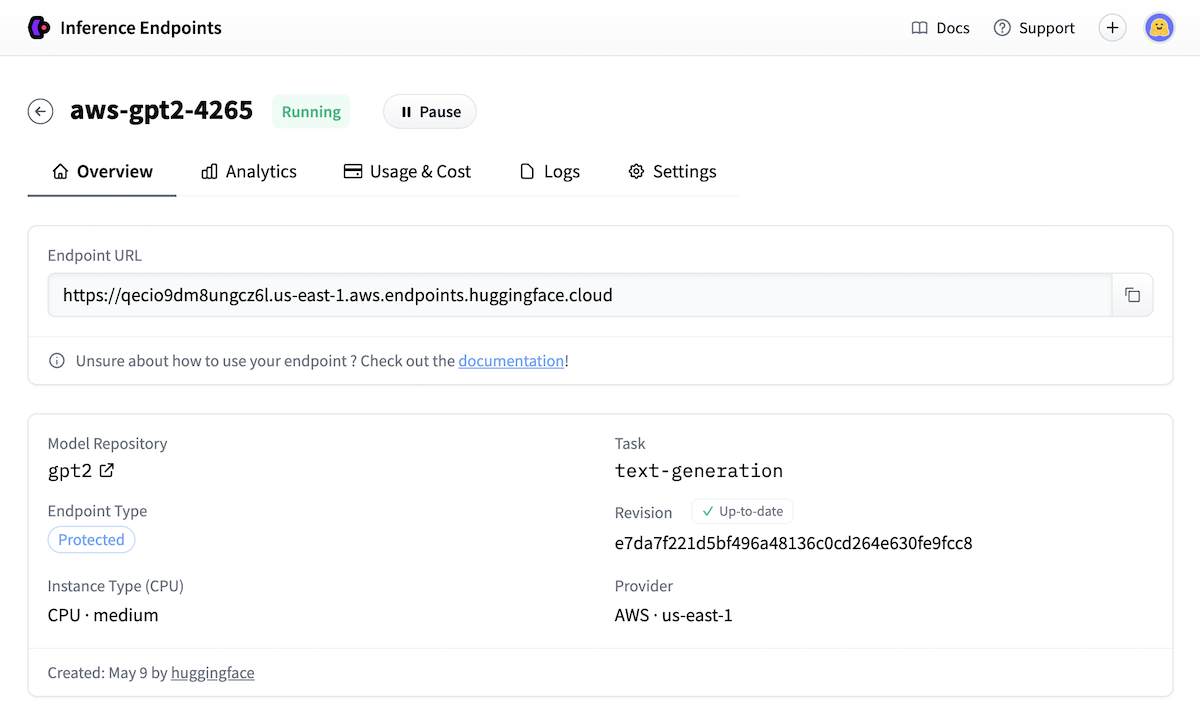

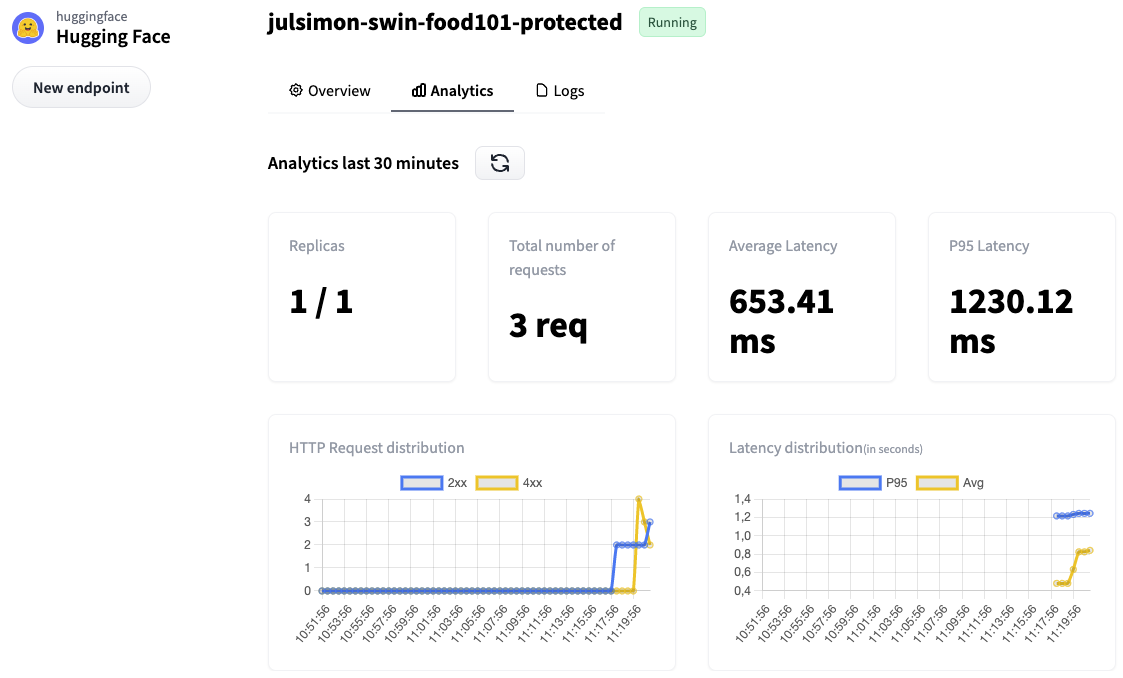

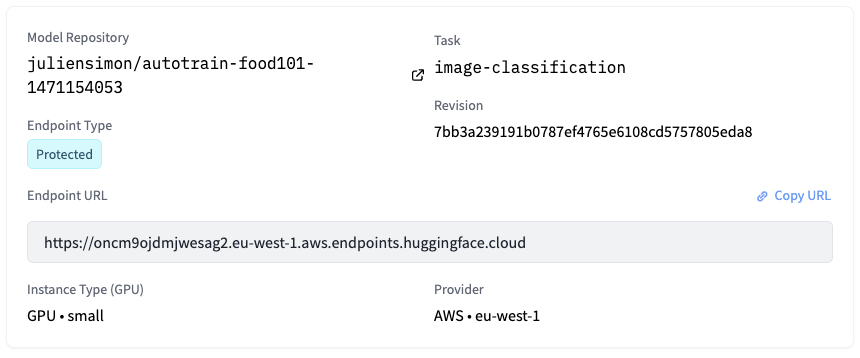

Hugging Face Inference Endpoints

In this blog post, we will show you how to deploy open-source LLMs to Hugging Face Inference Endpoints, our managed SaaS solution that makes it easy to deploy models. Additionally, we will teach you how to stream responses and test the performance of our endpoints. So let's get started!

In this blog post, we showed you how to deploy open LLMs with vLLM on Hugging Face Inference Endpoints using a custom container image. We used the `huggingface_hub` Python library to programmatically create and manage Inference Endpoints.
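The programmatic workflow described above can be sketched roughly as follows. This is a minimal sketch, not the post's own code. It assumes `huggingface_hub` is installed and that you are authenticated. The vendor, region and instance values are placeholders. It also deploys with the default container rather than the custom vLLM image the post used. For that case, `create_inference_endpoint` accepts a `custom_image` argument, which this sketch leaves out.

```python
from typing import Any, Dict


def endpoint_settings(repository: str) -> Dict[str, Any]:
    """Keyword arguments for huggingface_hub.create_inference_endpoint.

    The hardware values are placeholders (one NVIDIA A10G on AWS us-east-1);
    check which vendors, regions and instance types your account can use.
    """
    return {
        "repository": repository,
        "framework": "pytorch",
        "task": "text-generation",
        "accelerator": "gpu",
        "vendor": "aws",
        "region": "us-east-1",
        "instance_size": "x1",
        "instance_type": "nvidia-a10g",
        "type": "protected",  # requests must carry a Hugging Face token
    }


def deploy(name: str, repository: str):
    """Create an Inference Endpoint and block until it is running."""
    # Imported lazily so endpoint_settings() can be inspected without the library installed.
    from huggingface_hub import create_inference_endpoint

    endpoint = create_inference_endpoint(name, **endpoint_settings(repository))
    endpoint.wait()  # polls the endpoint status until it is running
    return endpoint
```

For example, `deploy("my-llm-endpoint", "HuggingFaceH4/zephyr-7b-beta")` uses placeholder names and returns an `InferenceEndpoint` object. You can send requests to its `url`. When you are finished, `endpoint.pause()` stops the endpoint, and `endpoint.delete()` removes it.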

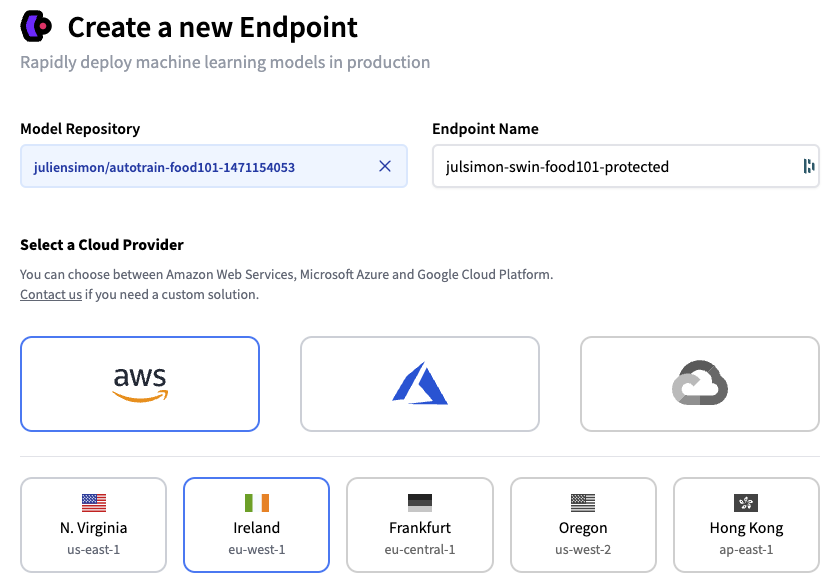

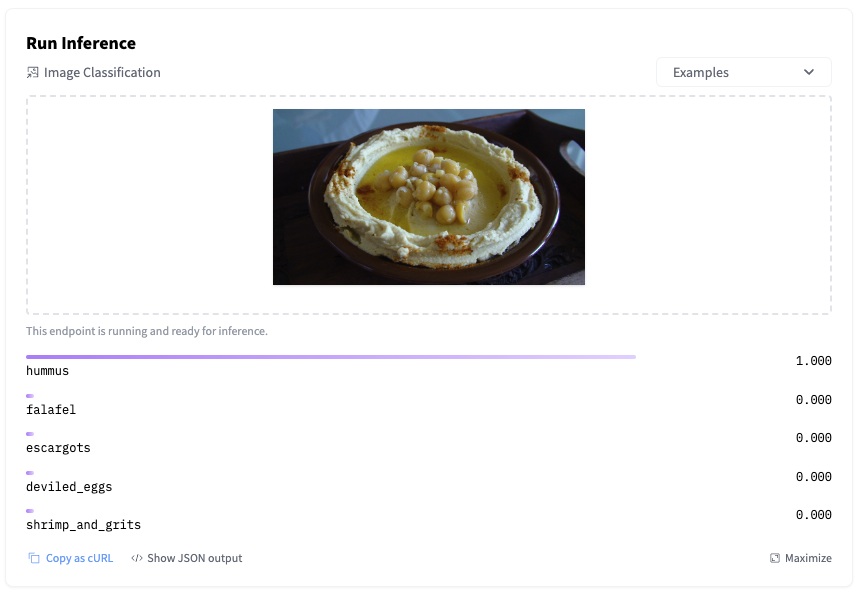

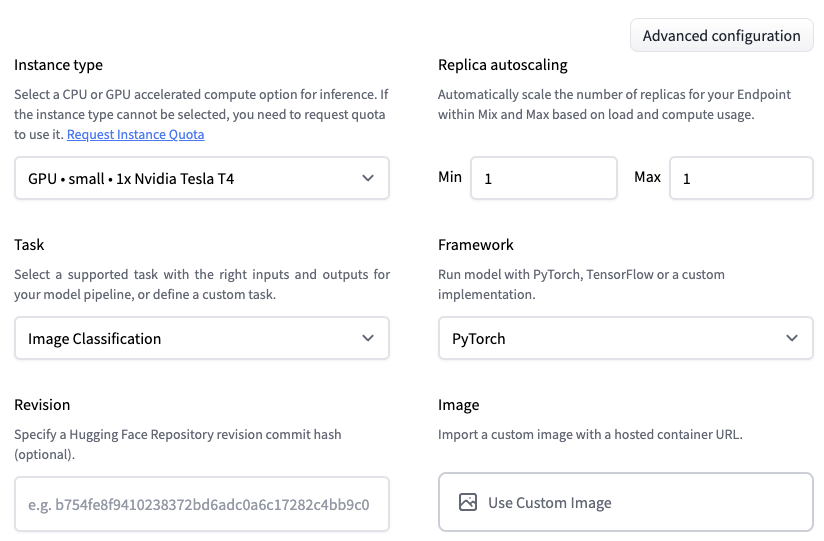

Getting Started With Hugging Face Inference Endpoints

In this article, you have learned how to deploy your model using Inference Endpoints, the user-friendly solution developed by Hugging Face. Additionally, you have learned how to build an …

In this blog post, we showed you how to deploy open-source LLMs using Hugging Face Inference Endpoints. We also showed how to control text generation with advanced parameters and how to stream responses to a Python or JavaScript client to improve the user experience.

Explore the deployment options for custom LLMs with a focus on Hugging Face Inference Endpoints, and learn the step-by-step process.

Deploying Meta Llama 3 8B Instruct from Hugging Face using Friendli Dedicated Endpoints: with Friendli Dedicated Endpoints, you can spin up scalable, secure, and highly available inference deployments without extensive infrastructure expertise or significant capital expenditure.
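Generation parameters and streaming could look roughly like this on the client side. This is a sketch under an assumption: the endpoint runs Text Generation Inference (TGI), which the text above does not state. TGI takes a JSON body with `inputs` and a `parameters` object. It streams server-sent events whose `data:` payloads carry a `token` object. The helper names `build_generate_payload` and `iter_stream_tokens` are hypothetical.

```python
import json
from typing import Iterable, Iterator, List, Optional


def build_generate_payload(prompt: str, max_new_tokens: int = 256, temperature: float = 0.7,
                           top_p: float = 0.95, repetition_penalty: float = 1.1,
                           stop: Optional[List[str]] = None) -> dict:
    """Build a TGI-style request body (assumed schema: inputs + parameters)."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 when sampling")
    return {
        "inputs": prompt,
        "parameters": {
            "do_sample": True,  # sample instead of greedy decoding
            "max_new_tokens": max_new_tokens,  # upper bound on generated tokens
            "temperature": temperature,  # lower = more deterministic output
            "top_p": top_p,  # nucleus sampling cutoff
            "repetition_penalty": repetition_penalty,  # > 1 discourages repeats
            "stop": stop or [],  # stop generating when any of these strings appears
        },
    }


def iter_stream_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield token text from the server-sent-event lines of a TGI-style stream."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        event = json.loads(line[len("data:"):])
        token = event.get("token", {})
        if not token.get("special", False):  # drop special tokens such as end-of-sequence
            yield token.get("text", "")
```

To use it, you might POST the payload to the endpoint's `/generate_stream` route with `requests.post(url, json=payload, headers=headers, stream=True)`. Then pass `response.iter_lines(decode_unicode=True)` to `iter_stream_tokens` and print each token as it arrives. Here `url` and `headers` (including your token) are placeholders.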

In this blog post, we will show you how to deploy open-source embedding models to Hugging Face Inference Endpoints using Text Embeddings Inference (TEI). Inference Endpoints is our managed SaaS solution that makes it easy to deploy models. Additionally, we will teach you how to run large-scale batch requests.

In this comprehensive guide, we'll explore three popular methods for deploying custom LLMs. We'll also walk through the detailed process of deploying models as Hugging Face Inference Endpoints.

Hugging Face Inference Endpoints are managed APIs that make it easy to deploy and scale ML models, either directly from the Hugging Face Hub or from your own models. Key benefits include cost savings from scale-to-zero, autoscaling infrastructure, ease of use, customization, and production-ready deployment.

To deploy our model, we'll merge the fine-tuned QLoRA adapter into the base Falcon-7B model. Next, we'll upload the merged model, along with the original tokenizer and necessary supporting files, to the Hugging Face Hub. We'll also include a custom handler to facilitate inference.
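A custom handler like the one mentioned above might be sketched as follows. It follows the Inference Endpoints convention of an `EndpointHandler` class in `handler.py`. The class is constructed with the repository path and called with the request dictionary. It is a minimal sketch, not the guide's handler. The `pipeline_factory` argument is a hypothetical hook added only so the class can be exercised without downloading a model. The default path assumes the merged model and tokenizer sit in the repository directory and load with a `transformers` text-generation pipeline.

```python
from typing import Any, Callable, Dict, List, Optional


class EndpointHandler:
    """Custom handler sketch for a text-generation model on Inference Endpoints."""

    def __init__(self, path: str = "",
                 pipeline_factory: Optional[Callable[[str], Callable]] = None):
        if pipeline_factory is None:
            from transformers import pipeline  # lazy: only needed for the real deployment

            def pipeline_factory(model_path: str) -> Callable:
                # Load the merged model and its tokenizer from the repository directory.
                return pipeline("text-generation", model=model_path, tokenizer=model_path)

        self.generator = pipeline_factory(path)

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Requests arrive as {"inputs": ..., "parameters": {...}}; parameters are optional.
        inputs = data.pop("inputs", data)
        parameters = data.pop("parameters", None) or {}
        return self.generator(inputs, **parameters)
```

Keeping model loading in `__init__` means the weights load once when the endpoint starts. After that, each `__call__` only runs generation with the per-request parameters.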
