Classification Metrics In Machine Learning Pdf Receiver Operating

This users’ guide will help clinicians understand the available metrics for assessing discrimination, calibration, and the relative performance of different prediction models. This article complements existing users’ guides that address the development and validation of prediction models. Discrimination was evaluated with the area under the ROC curve, and calibration with the integrated calibration index. We investigated how the models’ performance depends on sample size and on class imbalance corrections introduced with random undersampling.
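To make the discrimination metric concrete, the following is a minimal sketch of the area under the ROC curve, computed as the probability that a randomly chosen case with the event receives a higher score than a randomly chosen case without it (ties count as half). The function name `roc_auc` and the toy data are illustrative assumptions, not code from any of the studies quoted here.

```python
def roc_auc(y_true, y_score):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve.

    y_true  : iterable of 0/1 outcome labels (1 = event occurred)
    y_score : iterable of predicted risks or scores, same length
    """
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one event and one non-event")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # event case ranked above non-event case
            elif p == n:
                wins += 0.5   # a tie counts as half a win
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): two non-events followed by two events.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

The pairwise loop is quadratic in the number of cases, which is fine for illustration; a rank-based formula gives the same value more efficiently on large samples.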

Discrimination And Calibration Metrics Of The Machine Learning Based

Calibration assesses how well the model’s predicted probabilities match actual outcomes. For example, if a model predicts a 70% risk of disease for a group of patients, then roughly 70 out of. This section walks the reader through some of the common performance metrics used to evaluate the discrimination and calibration of clinical prediction models based on machine learning (ML). Discrimination is the model's ability to differentiate between those with the event and those without it. Calibration is the agreement between the frequency of observed events and the predicted probabilities.
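The calibration definition above can be illustrated with a simple bin-based check: group cases by predicted risk, then compare the mean predicted risk in each bin with the observed event frequency. This is a sketch under stated assumptions. It uses equal-width bins and an expected calibration error, which is a different (bin-based) summary from the integrated calibration index mentioned in the quoted study; the names `calibration_table` and `expected_calibration_error` are hypothetical.

```python
def calibration_table(y_true, y_prob, n_bins=10):
    """Return (count, mean predicted risk, observed event rate) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, y_prob):
        # Equal-width bins on [0, 1]; p == 1.0 falls into the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((y, p))

    rows = []
    for members in bins:
        if not members:
            continue
        n = len(members)
        mean_pred = sum(p for _, p in members) / n
        observed = sum(y for y, _ in members) / n
        rows.append((n, mean_pred, observed))
    return rows


def expected_calibration_error(y_true, y_prob, n_bins=10):
    """Weighted mean absolute gap between predicted and observed risk."""
    rows = calibration_table(y_true, y_prob, n_bins)
    total = sum(n for n, _, _ in rows)
    return sum(n / total * abs(mean_pred - observed)
               for n, mean_pred, observed in rows)


# Ten hypothetical patients, each given a 70% predicted risk; 7 have the event.
outcomes = [1] * 7 + [0] * 3
risks = [0.7] * 10
print(calibration_table(outcomes, risks))
print(expected_calibration_error(outcomes, risks))
```

In this toy group the observed event rate matches the predicted 70% risk, so the calibration error is essentially zero. If only 3 of the 10 patients had the event, the gap would be about 0.4.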

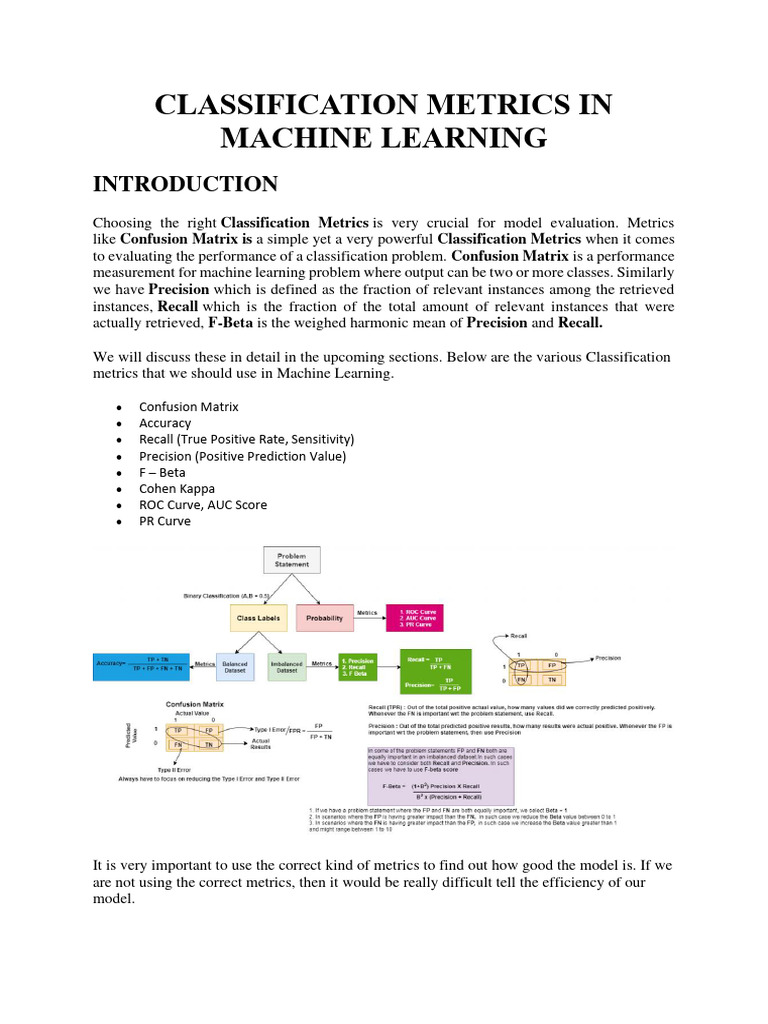

Discrimination Vs Calibration Machine Learning Models Data Science

An empiric framework incorporating multiple metrics of discrimination, calibration, and clinical usefulness permits selection of the optimal readmission risk modeling strategy, including the method of calibration, to achieve target performance with a minimum of validation data. In this survey, we review the state-of-the-art calibration methods and their principles for performing model calibration. First, we start with the definition of model calibration and explain the root causes of model miscalibration. Then we introduce the key metrics that can measure this aspect. We identify 82 major metrics, which can be grouped into four classifier families (point-based, bin-based, kernel- or curve-based, and cumulative) and an object detection family. For each metric, we provide equations where available, facilitating implementation and comparison by future researchers. We investigated multiple ML classifiers, used Shapley additive explanations (SHAP) for model interpretation, and determined model prediction bias with two metrics, disparate impact, and equal.
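As an illustration of the first bias metric named above, disparate impact is commonly defined as the ratio of the favorable-prediction rate in an unprivileged group to that in a privileged group, with values near 1 indicating similar treatment. The sketch below assumes that common definition, which may differ in detail from the one used in the quoted study; the function name `disparate_impact`, the group labels, and the data are hypothetical.

```python
def disparate_impact(y_pred, group, unprivileged, privileged):
    """Ratio of favorable-prediction rates: unprivileged / privileged.

    y_pred : iterable of 0/1 predictions (1 = favorable prediction, by assumption)
    group  : iterable of group labels, same length as y_pred
    """
    def favorable_rate(label):
        preds = [p for p, g in zip(y_pred, group) if g == label]
        if not preds:
            raise ValueError(f"no cases found for group {label!r}")
        return sum(preds) / len(preds)

    privileged_rate = favorable_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable predictions")
    return favorable_rate(unprivileged) / privileged_rate


# Hypothetical predictions for two groups of four cases each.
predictions = [1, 0, 0, 0, 1, 1, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(disparate_impact(predictions, groups, unprivileged="a", privileged="b"))
```

Here group "a" receives a favorable prediction in 1 of 4 cases and group "b" in 3 of 4, so the ratio is one third, well below 1.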