Regularization is an important technique in machine learning that helps improve model accuracy by preventing overfitting, which happens when a model learns the training data too well, including its noise and outliers, and then performs poorly on new data.

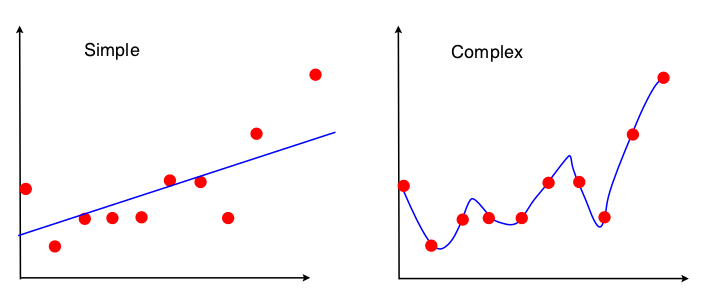

In this article, we discuss what regularization is in data science and machine learning, and look at techniques that help reduce errors in predictive algorithms (and not only in them). To understand regularization, we first need to consider explicitly the loss (cost) functions for the parametric statistical models we have been using. A loss function quantifies the error between a single predicted outcome and the corresponding observed outcome within a statistical model. Comparing the mean squared errors of the two models, we observe that the error on the training set is lowest for the overfitting curve, but there is a significant drop in error observed on the ... Regularization is an important strategy for improving machine learning models, and it is closely tied to the bias-variance trade-off and to the role the value of lambda plays in regularization algorithms. Machine learning is a field projected to grow over the next decade.
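To make the loss function and the train-versus-test comparison concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the data points are invented, `fit_line` is an ordinary least-squares line, and `fit_memorizer` (a 1-nearest-neighbour lookup) merely stands in for "the overfitting curve", which the text does not specify.

```python
def squared_error(y_observed, y_predicted):
    """Loss for a single observation: the squared residual."""
    return (y_observed - y_predicted) ** 2


def mean_squared_error(ys_observed, ys_predicted):
    """Cost over a data set: the average of the per-observation losses."""
    losses = [squared_error(y, p) for y, p in zip(ys_observed, ys_predicted)]
    return sum(losses) / len(losses)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Overfitting stand-in: return the y of the closest training x."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]


def evaluate(model, xs, ys):
    """Mean squared error of a fitted model on the given points."""
    return mean_squared_error(ys, [model(x) for x in xs])


# Hypothetical data: noisy training samples of y = 2x, noise-free test points.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [0.5, 1.4, 4.6, 5.5, 8.5]
test_x = [0.4, 1.6, 2.4, 3.6]
test_y = [0.8, 3.2, 4.8, 7.2]

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

line_train_mse = evaluate(line, train_x, train_y)
line_test_mse = evaluate(line, test_x, test_y)
memo_train_mse = evaluate(memorizer, train_x, train_y)
memo_test_mse = evaluate(memorizer, test_x, test_y)

print("line      train/test MSE:", line_train_mse, line_test_mse)
print("memorizer train/test MSE:", memo_train_mse, memo_test_mse)
```

On this toy data the memorizing model reproduces every training point, so its training error is zero, yet its error on the held-out points is larger than that of the simple line. That gap between training and test error is the symptom of overfitting that regularization is meant to reduce.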

This article explores the concept of regularization, the different types of regularization techniques, and best practices for selecting the regularization parameter to build robust machine learning models. Using linear regression as the framework, it covers overfitting, underfitting, and the bias-variance trade-off, which together provide a foundation for understanding model performance. Regularization in machine learning is the process of adding constraints on a model's parameters. The two main regularization techniques are ridge regularization (also known as Tikhonov regularization, although the latter is more general) and lasso regularization. Both ridge regression and lasso show how regularization can help improve the accuracy of regression models.
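The difference between the ridge and lasso constraints can be seen in the simplest possible case: one feature, no intercept. The sketch below is an illustration under stated assumptions, not a general implementation. The function names, the toy data, and the exact scaling of the penalty (`lam * w**2` and `lam * abs(w)` added to the sum of squared residuals) are all choices made here; libraries often scale the penalty differently.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold, clipping at zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def ridge_weight(xs, ys, lam):
    """Minimize sum((y - w*x)**2) + lam * w**2 for a single weight w.

    Setting the derivative to zero gives w = Sxy / (Sxx + lam).
    """
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return sxy / (sxx + lam)


def lasso_weight(xs, ys, lam):
    """Minimize sum((y - w*x)**2) + lam * abs(w) for a single weight w.

    The minimizer is a soft-thresholded version of the least-squares
    solution: w = soft_threshold(Sxy, lam / 2) / Sxx.
    """
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return soft_threshold(sxy, lam / 2) / sxx


# Hypothetical data lying exactly on y = 2x, so the unpenalized weight is 2.
xs = [1, 2, 3]
ys = [2, 4, 6]

for lam in (0, 14, 28, 56):
    print(lam, ridge_weight(xs, ys, lam), lasso_weight(xs, ys, lam))
```

With `lam = 0` both functions return the ordinary least-squares weight. As `lam` grows, the ridge weight shrinks smoothly but never reaches zero, whereas the lasso weight reaches exactly zero once `lam / 2` is at least as large as the absolute value of `Sxy`. This is why lasso is often described as performing feature selection while ridge only shrinks coefficients.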
