Evaluating the Effectiveness of LLM Evaluators (aka LLM-as-Judge)

LLM evaluators, also known as “LLM-as-a-judge”, are large language models (LLMs) that evaluate the quality of another LLM’s response to an instruction or query. Their growing adoption is partly driven by necessity: LLMs can now solve increasingly complex and open-ended tasks such as long-form summarization, translation, and multi-turn dialogue.

LLM-as-a-judge is a common technique for evaluating LLM-powered products. In this guide, we’ll cover how it works, how to build an LLM evaluator and craft good prompts, and what the alternatives to LLM evaluations are.

Ankush Borse on LinkedIn: Evaluating the Effectiveness of LLM

Judging the Judges: Evaluating Alignment and Vulnerabilities in LLMs-as-Judges evaluates nine LLM evaluators, using the TriviaQA dataset as a knowledge benchmark.

We systematically evaluate the effectiveness of LLMs-as-a-judge using the taxonomy with several major LLM families, including GPT-4, Llama 3, Mistral, and Phi-3, across four levels of increasing prompt instruction.

This paper presents a comprehensive survey of the LLMs-as-judges paradigm from five key perspectives: functionality, methodology, applications, meta-evaluation, and limitations. We begin by providing a systematic definition of LLMs-as-judges and introduce their functionality (why use LLM judges?).

Building an effective LLM-as-a-judge evaluation system involves several key steps, each contributing to a robust and reliable assessment framework. Following these steps and adhering to best practices for LLM evaluators will help you build a robust LLM-as-a-judge evaluation system.
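To make the idea of judge alignment concrete, here is a minimal sketch of one common way to compare an LLM judge's labels with human labels: raw percent agreement, plus Cohen's kappa to correct for agreement expected by chance. The label lists and function names are hypothetical illustrations, not data or code from any of the works summarized above.

```python
from collections import Counter
from typing import Sequence


def percent_agreement(human: Sequence[str], judge: Sequence[str]) -> float:
    """Fraction of items on which the judge's label equals the human label."""
    if len(human) != len(judge) or not human:
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(h == j for h, j in zip(human, judge))
    return matches / len(human)


def cohens_kappa(human: Sequence[str], judge: Sequence[str]) -> float:
    """Chance-corrected agreement between two annotators (Cohen's kappa)."""
    n = len(human)
    observed = percent_agreement(human, judge)
    human_counts = Counter(human)
    judge_counts = Counter(judge)
    labels = set(human) | set(judge)
    # Agreement expected if both annotators labeled independently
    # according to their own label frequencies.
    expected = sum(human_counts[l] * judge_counts[l] for l in labels) / (n * n)
    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six question-answer pairs.
human_labels = ["correct", "correct", "incorrect", "correct", "incorrect", "incorrect"]
judge_labels = ["correct", "correct", "incorrect", "incorrect", "incorrect", "correct"]

agreement = percent_agreement(human_labels, judge_labels)
kappa = cohens_kappa(human_labels, judge_labels)
print(f"percent agreement: {agreement:.3f}, Cohen's kappa: {kappa:.3f}")
```

In this toy example the judge matches the human on four of six items, yet kappa is much lower than the raw agreement because half of that agreement would be expected by chance with balanced labels.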

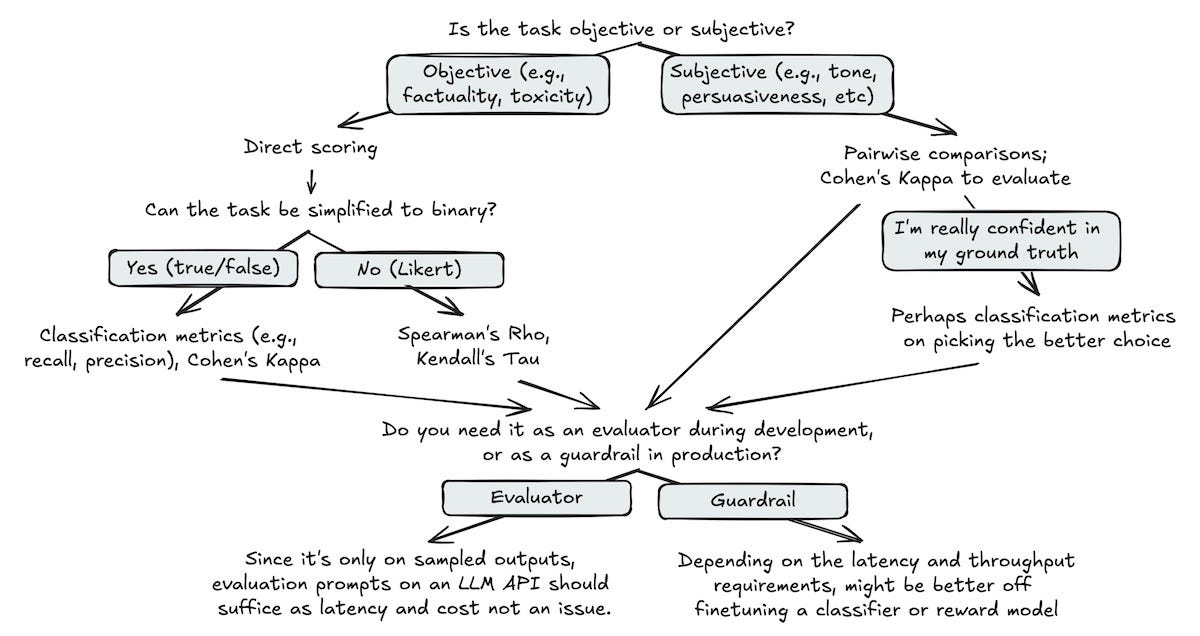

Deep Dive Into LLM Evaluators (aka LLM-as-a-Judge), by Yugank Aman

Evaluating the Effectiveness of LLM Evaluators (aka LLM-as-Judge), Eugene Yan: “After reading this, you’ll gain an intuition on how to apply, evaluate, and operate LLM evaluators. We’ll learn when to apply (i) direct scoring vs. pairwise comparisons, (ii) correlation vs. classification metrics, and (iii) LLM APIs vs. finetuned evaluator.”

LLM-as-a-judge is the process of using LLMs to evaluate LLM (system) outputs. It works by first defining an evaluation prompt based on criteria of your choice, then asking an LLM judge to assign a score based on the inputs and outputs of your LLM system.

LLM-as-a-judge is a reference-free metric that directly prompts a powerful LLM to evaluate the quality of another model’s output. Despite its limitations, this technique is found to consistently agree with human preferences, in addition to being capable of evaluating a wide variety of open-ended tasks in a scalable manner and with minimal ...

The document evaluates the effectiveness of LLM evaluators, which are large language models used to assess the quality of responses from other LLMs. It discusses key considerations, use cases, and various scoring techniques for LLM evaluators, highlighting their advantages over traditional evaluation methods.
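The process described above (define an evaluation prompt, then ask a judge to score) can be sketched roughly as follows, for both direct scoring and pairwise comparison. This is an illustrative sketch under stated assumptions: the prompt wording, the 1 to 5 scale, the `Score:` / `Winner:` answer formats, and all function names are hypothetical, and `stub_judge` stands in for a real LLM API call, which is not shown.

```python
import re
from typing import Callable, Optional

# Hypothetical prompt templates; real evaluators would tune the wording.
DIRECT_TEMPLATE = """You are evaluating a response to an instruction.
Criterion: {criterion}

Instruction:
{instruction}

Response:
{response}

Rate the response on the criterion from 1 (worst) to 5 (best).
Answer in the form "Score: <number>"."""

PAIRWISE_TEMPLATE = """You are comparing two responses to an instruction.
Criterion: {criterion}

Instruction:
{instruction}

Response A:
{response_a}

Response B:
{response_b}

Which response is better on the criterion?
Answer in the form "Winner: <A, B, or tie>"."""


def parse_score(judge_output: str, low: int = 1, high: int = 5) -> Optional[int]:
    """Extract an integer score; return None if it is missing or out of range."""
    match = re.search(r"Score:\s*(\d+)", judge_output)
    if match is None:
        return None
    score = int(match.group(1))
    return score if low <= score <= high else None


def parse_winner(judge_output: str) -> Optional[str]:
    """Extract the pairwise verdict as 'A', 'B', or 'tie'; None if missing."""
    match = re.search(r"Winner:\s*(A|B|tie)\b", judge_output, re.IGNORECASE)
    if match is None:
        return None
    verdict = match.group(1).lower()
    return "tie" if verdict == "tie" else verdict.upper()


def direct_score(judge: Callable[[str], str], instruction: str,
                 response: str, criterion: str) -> Optional[int]:
    """Direct scoring: one response, one absolute score."""
    prompt = DIRECT_TEMPLATE.format(
        criterion=criterion, instruction=instruction, response=response)
    return parse_score(judge(prompt))


def pairwise_compare(judge: Callable[[str], str], instruction: str,
                     response_a: str, response_b: str,
                     criterion: str) -> Optional[str]:
    """Pairwise comparison: two responses, one relative verdict."""
    prompt = PAIRWISE_TEMPLATE.format(
        criterion=criterion, instruction=instruction,
        response_a=response_a, response_b=response_b)
    return parse_winner(judge(prompt))


def stub_judge(prompt: str) -> str:
    """Stand-in for an LLM call (assumption); returns canned answers."""
    if "Winner:" in prompt:
        return "Response A is more complete. Winner: A"
    return "The response is accurate and concise. Score: 4"


print(direct_score(stub_judge, "Summarize the article.", "A short summary.", "conciseness"))
print(pairwise_compare(stub_judge, "Summarize the article.", "Summary one.", "Summary two.", "coverage"))
```

Passing the judge in as a plain callable keeps the prompt construction and output parsing testable without network access. Returning `None` for unparseable output makes judge failures explicit rather than silently counting them as a score.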
