Finetune LLMs on Your Own Consumer Hardware Using Tools from PyTorch

This in-depth tutorial covers fine-tuning LLMs locally with Hugging Face Transformers and PyTorch. We use Meta's new Llama 3.2 1B Instruct model and teach it to predict paper categories. We demonstrate how to fine-tune a 7B-parameter model on a typical consumer GPU (NVIDIA T4, 16 GB) with LoRA and tools from the PyTorch and Hugging Face ecosystems, with a complete, reproducible Google Colab notebook.
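Some back-of-envelope arithmetic shows why a 7B model on a 16 GB T4 calls for LoRA rather than full fine-tuning. This is a minimal sketch, and none of its numbers come from the notebook itself. It assumes:

- LLaMA-style dimensions (hidden size 4096, 32 transformer blocks).
- LoRA rank 8 on the query and value projections.
- The commonly cited estimate of about 16 bytes per parameter for full fine-tuning with Adam in mixed precision.

```python
# Back-of-envelope memory arithmetic for fine-tuning a 7B-parameter model.
# All architecture numbers are ASSUMPTIONS (a LLaMA-style 7B model), not measurements.

GIB = 1024 ** 3


def weight_memory_gib(n_params: int, bytes_per_param: float) -> float:
    """Memory needed to hold n_params values at the given precision, in GiB."""
    return n_params * bytes_per_param / GIB


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA learns the update of a d_out x d_in weight as B @ A, with
    A: rank x d_in and B: d_out x rank. Only A and B are trained."""
    return rank * (d_in + d_out)


N_PARAMS = 7_000_000_000  # assumed total parameter count
HIDDEN = 4096             # assumed hidden size
N_LAYERS = 32             # assumed number of transformer blocks
RANK = 8                  # assumed LoRA rank

# Full fine-tuning with Adam in mixed precision, ~16 bytes/param:
# fp16 weights (2) + fp16 grads (2) + fp32 master weights (4) + two fp32 Adam moments (8).
full_ft = weight_memory_gib(N_PARAMS, 16)
fp16_weights = weight_memory_gib(N_PARAMS, 2)    # frozen base weights in fp16
int4_weights = weight_memory_gib(N_PARAMS, 0.5)  # frozen base weights in 4-bit

# LoRA adapters on the query and value projections (HIDDEN x HIDDEN each) of every block.
per_layer = 2 * lora_trainable_params(HIDDEN, HIDDEN, RANK)
lora_params = N_LAYERS * per_layer

print(f"full fine-tune state: {full_ft:6.1f} GiB")
print(f"fp16 weights only:    {fp16_weights:6.1f} GiB")
print(f"4-bit weights only:   {int4_weights:6.1f} GiB")
print(f"LoRA trainable params: {lora_params:,} "
      f"({100 * lora_params / N_PARAMS:.3f}% of the model)")
```

Under these assumptions:

- Full fine-tuning needs roughly 104 GiB.
- The fp16 weights alone take about 13 GiB.
- LoRA trains about 4.2 million parameters, roughly 0.06% of the model.

Holding the frozen base weights in 4-bit precision, as QLoRA does, is one common way to leave room for activations on a 16 GB card.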

This blog post contains "Chapter 0: TL;DR" of my latest book, A Hands-On Guide to Fine-Tuning Large Language Models with PyTorch and Hugging Face. In this blog post, we'll get right to it and fine-tune a small language model, Microsoft's Phi-3 Mini 4K Instruct, to translate English into Yoda-speak. The book is available on Kindle, in paperback, and as a PDF (Leanpub, Gumroad). In the following section, we will explore model parallelism and data parallelism strategies, which are commonly used to address this issue. When training an LLM, the choice between model …
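Data parallelism rests on one identity: with equal-sized shards and a mean loss, averaging the per-device gradients reproduces the full-batch gradient. This is a minimal pure-Python sketch with no GPUs or PyTorch involved. It assumes a toy one-parameter linear model, made-up data, and a batch size divisible by the number of devices.

```python
# Data parallelism in miniature: each "device" computes the gradient on its own
# shard of the batch, then the gradients are averaged (the all-reduce step).
# Toy model y = w * x with mean-squared-error loss; data is made up.


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """d/dw of mean((w*x - y)^2) over the given examples."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def shard(items: list[float], n_devices: int) -> list[list[float]]:
    """Split a batch into n_devices equal, contiguous shards."""
    size = len(items) // n_devices
    return [items[i * size:(i + 1) * size] for i in range(n_devices)]


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
w = 0.5
n_devices = 4

# Reference: the gradient a single device would compute on the whole batch.
full_batch_grad = mse_grad(w, xs, ys)

# Each replica holds a full copy of w but sees only its slice of the data.
local_grads = [mse_grad(w, sx, sy)
               for sx, sy in zip(shard(xs, n_devices), shard(ys, n_devices))]
averaged_grad = sum(local_grads) / n_devices

print(full_batch_grad, averaged_grad)
```

The two printed values agree up to floating-point rounding. Model parallelism instead splits the model itself (whole layers, or individual weight matrices) across devices, so no single device holds all of `w`. It is the option when the model does not fit on one device.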

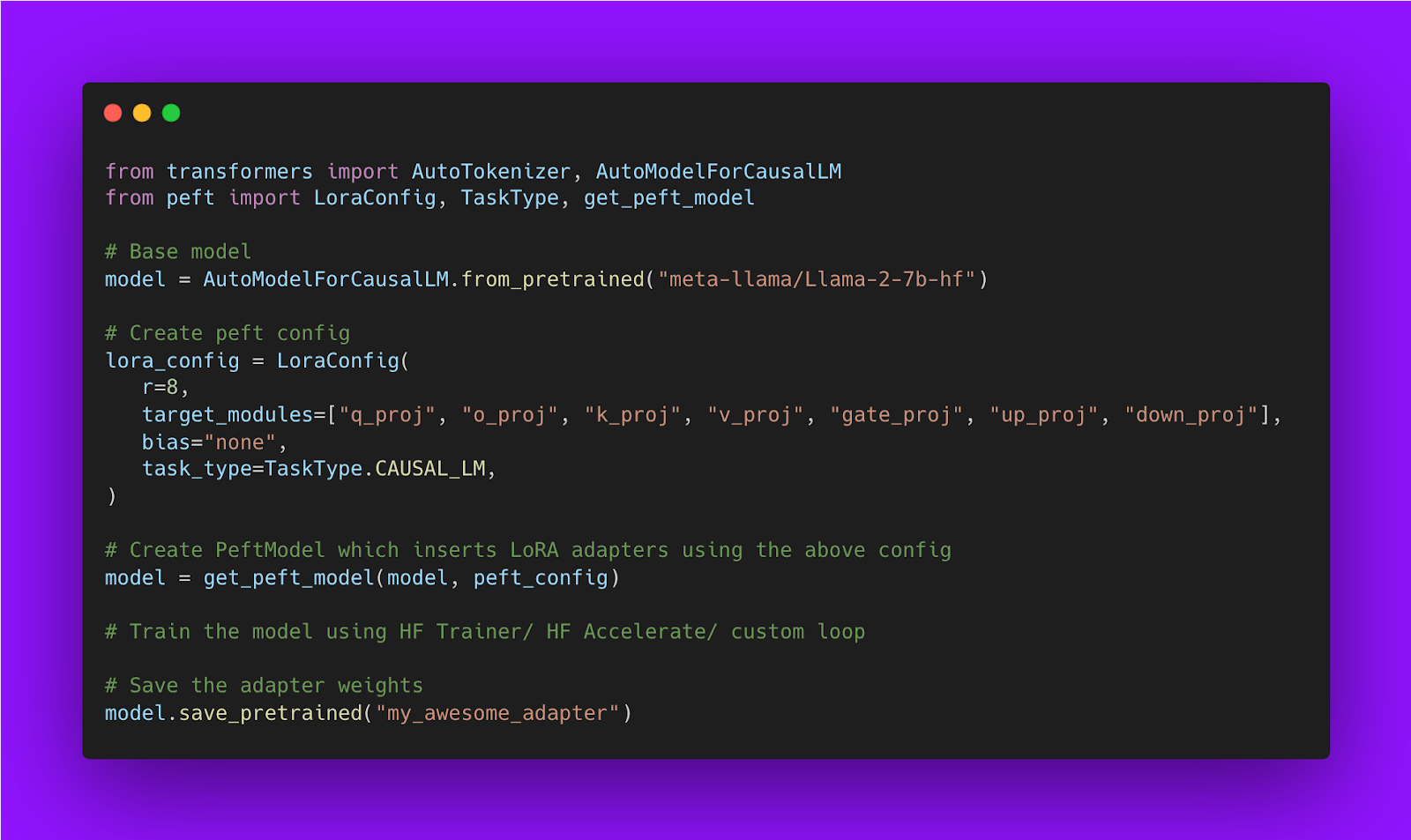

# 🔥 Build Your Custom AI LLM with PyTorch Lightning

## Introduction

Processes and information are at the heart of every business. The vulnerability and opportunity of this moment lie in whether your business can automate its processes using AI and reap the rewards of doing so. ChatGPT, a general-purpose AI, has …

This book is a practical guide to fine-tuning large language models (LLMs), offering both a high-level overview and detailed instructions on how to train these models for specific tasks.

This blog post walks you through how to fine-tune open LLMs using Hugging Face TRL, Transformers, and Datasets in 2024. In the blog, we are going to:

1. Define our use case.

When fine-tuning LLMs, it is important to know your use case and the task you want to solve. Large language models (LLMs) have shown impressive capabilities in industrial applications.
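Knowing the use case usually translates directly into a record format for the training data. This is a minimal sketch built on the paper-category task from the top of this page. It assumes a hypothetical three-label set and placeholder paper text. The `messages` list of role/content dictionaries is the conversational layout that Hugging Face chat templates and TRL's `SFTTrainer` commonly consume, but check the version you use.

```python
import json

# HYPOTHETICAL label set, for illustration only.
CATEGORIES = ["cs.CL", "cs.LG", "cs.CV"]

SYSTEM_PROMPT = (
    "Classify the paper into exactly one of these categories: "
    + ", ".join(CATEGORIES)
    + ". Answer with the category only."
)


def to_chat_example(title: str, abstract: str, label: str) -> dict:
    """Turn one labeled paper into a conversational training record."""
    if label not in CATEGORIES:
        raise ValueError(f"unknown label: {label!r}")
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Title: {title}\n\nAbstract: {abstract}"},
            # The assistant turn is the target the model learns to produce.
            {"role": "assistant", "content": label},
        ]
    }


# Placeholder rows (title, abstract, label); real data would come from a dataset.
raw_rows = [
    ("Example paper A", "Placeholder abstract about language modeling.", "cs.CL"),
    ("Example paper B", "Placeholder abstract about image segmentation.", "cs.CV"),
]

# One JSON object per line (JSON Lines), a common on-disk format for SFT data.
jsonl = "\n".join(json.dumps(to_chat_example(*row)) for row in raw_rows)
print(jsonl)
```

Each line of the resulting JSON Lines text is one training conversation. A tokenizer's chat template (for example via `tokenizer.apply_chat_template` in Transformers) then renders the messages into the model-specific prompt string.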

GitHub: Praveen76 / Finetune Open Source LLMs on Custom Data (Fine Tune …)

Tutorial: LLM Finetune Inference, a Hugging Face Space by chtai