LLM2Vec: Large Language Models Are Secretly Powerful Text Encoders

LLM2Vec is a simple recipe to convert decoder-only LLMs into text encoders. It consists of three steps: 1) enabling bidirectional attention, 2) training with masked next token prediction, and 3) unsupervised contrastive learning. The LLM2Vec class is a wrapper on top of Hugging Face models that supports enabling bidirectionality in decoder-only LLMs, sequence encoding, and pooling operations.
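To make the first step and the pooling operation concrete, here is a minimal sketch in plain Python. It is not the library's implementation: the function names `causal_mask`, `bidirectional_mask`, and `mean_pool` are hypothetical, and mean pooling is assumed here as one possible pooling choice.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j.

    A decoder-only LLM normally lets a token see only itself and
    earlier tokens, so the mask is lower-triangular.
    """
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Every position may attend to every other position."""
    return [[True] * n for _ in range(n)]


def mean_pool(token_vectors, padding_mask):
    """Average the vectors of non-padding tokens into one embedding.

    token_vectors: list of equal-length lists of floats, one per token.
    padding_mask:  list of bools, True for real tokens, False for padding.
    """
    kept = [vec for vec, keep in zip(token_vectors, padding_mask) if keep]
    if not kept:
        raise ValueError("cannot pool a sequence that is all padding")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Usage: three token vectors, the last one is padding and is ignored.
tokens = [[1.0, 0.0], [3.0, 2.0], [9.0, 9.0]]
embedding = mean_pool(tokens, [True, True, False])
```

With the causal mask, the first token's representation never sees the rest of the sentence; with the bidirectional mask, every token's representation can draw on the full context before pooling.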

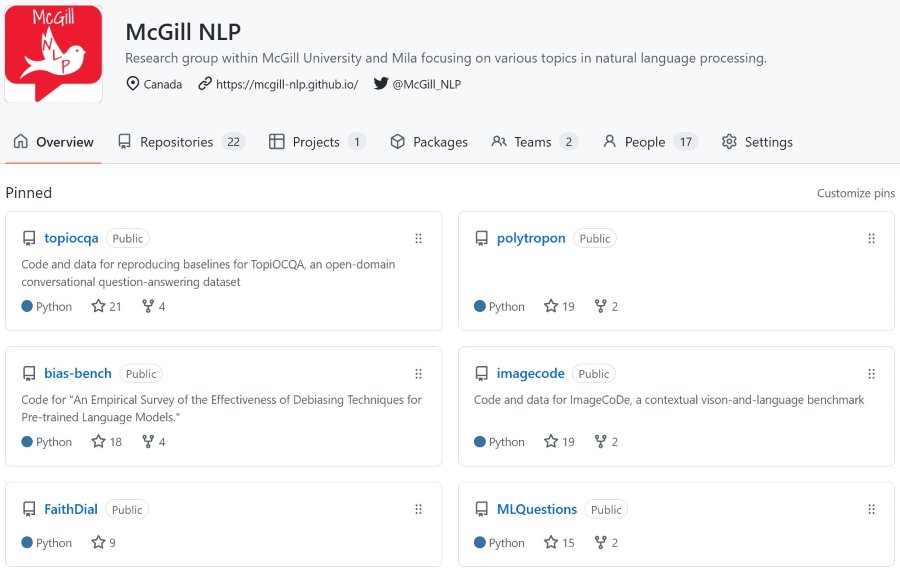

McGill NLP

McGill NLP is a research group within McGill University and Mila focusing on various topics in natural language processing. The LLM2Vec paper introduces a simple unsupervised approach that can transform any decoder-only LLM into a strong text encoder, and the resulting model can be further fine-tuned to achieve state-of-the-art performance. The group's other releases include an evaluation dataset for its NAACL 2025 paper, "Does Generative AI Speak Nigerian Pidgin?: Issues about Representativeness and Bias for Multilingualism in LLMs".

Applying LLM2Vec to the Llama 3 model turns it into a strong text encoder: it maps sentences to vector embeddings, which helps in tasks such as retrieval (finding information) and clustering (grouping similar ideas)¹.
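Step 2 of the recipe, masked next token prediction, can be illustrated with a small data-preparation sketch. It assumes that a masked token at position i is predicted from the model output at position i - 1, which keeps the objective aligned with the next-token training the LLM already had. The mask string, the masking probability, and the function name `mntp_example` are hypothetical choices for illustration.

```python
import random

MASK = "<mask>"  # hypothetical mask token; real tokenizers define their own


def mntp_example(tokens, mask_prob=0.2, seed=0):
    """Build one masked-next-token-prediction training example.

    Returns (inputs, targets):
      inputs  - the token list with some positions replaced by MASK
      targets - dict mapping an output position to the token it should
                predict; the target for a token masked at position i is
                stored under position i - 1 (assumed alignment).
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    targets = {}
    # Position 0 is never masked because it has no previous position.
    for i in range(1, len(tokens)):
        if rng.random() < mask_prob:
            inputs[i] = MASK
            targets[i - 1] = tokens[i]
    return inputs, targets


# Usage: mask every eligible position to make the alignment visible.
inputs, targets = mntp_example(["the", "cat", "sat"], mask_prob=1.0)
```

With `mask_prob=1.0`, `inputs` keeps only the first token unmasked, and `targets` maps position 0 to "cat" and position 1 to "sat".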

TL;DR: We provide LLM2Vec, a simple recipe to transform decoder-only LLMs into powerful text embedding models.

Abstract: Large decoder-only language models (LLMs) are the state-of-the-art models on most of today's NLP tasks and benchmarks.

The library also includes a helper function that returns the length of the input text. The text can be either a string (a single text) or a collection representing several text inputs to the model.
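The length helper described above can be sketched as follows. This is an illustrative version, not the library's code: the name `text_length` is hypothetical, and only two input shapes are assumed (a single string, or a list or tuple of strings).

```python
def text_length(text):
    """Return a length for one input to the encoder.

    A single string counts its characters; a list or tuple of strings
    (several text inputs) counts the characters of all parts together.
    """
    if isinstance(text, str):
        return len(text)
    return sum(len(part) for part in text)


# Usage: order inputs from longest to shortest, a common preparation
# step so that each batch contains texts of similar length.
inputs = ["a short one", ["instruction text", "a much longer passage"], "hi"]
ordered = sorted(inputs, key=text_length, reverse=True)
```

Sorting by length is shown only as a plausible use; the source does not say how the helper's result is consumed.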

Code for LLM2Vec is available in the McGill-NLP/llm2vec repository on GitHub.
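Step 3 of the recipe, unsupervised contrastive learning, can be sketched with an in-batch contrastive (InfoNCE-style) loss. This is a minimal illustration under stated assumptions, not the training code: it assumes each text is encoded twice into two slightly different views (for example, with different dropout noise), and the function names and the temperature value are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(view_a, view_b, temperature=0.05):
    """Mean contrastive loss over a batch.

    view_a[i] and view_b[i] are two embeddings of the same text (the
    positive pair); every other row of view_b acts as a negative.
    """
    losses = []
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Usage: matching views give a loss near zero, mismatched views a large one.
aligned = info_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

Minimizing this loss pulls the two views of the same text together and pushes embeddings of different texts apart, which is what makes the pooled vectors useful for similarity search.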