Hugging Face Model Hub Resources | Restackio

Hugging Face models can be run locally through the `HuggingFacePipeline` class. The Hugging Face Model Hub hosts over 120k models, 20k datasets, and 50k demo apps (Spaces), all open source and publicly available, on an online platform where people can easily collaborate and build ML together. Based on the information you've provided, it seems you're trying to use a local model with the `HuggingFaceEmbeddings` class in LangChain. To do this, pass the path to your local model as the `model_name` parameter when instantiating `HuggingFaceEmbeddings`. Here's an example:
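A minimal sketch of passing a local folder as `model_name`, assuming the `langchain-community` and `sentence-transformers` packages are installed. The helper names `has_model_files`, `looks_like_local_model`, and `load_local_embeddings` are hypothetical, and the expected file list (`config.json` plus `model.safetensors` or `pytorch_model.bin`) is a heuristic assumption rather than a LangChain rule; sharded checkpoints would need a different check.

```python
from pathlib import Path
from typing import Iterable

# Assumption: a typical single-file checkpoint has config.json plus one of these.
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")


def has_model_files(filenames: Iterable[str]) -> bool:
    """Hypothetical heuristic: config.json plus a single-file weights file."""
    names = set(filenames)
    return "config.json" in names and any(w in names for w in WEIGHT_FILES)


def looks_like_local_model(path: str) -> bool:
    """Return True if `path` is a directory that passes has_model_files()."""
    folder = Path(path)
    if not folder.is_dir():
        return False
    return has_model_files(p.name for p in folder.iterdir() if p.is_file())


def load_local_embeddings(path: str, device: str = "cpu"):
    """Build HuggingFaceEmbeddings from a local folder instead of a Hub id."""
    # Lazy import so the helpers above work without LangChain installed.
    from langchain_community.embeddings import HuggingFaceEmbeddings

    if not looks_like_local_model(path):
        raise FileNotFoundError(f"no recognisable model files in {path!r}")
    # model_name is handed to sentence-transformers, which accepts a local path.
    return HuggingFaceEmbeddings(model_name=path, model_kwargs={"device": device})
```

Usage, with a hypothetical folder: `embeddings = load_local_embeddings("./my-local-model")`, after which `embeddings.embed_query("Hello")` returns a list of floats. Because `model_name` is forwarded to sentence-transformers, a local directory is accepted in the same place as a Hub id.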

Hugging Face: The AI Community Building the Future

LangChain is a Python framework that simplifies the integration of AI models into workflows. To install it, use:

Let's wrap a Hugging Face model inside LangChain to enhance its functionality.

Colab code notebook: drp.li m1mbm. Load Hugging Face models locally so that you can use models you can't use via the API endpoints. This video shows you how.

Hi, I want to use Jina AI embeddings completely locally (jinaai/jina-embeddings-v2-base-de · Hugging Face) and have downloaded all files to my machine (into the folder jina embeddings). I am loading the models like this: `from langchain_community.embeddings import HuggingFaceEmbeddings`. However, when I now load the embeddings, I get this message:

By combining Hugging Face and LangChain, one can easily build domain-specific chatbots. The integration of LangChain and Hugging Face enhances natural language processing capabilities by combining Hugging Face's pre-trained models with LangChain's linguistic toolkit.
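A minimal sketch of wrapping a Hugging Face model inside LangChain, assuming `transformers`, `torch`, and `langchain-community` are installed. It builds a `transformers` text-generation pipeline and hands it to `HuggingFacePipeline`; the model can come from a local folder or from the Hub. `resolve_model_source` and `build_llm` are hypothetical helper names.

```python
from pathlib import Path


def resolve_model_source(local_dir: str, hub_id: str) -> str:
    """Prefer a local folder when it exists, otherwise fall back to a Hub id."""
    return local_dir if Path(local_dir).is_dir() else hub_id


def build_llm(model_source: str, max_new_tokens: int = 64):
    """Wrap a transformers text-generation pipeline as a LangChain LLM."""
    # Lazy imports: requires transformers (plus a backend such as torch)
    # and langchain-community at call time only.
    from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline
    from langchain_community.llms import HuggingFacePipeline

    # from_pretrained accepts either a local directory or a Hub model id.
    tokenizer = AutoTokenizer.from_pretrained(model_source)
    model = AutoModelForCausalLM.from_pretrained(model_source)
    pipe = pipeline(
        "text-generation",
        model=model,
        tokenizer=tokenizer,
        max_new_tokens=max_new_tokens,
    )
    # HuggingFacePipeline can wrap an already-built transformers pipeline.
    return HuggingFacePipeline(pipeline=pipe)
```

Usage: `llm = build_llm(resolve_model_source("./gpt2-local", "gpt2"))` followed by `llm.invoke("Once upon a time")`. Here `./gpt2-local` is a hypothetical folder (for example one written with `save_pretrained`), and `gpt2` is a public Hub id used as the fallback. Loading the model and tokenizer yourself keeps the local-versus-Hub decision in one place.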
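The message in the Jina question isn't reproduced, so its cause can't be confirmed. One plausible requirement (an assumption here) is that Jina v2 embedding models ship custom modelling code and must be loaded with `trust_remote_code=True`, which `HuggingFaceEmbeddings` can forward to sentence-transformers through `model_kwargs`. A sketch under that assumption; `jina_model_kwargs` and `load_jina_from_folder` are hypothetical names, and `folder` is whatever directory holds the downloaded files.

```python
def jina_model_kwargs(device: str = "cpu") -> dict:
    """model_kwargs that HuggingFaceEmbeddings passes on to SentenceTransformer."""
    # Assumption: Jina v2 models need their custom code enabled to load.
    return {"device": device, "trust_remote_code": True}


def load_jina_from_folder(folder: str, device: str = "cpu"):
    """Load Jina embeddings from a local folder (hypothetical helper)."""
    # Lazy import: requires langchain-community and sentence-transformers.
    from langchain_community.embeddings import HuggingFaceEmbeddings

    return HuggingFaceEmbeddings(
        model_name=folder,  # local directory containing the downloaded files
        model_kwargs=jina_model_kwargs(device),
    )
```

Depending on the model's config, the custom code may be fetched from a separate Hub repository, so a fully offline load may still need that code to be present in the local cache.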

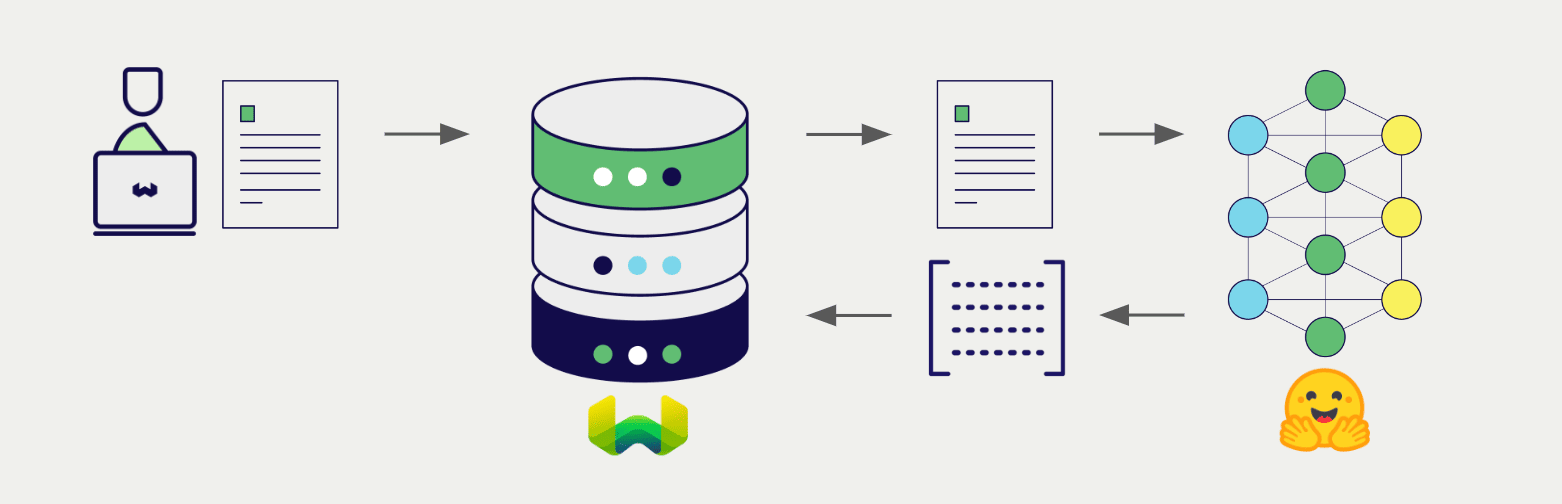

How to Deploy a Robust Hugging Face AI Model for Sentiment Analysis Into

I am trying to use a custom embedding model in LangChain with ChromaDB. I can't seem to find a way to use the base `Embeddings` class without having to use some other provider (like `OpenAIEmbeddings` or `HuggingFaceEmbeddings`).

Let's figure out the best approach for using a locally downloaded embedding model in `HuggingFaceEmbeddings`. To use a locally downloaded embedding model with the `HuggingFaceEmbeddings` class in LangChain, you need to point it to the directory containing all the necessary model files.

First, we need to get a read-only API key from Hugging Face. Now we can use the `HuggingFaceInferenceAPIEmbeddings` class to run open-source embedding models via inference providers, for example `HuggingFaceInferenceAPIEmbeddings(api_key=huggingfacehub_api_token, model_name="sentence-transformers/all-MiniLM-L6-v2")`.

This quick tutorial covers how to use LangChain with a model loaded directly from Hugging Face and with a model saved locally. LangChain is an open-source Python library that helps you combine large…
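For the ChromaDB question: LangChain's base class is `Embeddings` in `langchain_core.embeddings`, and a custom model only needs to implement `embed_documents` and `embed_query`. The sketch below uses a toy hashed bag-of-words model purely as a stand-in for your own model; `HashingEmbeddings` is a hypothetical name, and the `try/except` fallback exists only so the snippet runs where LangChain is not installed.

```python
import hashlib
import math
from typing import List

try:
    from langchain_core.embeddings import Embeddings
except ImportError:  # fallback so the sketch runs without LangChain
    Embeddings = object


class HashingEmbeddings(Embeddings):
    """Toy embedding model: hashed bag-of-words, L2-normalised.

    Stand-in for a real custom model; only embed_documents and
    embed_query are required by LangChain's Embeddings interface.
    """

    def __init__(self, dim: int = 256) -> None:
        self.dim = dim

    def _embed(self, text: str) -> List[float]:
        vec = [0.0] * self.dim
        for token in text.lower().split():
            # md5 gives a stable bucket across runs, unlike built-in hash().
            digest = hashlib.md5(token.encode("utf-8")).hexdigest()
            vec[int(digest, 16) % self.dim] += 1.0
        norm = math.sqrt(sum(x * x for x in vec))
        # Empty input yields the zero vector instead of dividing by zero.
        return [x / norm for x in vec] if norm else vec

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self._embed(t) for t in texts]

    def embed_query(self, text: str) -> List[float]:
        return self._embed(text)
```

Assuming `langchain-community` and `chromadb` are installed, an instance can be passed wherever LangChain expects embeddings, e.g. `Chroma.from_texts(["first doc", "second doc"], embedding=HashingEmbeddings())` with `Chroma` from `langchain_community.vectorstores`. To use a real model, replace `_embed` with a call to it.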
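A sketch of using `HuggingFaceInferenceAPIEmbeddings` with the `api_key` and `model_name` parameters mentioned in the text, assuming `langchain-community` is installed and the read-only token is stored in the `HUGGINGFACEHUB_API_TOKEN` environment variable (an assumption; any secure source works). `get_hf_token` and `build_remote_embeddings` are hypothetical helpers.

```python
import os

DEFAULT_MODEL = "sentence-transformers/all-MiniLM-L6-v2"


def get_hf_token(env_var: str = "HUGGINGFACEHUB_API_TOKEN") -> str:
    """Read a Hugging Face token from the environment (assumed storage)."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"set {env_var} to a read-only Hugging Face token")
    return token


def build_remote_embeddings(token: str, model_name: str = DEFAULT_MODEL):
    """Embeddings computed remotely by Hugging Face instead of on this machine."""
    # Lazy import: requires langchain-community at call time only.
    from langchain_community.embeddings import HuggingFaceInferenceAPIEmbeddings

    return HuggingFaceInferenceAPIEmbeddings(api_key=token, model_name=model_name)
```

Usage: `emb = build_remote_embeddings(get_hf_token())`, then `emb.embed_query("Hello")`. Unlike the local examples, each call sends text to Hugging Face's servers, so it needs network access and a valid token.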