An Introduction to Hugging Face Transformers and Pipelines

In this article, we’ll explore how to use the Hugging Face 🤗 Transformers library, and pipelines in particular. With over 1 million hosted models, Hugging Face is a platform that brings artificial intelligence practitioners together. Its stated mission is to advance and democratize artificial intelligence through open source and open science.

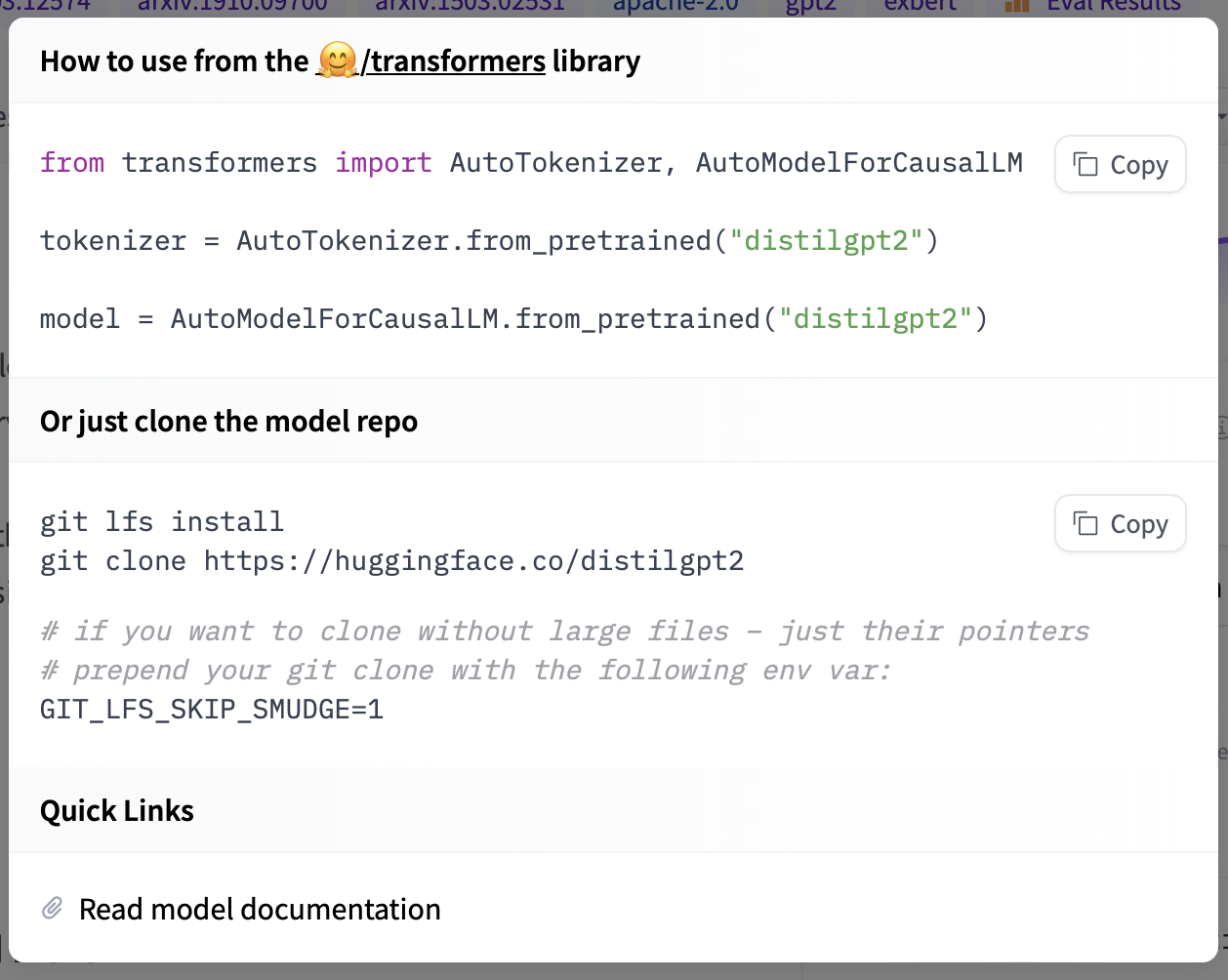

Using 🤗 Transformers at Hugging Face

This page provides practical examples and common usage patterns for the Hugging Face Transformers library across NLP, computer vision, and multimodal tasks. It demonstrates both the high-level pipeline API for quick inference and detailed training workflows, using either the Trainer class or a manual training loop with Accelerate.

Hugging Face Transformers is an open-source Python library that provides thousands of pre-trained models for tasks such as text classification, named entity recognition, summarization, translation, and question answering. In this step-by-step guide, you will learn how to install, configure, and use Hugging Face Transformers in Python to build NLP systems quickly. The concepts and code samples are meant to suit both beginners and more advanced ML practitioners. The article covers the fundamentals of using a pre-trained model from Hugging Face, including loading the model, performing inference, and a hands-on example with code.
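As a rough illustration of the two inference styles described above, the sketch below runs sentiment classification first through the high-level pipeline API and then by loading the tokenizer and model explicitly. The checkpoint name, the helper names (`classify_with_pipeline`, `classify_manually`, `pick_label`, `main`), and the choice of PyTorch as the backend are assumptions made for this example, not something the text above prescribes.

```python
# Assumed checkpoint for illustration; any sequence-classification model
# from the Hugging Face Hub could be substituted here.
DEFAULT_MODEL = "distilbert-base-uncased-finetuned-sst-2-english"


def pick_label(probs: list[float], id2label: dict[int, str]) -> dict:
    """Return the most probable class and its probability (pure helper)."""
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


def classify_with_pipeline(texts: list[str], model_name: str = DEFAULT_MODEL) -> list[dict]:
    """High-level route: the pipeline handles tokenization, inference and decoding."""
    # Imported lazily so the helpers can be defined without the library installed.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis", model=model_name)
    return classifier(texts)  # one {"label": ..., "score": ...} dict per input


def classify_manually(text: str, model_name: str = DEFAULT_MODEL) -> dict:
    """Lower-level route: load tokenizer and model, then run a forward pass."""
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
    model.eval()  # inference mode: disables dropout

    inputs = tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():  # no gradients are needed for inference
        logits = model(**inputs).logits
    probs = torch.softmax(logits, dim=-1)[0].tolist()
    return pick_label(probs, model.config.id2label)


def main() -> None:
    # Downloads the checkpoint on first use, so this needs network access.
    for result in classify_with_pipeline(["I love this library.", "This is confusing."]):
        print(result["label"], round(result["score"], 3))
    print(classify_manually("Pipelines make inference easy."))
```

Calling `main()` runs both routes. The pipeline version is usually enough for quick experiments, while the manual version exposes the logits when you need custom post-processing.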

GitHub Nazarcoder123: Transformers and Pipelines in Hugging Face

In this article, we’ll guide you through the steps to get started with Transformers, troubleshoot common issues, and provide handy analogies to make the code clearer. 🤗 Transformers provides APIs to easily download and train state-of-the-art pretrained models. Using pretrained models can reduce your compute costs and carbon footprint, and save you the time of training a model from scratch. The models can be used across different modalities.

Hugging Face Transformers has lowered the barrier to entry for NLP, letting beginners use state-of-the-art models with ease. We encourage you to explore the models on the Hugging Face Hub, experiment with loading different pre-trained models, and, most importantly, try fine-tuning them on your own datasets. To get started, install the library with pip, then select the right model for your task: BERT for classification, GPT for generation, and so on.
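The "install, then pick a model for the task" step can be sketched roughly as follows. The task-to-checkpoint table is an illustrative assumption (a DistilBERT checkpoint stands in for the BERT family and `gpt2` for the GPT family), and `checkpoint_for`, `build_pipeline`, and `main` are hypothetical helper names introduced only for this example.

```python
# Install the library and a backend first (shell command, not Python):
#   pip install transformers torch

# Illustrative mapping from pipeline task names to Hub checkpoints.
# These particular choices are assumptions, not recommendations from the text.
TASK_CHECKPOINTS = {
    "text-classification": "distilbert-base-uncased-finetuned-sst-2-english",
    "text-generation": "gpt2",
}


def checkpoint_for(task: str) -> str:
    """Look up the checkpoint assumed for a task, failing early on unknown tasks."""
    try:
        return TASK_CHECKPOINTS[task]
    except KeyError:
        known = ", ".join(sorted(TASK_CHECKPOINTS))
        raise ValueError(f"No checkpoint configured for {task!r}; known tasks: {known}") from None


def build_pipeline(task: str):
    """Create a transformers pipeline for the task using the mapped checkpoint."""
    checkpoint = checkpoint_for(task)  # validate before importing the heavy library
    from transformers import pipeline

    return pipeline(task, model=checkpoint)


def main() -> None:
    # Downloads the checkpoints on first use, so this needs network access.
    classifier = build_pipeline("text-classification")
    print(classifier("Transformers makes NLP approachable."))

    generator = build_pipeline("text-generation")
    outputs = generator("Hugging Face Transformers makes", max_new_tokens=20)
    print(outputs[0]["generated_text"])
```

Keeping the mapping in one dictionary makes it easy to swap in a different checkpoint from the Hub without touching the calling code.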