NVIDIA Deep Learning Inference Platform Performance Study | NVIDIA

NVIDIA® TensorRT™ is an SDK for high-performance deep learning inference. It is designed to complement training frameworks such as TensorFlow, PyTorch, and MXNet, and it focuses specifically on running an already trained network quickly and efficiently on NVIDIA hardware.

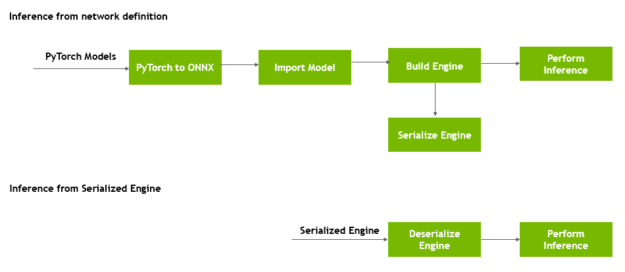

Speeding Up Deep Learning Inference Using NVIDIA TensorRT (Updated)

TensorRT provides APIs and parsers to import trained models from all major deep learning frameworks. It then generates optimized runtime engines deployable in the data center as well as in automotive and embedded environments. This post provides a simple introduction to using TensorRT.

Introduction to NVIDIA TensorRT: an SDK for high-performance deep learning inference that delivers the performance, efficiency, and responsiveness critical to powering the next generation of AI products and services, whether in the cloud, in the data center, at the edge, or in automotive environments.

This document provides an introduction to NVIDIA TensorRT, a platform for high-performance deep learning inference optimization and runtime with open source components. It covers the core architecture, key components, and main use cases of the TensorRT library. NVIDIA released TensorRT as a high-performance DL inference engine for production deployments of deep learning models.
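The import-then-build flow described above can be sketched with the TensorRT Python API. This is a minimal sketch, not the post's own code: it assumes the trained model has already been exported to ONNX, that the `tensorrt` Python package and a supported NVIDIA GPU are available, and that the installed TensorRT version still exposes the `EXPLICIT_BATCH` flag (required on 8.x, deprecated on 10.x). The function name `build_engine_from_onnx` and both path parameters are hypothetical.

```python
def build_engine_from_onnx(onnx_path, engine_path):
    """Parse an ONNX file and write a serialized TensorRT engine to disk.

    Hypothetical helper for illustration; paths are supplied by the caller.
    """
    # Imported lazily so this module still loads on machines without TensorRT.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)

    # Assumption: explicit-batch flag is present in the installed version.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)

    # The ONNX parser populates the network definition from the model file.
    parser = trt.OnnxParser(network, logger)
    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parse failed:\n" + "\n".join(errors))

    # Build with default settings and serialize the optimized engine.
    config = builder.create_builder_config()
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")

    with open(engine_path, "wb") as f:
        f.write(serialized)
    return engine_path
```

The serialized engine is specific to the GPU and TensorRT version it was built with, so in practice it is usually built on, or for, the deployment target.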

Speeding Up Deep Learning Inference Using TensorRT | NVIDIA Technical Blog

What is NVIDIA TensorRT, and how does it speed up AI inference in vision tasks? NVIDIA TensorRT is a high-performance deep learning inference library and optimizer that plays a crucial role in accelerating AI workloads, particularly in vision tasks. In this article, we'll explore TensorRT, its benefits, and the steps involved in converting a PyTorch or TensorFlow model to a TensorRT model.

What is TensorRT? NVIDIA TensorRT is an SDK for optimizing trained deep learning models to enable high-performance inference. It contains a deep learning inference optimizer and a runtime for execution. More broadly, NVIDIA® TensorRT™ is an ecosystem of tools for developers to achieve high-performance deep learning inference: it includes inference compilers, runtimes, and model optimizations that deliver low latency and high throughput for production applications.
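Latency and throughput are the two figures such claims rest on, so it helps to see how they might be measured. The following is a framework-agnostic sketch using only the Python standard library; `benchmark`, `infer`, `batch`, and `batch_size` are hypothetical names, and `infer` stands in for whatever callable runs one batch through a model. For GPU inference, `infer` would also need to wait for the device to finish before returning, otherwise the timings only capture kernel launch.

```python
import statistics
import time


def benchmark(infer, batch, batch_size, warmup=5, iters=50):
    """Time repeated calls to infer(batch); report latency and throughput."""
    # Warm-up runs are excluded so one-time setup cost does not skew results.
    for _ in range(warmup):
        infer(batch)

    latencies = []
    for _ in range(iters):
        start = time.perf_counter()
        infer(batch)
        latencies.append(time.perf_counter() - start)

    total_seconds = sum(latencies)
    return {
        "mean_latency_ms": 1000 * statistics.mean(latencies),
        "median_latency_ms": 1000 * statistics.median(latencies),
        # Samples processed per second across all timed iterations.
        "throughput_per_s": batch_size * iters / total_seconds,
    }


if __name__ == "__main__":
    # Stand-in workload: summing a list plays the role of one inference call.
    data = list(range(10_000))
    print(benchmark(sum, data, batch_size=len(data)))
```

Running the same harness on a model before and after conversion gives a like-for-like comparison, provided the batch size and input shapes are held constant.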
